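I. Introduction to Pod Scheduling

By default, the Scheduler component decides which node each Pod runs on. That is impractical when, at work or in study, you need a particular pod to run on a particular machine. In those cases you can use several scheduling mechanisms: fully automatic scheduling, directed scheduling, affinity scheduling, and taints with tolerations.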

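II. Fully Automatic Scheduling with Deployment/RC

1. Introduction

Their main job is to automatically deploy multiple replicas of a container and to continuously maintain the replica count (replicas) that the user specifies.

2. Example

Create a Deployment to manage the pods, ask for 2 replicas, let the pods be scheduled automatically, and then check where they landed.

(1) Deployment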

[root@k8s-master pod]# kubectl create deployment my-nginx --image=nginx --replicas=2 --port=80 -n myns --dry-run=client -o yaml > mydeployment.yaml

[root@k8s-master pod]# cat mydeployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: my-nginx
  name: my-nginx
  namespace: myns
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: my-nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        ports:
        - containerPort: 80
        resources: {}
status: {}

[root@k8s-master pod]# kubectl apply -f mydeployment.yaml

[root@k8s-master pod]# kubectl get deploy -n myns #two replicas were created

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 2/2 2 2 8m17s

[root@k8s-master pod]# kubectl get pods -n myns -o wide #each of the two nodes received one pod

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-64f6999999-5j7nl 1/1 Running 0 8m40s 10.244.36.65 k8s-node1 <none> <none>

my-nginx-64f6999999-hjbd4 1/1 Running 0 8m40s 10.244.169.130 k8s-node2 <none> <none>

(2) RC

[root@k8s-master pod]# cat myrc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-rc
  namespace: myns
spec:
  replicas: 2
  selector:
    app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-container
        image: nginx
        ports:
        - containerPort: 80

[root@k8s-master pod]# kubectl apply -f myrc.yaml

replicationcontroller/nginx-rc created

[root@k8s-master pod]# kubectl get rc -n myns

NAME DESIRED CURRENT READY AGE

nginx-rc 2 2 2 25s

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-rc-4qfm8 1/1 Running 0 36s 10.244.36.70 k8s-node1 <none> <none>

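nginx-rc-xmdbc 1/1 Running 0 36s 10.244.169.133 k8s-node2 <none> <none>

III. Scheduling to a Specific Node with nodeSelector/nodeName

1. How it works

(1) How nodeSelector works

Label a managed node, then have the pod specify that it must be scheduled onto a node carrying that label. This requires that a node with the label exists in the cluster: if none does, the pod stays unscheduled even when other healthy nodes are available. If several nodes share the label, the Scheduler picks one available node among them.

(2) How nodeName works

It forces the pod onto the node with the given name, that is, a node name as listed by "kubectl get nodes". Because the pod is bound to the node directly, the Scheduler does not take part in the placement.

2. Example

(1) nodeSelector: reuse mydeployment.yaml for the test. Label the node, add the nodeSelector parameter to target it, and after applying the file you can see that all 3 replicas run on node1.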

[root@k8s-master pod]# kubectl label nodes k8s-node1 name=node1

[root@k8s-master pod]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready control-plane 34m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-node1 Ready <none> 34m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node1,kubernetes.io/os=linux,name=node1

k8s-node2 Ready <none> 33m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node2,kubernetes.io/os=linux,name=node2

[root@k8s-master pod]# cat mydeployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: my-nginx
  name: my-nginx
  namespace: myns
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: my-nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        ports:
        - containerPort: 80
      nodeSelector:
        name: node1

[root@k8s-master pod]# kubectl apply -f mydeployment.yaml

deployment.apps/my-nginx created

[root@k8s-master pod]# kubectl get deployments -n myns

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 3/3 3 3 23s

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-74db7ccb9b-gvgfk 1/1 Running 0 31s 10.244.36.67 k8s-node1 <none> <none>

my-nginx-74db7ccb9b-kpsb2 1/1 Running 0 31s 10.244.36.66 k8s-node1 <none> <none>

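my-nginx-74db7ccb9b-xwpjt 1/1 Running 0 31s 10.244.36.68 k8s-node1 <none> <none>

(2) nodeName: replace nodeSelector with nodeName, set to a node name reported by "kubectl get nodes". All 3 replicas again run on k8s-node1.

[root@k8s-master pod]# kubectl get nodes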

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 70m v1.28.2

k8s-node1 Ready <none> 70m v1.28.2

k8s-node2 Ready <none> 70m v1.28.2

[root@k8s-master pod]# cat mydeployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: my-nginx
  name: my-nginx
  namespace: myns
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: my-nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        ports:
        - containerPort: 80
      nodeName: k8s-node1

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-7544fbf745-gwxv7 1/1 Running 0 85s 10.244.36.70 k8s-node1 <none> <none>

my-nginx-7544fbf745-kn8lj 1/1 Running 0 85s 10.244.36.71 k8s-node1 <none> <none>

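my-nginx-7544fbf745-q6w6j 1/1 Running 0 85s 10.244.36.69 k8s-node1 <none> <none>

3. Predefined Kubernetes node labels

Listing the labels of the nodes shows a long string of labels that already exist. These are the labels Kubernetes predefines on every node, and you can reference them in nodeSelector to target nodes without adding labels of your own. Most of them describe the machine architecture, the operating system, and the hostname.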
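As a minimal sketch of scheduling by predefined labels, the Pod below selects nodes by operating system and architecture instead of a custom label. The pod name is hypothetical; the label keys and values are the ones visible in the `kubectl get nodes --show-labels` output in this article.

```yaml
# Sketch only: nginx-by-arch is a hypothetical name chosen for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-by-arch
  namespace: myns
spec:
  nodeSelector:               # a node must carry every label listed here
    kubernetes.io/os: linux
    kubernetes.io/arch: amd64
  containers:
  - name: nginx
    image: nginx
```

In the three-node cluster used here every node carries both labels, so the selector does not narrow the choice by itself; it becomes useful in clusters that mix architectures or operating systems.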

[root@k8s-master pod]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready control-plane 41m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-node1 Ready <none> 40m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node1,kubernetes.io/os=linux,name=node1

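k8s-node2 Ready <none> 40m v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node2,kubernetes.io/os=linux,name=node2

IV. Affinity Scheduling

1. Introduction

Affinity scheduling addresses the limitation of directed scheduling described above, where a pod cannot be scheduled when no node matches the condition, even though other nodes are available. With a preferred (soft) affinity rule, the scheduler first looks for nodes that satisfy the rule and falls back to other available nodes if none do. A required (hard) rule still leaves the pod Pending when nothing matches.

2. The three types of affinity

(1) nodeAffinity: node affinity

a. Centered on nodes: it decides which nodes a pod may be scheduled onto.

b. Two forms and their configurable fields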

[root@k8s-master pod]# kubectl explain pod.spec.affinity.nodeAffinity

KIND: Pod

VERSION: v1

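preferredDuringSchedulingIgnoredDuringExecution <[]PreferredSchedulingTerm>
#Soft rule: the scheduler tries to satisfy it but does not insist. If no node matches, the pod is placed on another available node. When there are several preferred rules, their weights rank them
  - weight: 1 #Weight in the range 1-100. For each node the weights of the matching terms are added up, so a higher weight means a stronger preference
    preference: #Node selector term associated with the weight
      matchExpressions: #Select target nodes by their labels
      - key: kubernetes.io/arch #Label key
        operator: In
        values:
        - amd64

requiredDuringSchedulingIgnoredDuringExecution <NodeSelector>
#Hard rule: the pod can only be scheduled onto a node that satisfies it
  nodeSelectorTerms: #List of node selector terms
  - matchExpressions: #Or matchFields; matchExpressions is the usual choice and selects nodes by their labels
    - key: kubernetes.io/hostname #Label key
      operator: In
      #operator is one of In, NotIn, Exists, DoesNotExist, Gt (greater than), Lt (less than)
      values: #Label values
      - k8s-node1

c. Example

The example below must run on the node whose hostname is k8s-node1, and preferably on a machine with the amd64 architecture.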

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 9s 10.244.36.82 k8s-node1 <none> <none>

[root@k8s-master pod]# cat myaffinity.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  namespace: myns
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
            - k8s-node1
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: kubernetes.io/arch
            operator: In
            values:
            - amd64
  containers:
  - name: nginx-container
    image: nginx:latest
    ports:
    - name: nginx-port
      containerPort: 80

If the hard rule is changed to a node that does not exist, scheduling fails:

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  namespace: myns
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
            - k8s-node3
      #preferredDuringSchedulingIgnoredDuringExecution:
      #- weight: 1
      #  preference:
      #    matchExpressions:
      #    - key: kubernetes.io/arch
      #      operator: In
      #      values:
      #      - amd64
  containers:
  - name: nginx-container
    image: nginx:latest
    ports:
    - name: nginx-port
      containerPort: 80

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 0/1 Pending 0 6s <none> <none> <none> <none>

[root@k8s-master pod]# kubectl describe pod nginx-pod -n myns | tail -5

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 25s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) didn't match Pod's node affinity/selector. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling..

If instead the soft rule points at a node that does not exist, the scheduler picks another available node for the pod:

[root@k8s-master pod]# cat myaffinity.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  namespace: myns
spec:
  affinity:
    nodeAffinity:
      #requiredDuringSchedulingIgnoredDuringExecution:
      #  nodeSelectorTerms:
      #  - matchExpressions:
      #    - key: kubernetes.io/hostname
      #      operator: In
      #      values:
      #      - k8s-node3
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
            - k8s-node3
  containers:
  - name: nginx-container
    image: nginx:latest
    ports:
    - name: nginx-port
      containerPort: 80

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

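nginx-pod 1/1 Running 0 8s 10.244.36.83 k8s-node1 <none> <none>

d. Notes

nodeSelector and nodeAffinity can be configured together, but the conditions of both must be satisfied for the pod to be scheduled.

If a node's labels change after a pod has been scheduled onto it, so that the affinity rule no longer matches, the system ignores the change. That is what IgnoredDuringExecution means in requiredDuringSchedulingIgnoredDuringExecution.

When one nodeSelectorTerms entry contains several matchExpressions, all of them must be satisfied. When several nodeSelectorTerms entries are listed, satisfying any one of them is enough.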
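The combination rules can be sketched in one manifest. The pod name is hypothetical, and the `name=node1` and `name=node2` labels are the custom labels shown on the nodes earlier in this article. The required rule reads as "(os is linux AND name is node1) OR (name is node2)", and the preferred rules then favor node1, because a larger weight counts for more.

```yaml
# Sketch only: nginx-affinity-demo is a hypothetical name.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-affinity-demo
  namespace: myns
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        # Term 1: both expressions must hold (AND)
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values:
            - linux
          - key: name
            operator: In
            values:
            - node1
        # Term 2: an alternative to term 1 (OR)
        - matchExpressions:
          - key: name
            operator: In
            values:
            - node2
      preferredDuringSchedulingIgnoredDuringExecution:
      # node1 earns weight 10 and node2 earns weight 1
      - weight: 10
        preference:
          matchExpressions:
          - key: name
            operator: In
            values:
            - node1
      - weight: 1
        preference:
          matchExpressions:
          - key: name
            operator: In
            values:
            - node2
  containers:
  - name: nginx-container
    image: nginx
```

The affinity weight is only one input to the scheduler's scoring, so node1 is favored rather than guaranteed.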

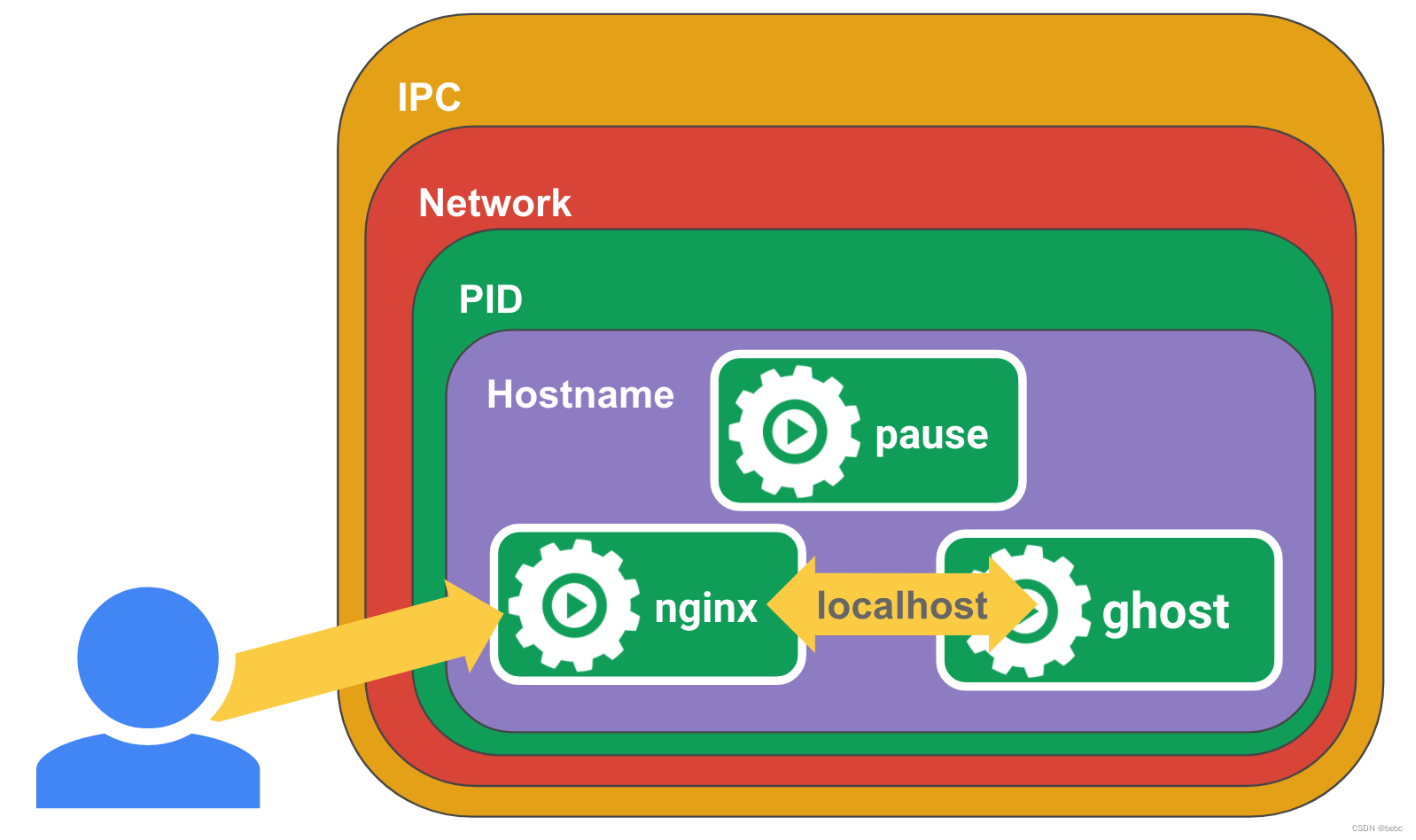

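(2) podAffinity: pod affinity

a. Centered on pods: it decides whether a pod is placed in the same topology domain as other pods.

To reduce network overhead, it is mostly used when two pod applications communicate with each other frequently.

b. Two forms and their configurable fields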

[root@k8s-master pod]# kubectl explain pod.spec.affinity.podAffinity

KIND: Pod

VERSION: v1

FIELDS:

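preferredDuringSchedulingIgnoredDuringExecution <[]WeightedPodAffinityTerm>
#Soft rule, satisfied when possible
  - weight: 3
    podAffinityTerm: #The affinity term
      labelSelector:
        matchExpressions:
        - key: kubernetes.io/arch
          operator: In
          values:
          - amd64
      topologyKey: kubernetes.io/arch

requiredDuringSchedulingIgnoredDuringExecution <[]PodAffinityTerm>
#Hard rule, must be satisfied
  - labelSelector: #Label selector; it selects the reference pods by their pod labels
      matchExpressions: #Same structure as in nodeAffinity, but only In, NotIn, Exists and DoesNotExist are valid here
      - key: name
        operator: In
        values:
        - su1
    namespaces: xxx #Namespaces of the reference pods, given as a list of namespace names
    topologyKey: kubernetes.io/hostname #Scheduling scope, for example kubernetes.io/hostname (each node is its own domain) or kubernetes.io/os (nodes are grouped by operating system)
#labelSelector also accepts matchLabels, a map of key/value pairs; each pair is equivalent to a matchExpressions entry with operator In and a single value

c. Example

The example below requires the two nginx pods to run on the same node, using hostname as the scheduling scope.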

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx1 1/1 Running 0 9s 10.244.36.80 k8s-node1 <none> <none>

my-nginx2 1/1 Running 0 9s 10.244.36.81 k8s-node1 <none> <none>

[root@k8s-master pod]# cat twopod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx1
  labels:
    name: su1
  namespace: myns
spec:
  containers:
  - name: my-nginx1
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: my-nginx2
  labels:
    name: su2
  namespace: myns
spec:
  containers:
  - name: my-nginx2
    image: nginx
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: name
            operator: In
            values:
            - su1
        topologyKey: kubernetes.io/hostname
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 3
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: kubernetes.io/arch
              operator: In
              values:
              - amd64
          topologyKey: kubernetes.io/arch

(3) podAntiAffinity: pod anti-affinity

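a. Centered on pods: it keeps a pod out of the topology domain where certain other pods run.

To improve service availability, it is mostly used when an application has multiple replicas that should be spread across nodes. Its usage is the same as podAffinity.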
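For the multi-replica case, one possible sketch is a Deployment whose pods repel each other by matching their own label. The Deployment name and the `app` label value are hypothetical.

```yaml
# Sketch only: my-nginx-spread is a hypothetical name and label value.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx-spread
  namespace: myns
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-nginx-spread
  template:
    metadata:
      labels:
        app: my-nginx-spread
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: my-nginx-spread   # matches this Deployment's own pods
            topologyKey: kubernetes.io/hostname   # at most one such pod per node
      containers:
      - name: nginx
        image: nginx
```

Because the rule is a hard one, replicas beyond the number of schedulable nodes stay Pending. Using preferredDuringSchedulingIgnoredDuringExecution instead would let them share a node when necessary.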

b. Example

For a quick test of the effect, change podAffinity to podAntiAffinity in the example above.

[root@k8s-master pod]# cat twopod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx1
  labels:
    name: su1
  namespace: myns
spec:
  containers:
  - name: my-nginx1
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: my-nginx2
  labels:
    name: su2
  namespace: myns
spec:
  containers:
  - name: my-nginx2
    image: nginx
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: name
            operator: In
            values:
            - su1
        topologyKey: kubernetes.io/hostname
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 3
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: kubernetes.io/arch
              operator: In
              values:
              - amd64
          topologyKey: kubernetes.io/arch

[root@k8s-master pod]# kubectl get pods -n myns -o wide #my-nginx2 now runs on a different node

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx1 1/1 Running 0 9s 10.244.36.84 k8s-node1 <none> <none>

my-nginx2 1/1 Running 0 9s 10.244.169.132 k8s-node2 <none> <none>

V. Taints and Tolerations

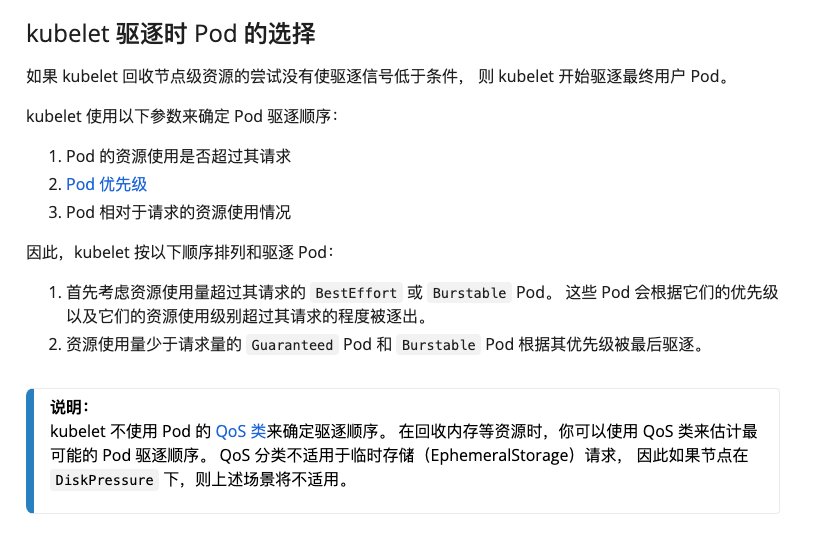

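1. Taints (node side)

A taint is an attribute added to a node that marks whether pods may be scheduled onto it.

(1) The three taint effects

PreferNoSchedule

Stay away if you can: the scheduler tries to avoid this node, but may still place pods here when no other node is schedulable.

NoSchedule

No new pods: pods that do not tolerate the taint are not scheduled here, while pods already running on the node are left alone.

NoExecute

No new pods and no old ones either: pods that do not tolerate the taint are not scheduled here, and those already running on the node are evicted.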
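For orientation, a taint is stored on the Node object as a key, a value and an effect under `spec.taints`. The excerpt below is a sketch of what `kubectl get node k8s-node1 -o yaml` would contain for a taint `status=taint` with effect NoSchedule, the key and value used in the examples later in this article. Other fields of the Node object are omitted.

```yaml
# Excerpt of a Node object; unrelated fields are left out.
apiVersion: v1
kind: Node
metadata:
  name: k8s-node1
spec:
  taints:
  - key: status
    value: taint
    effect: NoSchedule    # one of PreferNoSchedule, NoSchedule, NoExecute
```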

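(2) Adding, viewing, and removing taints

#Add
kubectl taint nodes nodename key=value:effect

#View
kubectl describe nodes nodename | grep Taints

#Remove
kubectl taint nodes nodename key:effect-

#Remove all taints that use this key
kubectl taint nodes nodename key-

(3) Example

Simulate a cluster in which k8s-node1 is the only available worker node, and demonstrate the three taint effects on it.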

[root@k8s-master pod]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready control-plane 8m32s v1.28.2 192.168.2.150 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.24

k8s-node1 Ready <none> 7m42s v1.28.2 192.168.2.151 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.24

a. PreferNoSchedule

After PreferNoSchedule is set, mytaint1 can still be scheduled onto the node because no other node is available.

[root@k8s-master pod]# kubectl taint nodes k8s-node1 status=taint:PreferNoSchedule

node/k8s-node1 tainted

[root@k8s-master pod]# kubectl run mytaint1 --image=nginx -n myns

pod/mytaint1 created

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mytaint1 1/1 Running 0 9s 10.244.36.66 k8s-node1 <none> <none>

[root@k8s-master pod]# kubectl taint nodes k8s-node1 status:PreferNoSchedule-

node/k8s-node1 untainted

b. NoSchedule

After NoSchedule is set, the new pod cannot be scheduled, but the existing mytaint1 is not affected.

[root@k8s-master pod]# kubectl taint nodes k8s-node1 status=taint:NoSchedule

node/k8s-node1 tainted

[root@k8s-master pod]# kubectl run mytaint2 --image=nginx -n myns

pod/mytaint2 created

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mytaint1 1/1 Running 0 2m54s 10.244.36.66 k8s-node1 <none> <none>

mytaint2 0/1 Pending 0 9s <none> <none> <none> <none>

[root@k8s-master pod]# kubectl taint nodes k8s-node1 status:NoSchedule-

node/k8s-node1 untainted

c. NoExecute

After NoExecute is set, the pods that were already on the node are evicted. Only mytaint3 remains, and it cannot be scheduled either.

[root@k8s-master pod]# kubectl taint nodes k8s-node1 status=taint:NoExecute

node/k8s-node1 tainted

[root@k8s-master pod]# kubectl run mytaint3 --image=nginx -n myns

pod/mytaint3 created

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mytaint3 0/1 Pending 0 16s <none> <none> <none> <none>

2. Tolerations (pod side)

When a pod really does need to run on a tainted node, give it a toleration.

(1) Toleration fields

[root@k8s-master pod]# kubectl explain pod.spec.tolerations

KIND: Pod

VERSION: v1

FIELDS:

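effect <string>
#The taint effect to match. If empty, all effects are matched; otherwise it must equal the effect of the taint on the target node

key <string>
#The taint key to match; it must equal the key of the taint on the target node

operator <string>
#Operator. Only Equal and Exists are supported; the default is Equal

tolerationSeconds <integer>
#Toleration time: how long the pod may stay on the node once the taint applies. It only takes effect when the effect is NoExecute

value <string>
#The taint value to match. With operator Equal it must equal the value of the taint on the target node; with operator Exists it is left empty

(2) Example

Successfully schedule my-to onto k8s-node1, which carries the taint "status=taint:NoExecute".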

[root@k8s-master pod]# kubectl describe node k8s-node1 | grep Taints

Taints: status=taint:NoExecute

[root@k8s-master pod]# cat mytoleration.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-to
  namespace: myns
spec:
  containers:
  - name: my-nginx
    image: nginx
  tolerations:
  - key: status
    operator: Equal
    value: taint
    effect: NoExecute

[root@k8s-master pod]# kubectl get pods -n myns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-to 1/1 Running 0 6m48s 10.244.36.68 k8s-node1 <none> <none>
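A variation on this example, sketched under assumptions: the pod name and the 60-second value are hypothetical. With operator Exists the toleration matches the `status` key whatever its value, so `value` is omitted. With tolerationSeconds set, a running pod stays bound for that long after a matching NoExecute taint is added to its node and is then evicted.

```yaml
# Sketch only: my-to-timed and the 60-second value are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: my-to-timed
  namespace: myns
spec:
  containers:
  - name: my-nginx
    image: nginx
  tolerations:
  - key: status
    operator: Exists          # any value of the key matches; no value field
    effect: NoExecute
    tolerationSeconds: 60     # time the pod stays bound before eviction
```

Leaving out tolerationSeconds, as in my-to above, means the pod tolerates the taint indefinitely.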

Original article: https://blog.csdn.net/weixin_64334766/article/details/134596464
