Abstract

Recently, Neural Radiance Fields (NeRF) has shown promising performance in reconstructing 3D scenes and synthesizing novel views from a sparse set of 2D images. Albeit effective, the performance of NeRF is highly influenced by the quality of training samples. With only a limited number of posed images of a scene, NeRF fails to generalize well to novel views and may collapse to trivial solutions in unobserved regions. This makes NeRF impractical in resource-constrained scenarios. In this paper, we present a novel learning framework, ActiveNeRF, which aims to model a 3D scene with a constrained input budget. Specifically, we first incorporate uncertainty estimation into a NeRF model, which ensures robustness under few observations and provides an interpretation of how NeRF understands the scene. On this basis, we propose to supplement the existing training set with newly captured samples based on an active learning scheme. By evaluating the reduction of uncertainty given new inputs, we select the samples that bring the most information gain. In this way, the quality of novel view synthesis can be improved with minimal additional resources. Extensive experiments validate the performance of our model on both realistic and synthetic scenes, especially when training data are scarce. Code will be released at https://github.com/LeapLabTHU/ActiveNeRF.


1 Introduction

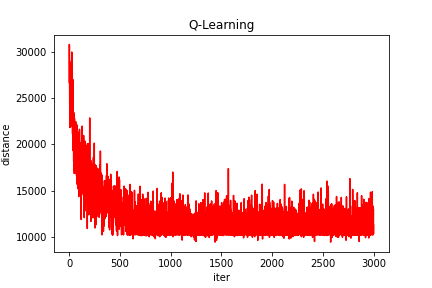

Despite its success in synthesizing high-quality images, NeRF's learning scheme places high demands on the training data. First, NeRF usually requires a large number of posed images and has been shown to generalize poorly with limited inputs [36]. Second, training a well-generalized NeRF requires observations that cover the whole scene. As illustrated in Figure 2, if we remove observations of a particular part of the scene, NeRF fails to model that region and tends to collapse (e.g., predicting zero density everywhere in the scene) instead of making reasonable predictions. This poses challenges in real-world applications such as robot localization and mapping, where capturing training data can be costly and perception of the entire scene is required [23,11,31].


3 Background

4 NeRF with Uncertainty Estimation

[…] $\mathbf{r}(t)$ follows a Gaussian distribution parameterized by mean $\bar{c}(\mathbf{r}(t))$ and variance $\bar{\beta}^2(\mathbf{r}(t))$ […] $\beta_0^2$ […] $\mathbf{r}(t)$ as

$$c(\mathbf{r}(t)) \sim \mathcal{N}\big(\bar{c}(\mathbf{r}(t)),\ \bar{\beta}^2(\mathbf{r}(t))\big)$$

[…] $\alpha_i$'s are the same as in Eq. (4), and $\bar{C}(\mathbf{r})$, $\bar{\beta}^2(\mathbf{r})$ denote the mean and variance of the rendered color through the sampled ray $\mathbf{r}$ […] $\{\mathbf{r}_i\}_{i=1}^{N}$ from a batch $\mathcal{B}$ […] $\alpha_i$ […]
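The fragments above pair per-sample Gaussian colors with rendering weights $\alpha_i$ and a mean and variance of the rendered color. Below is a minimal sketch of how such quantities combine. It assumes three things: the rendered color is the $\alpha$-weighted sum of independent per-sample Gaussians, a single color channel is used, and training minimizes a standard Gaussian negative log-likelihood averaged over a batch of rays. The function names are hypothetical, and the loss form is an assumption, not necessarily the paper's exact objective.

```python
import math
from typing import Sequence, Tuple

def render_gaussian_ray(
    alphas: Sequence[float], means: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float]:
    """Mean and variance of sum_i alphas[i] * c_i for independent c_i ~ N(means[i], variances[i]).

    One color channel for brevity. A weighted sum of independent Gaussians is Gaussian
    with mean sum(a * m) and variance sum(a**2 * v).
    """
    if not (len(alphas) == len(means) == len(variances)):
        raise ValueError("per-sample sequences must have equal length")
    mean = sum(a * m for a, m in zip(alphas, means))
    var = sum(a * a * v for a, v in zip(alphas, variances))
    return mean, var

def gaussian_nll(target: float, mean: float, var: float) -> float:
    """Gaussian negative log-likelihood of `target`, omitting the constant 0.5 * log(2 * pi)."""
    if var <= 0.0:
        raise ValueError("variance must be positive")
    return (target - mean) ** 2 / (2.0 * var) + 0.5 * math.log(var)

def batch_loss(batch) -> float:
    """Average NLL over a batch; each item is (target, alphas, means, variances) for one ray."""
    total = 0.0
    for target, alphas, means, variances in batch:
        mean, var = render_gaussian_ray(alphas, means, variances)
        total += gaussian_nll(target, mean, var)
    return total / len(batch)

# Two equally weighted samples along one ray (toy numbers).
print(render_gaussian_ray([0.5, 0.5], [0.2, 0.6], [0.04, 0.04]))
```

Under this NLL, rays with a large predicted variance have their squared error down-weighted. The $\tfrac{1}{2}\log$ term penalizes inflating the variance without limit.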

5 ActiveNeRF

5.1 Prior and Posterior Distribution

Let $\mathcal{D}_1$ denote the existing training set and $F_\theta$ denote the trained NeRF model given $\mathcal{D}_1$. For simplicity, we first consider the influence of a single ray $\mathbf{r}_2$ from the new input $\mathcal{D}_2$. Thus, for the $k$-th […] $\mathbf{r}_2(t_k)$ […] $\mathbf{r}_2$ are independent of $\mathbf{r}_2(t_k)$, we can represent the unrelated part of the mean as a constant $b(t_k)$ […]
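The fragments describe isolating one location $\mathbf{r}_2(t_k)$ on a new ray and folding the unrelated terms into a constant $b(t_k)$. The sketch below illustrates that kind of update with the textbook conjugate-Gaussian rule. It assumes that the observed ray color equals $\alpha_k\, c(\mathbf{r}_2(t_k)) + b(t_k)$ plus zero-mean Gaussian noise of known variance. The function name and the treatment of the noise variance are hypothetical; this is not the paper's exact derivation.

```python
from typing import Tuple

def gaussian_posterior(
    prior_mean: float,
    prior_var: float,
    weight: float,
    offset: float,
    noise_var: float,
    observed: float,
) -> Tuple[float, float]:
    """Conjugate update for c ~ N(prior_mean, prior_var) given one observation
    y = weight * c + offset + eps, with eps ~ N(0, noise_var).

    Illustrative mapping (hypothetical): `weight` ~ alpha_k, `offset` ~ b(t_k),
    `observed` ~ the captured ray color.
    """
    if prior_var <= 0.0 or noise_var <= 0.0:
        raise ValueError("variances must be positive")
    # Precisions add: prior precision plus the information carried by the observation.
    post_var = 1.0 / (1.0 / prior_var + weight * weight / noise_var)
    # Precision-weighted combination of the prior mean and the offset-corrected observation.
    post_mean = post_var * (prior_mean / prior_var + weight * (observed - offset) / noise_var)
    return post_mean, post_var
```

In this sketch `post_var` never uses `observed`. This is consistent with the statement in Section 5.2 that the posterior variance is independent of the ground-truth value.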

5.2 Acquisition Function

[…] $C(\mathbf{r}_2)$, the variance is independent of the ground-truth value and can therefore be computed exactly from the current model $F_\theta$. Additionally, it is worth noting that the variance of the posterior distribution of a newly observed location $\mathbf{r}_2(t_k)$ […] $\mathbf{r}_2(t_k)$ from the new ray $\mathbf{r}_2$ […] $H, W$, we can sample $N = H \times W$ independent rays, with $N_s$ […] $\mathbf{r}_i$ denotes a ray from a different image, and $x = \mathbf{r}_i(t_{k_i}),\ \forall i$. Please refer to Appendix B for details.
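Because the posterior variance does not depend on the observed color, a candidate view can be scored before it is captured. The sketch below makes two assumptions. First, the acquisition score is the total variance reduction summed over sampled locations. Second, each ray that hits a location $x$ contributes independent Gaussian evidence, so precisions add, matching the independent-rays setting above. The names and this exact scoring rule are hypothetical; Appendix B of the paper holds the actual details.

```python
from typing import Iterable, Sequence, Tuple

def posterior_variance(prior_var: float, weights: Sequence[float], noise_vars: Sequence[float]) -> float:
    """Posterior variance of one 3D location observed by several independent rays.

    Ray i contributes precision weights[i]**2 / noise_vars[i] (conjugate Gaussian update),
    so the observed colors themselves are never needed.
    """
    if len(weights) != len(noise_vars):
        raise ValueError("weights and noise_vars must have equal length")
    precision = 1.0 / prior_var
    for w, s in zip(weights, noise_vars):
        precision += w * w / s
    return 1.0 / precision

def acquisition_score(locations: Iterable[Tuple[float, Sequence[float], Sequence[float]]]) -> float:
    """Total variance reduction: sum over locations of (prior variance - posterior variance).

    Each location is (prior_var, weights, noise_vars) for the rays that hit it.
    """
    return sum(pv - posterior_variance(pv, w, s) for pv, w, s in locations)
```

A location that no candidate ray hits keeps its prior variance and adds nothing to the score.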

In practice, we first sample candidate views from a spherical space and choose the top-k candidates that score highest under the acquisition function as supplements to the current training set. In this way, the newly captured inputs bring the most information gain and improve the current model as efficiently as possible.
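The selection step can be sketched as follows. This assumes camera centres are drawn uniformly on a sphere around the scene, with each camera presumed to look at the origin, and that `score_fn` wraps an acquisition function such as a variance-reduction score. `sample_sphere_positions`, `select_top_k` and the toy score are hypothetical names, not the released code.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

Vec3 = Tuple[float, float, float]
T = TypeVar("T")

def sample_sphere_positions(n: int, radius: float, seed: int = 0) -> List[Vec3]:
    """Draw n camera centres uniformly on a sphere of the given radius.

    Normalising an isotropic 3D Gaussian sample gives a uniformly distributed direction.
    """
    rng = random.Random(seed)
    points: List[Vec3] = []
    while len(points) < n:
        x, y, z = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        norm = math.sqrt(x * x + y * y + z * z)
        if norm < 1e-12:  # practically never happens; guards against division by zero
            continue
        points.append((radius * x / norm, radius * y / norm, radius * z / norm))
    return points

def select_top_k(candidates: Sequence[T], score_fn: Callable[[T], float], k: int) -> List[T]:
    """Return the k candidates with the highest score; ties keep their original order."""
    return sorted(candidates, key=score_fn, reverse=True)[:k]

# Hypothetical stand-in score: prefer cameras high above the scene.
views = sample_sphere_positions(20, radius=4.0, seed=1)
chosen = select_top_k(views, score_fn=lambda p: p[2], k=3)
```

Scoring every candidate and then sorting is simple and adequate for a few hundred candidates. For much larger pools, `heapq.nlargest` avoids a full sort.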

In addition, a quality-efficiency trade-off can be achieved by evaluating new inputs at a lower resolution. For example, instead of using all $H \times W$ rays of the full image, we can sample $H/r \times W/r$ rays to approximate the influence of the whole image with only $1/r^2$ of the computational cost.
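Here $r$ is read as an integer downsampling rate, distinct from the ray $\mathbf{r}$. One simple way to obtain the $H/r \times W/r$ subset is to keep every $r$-th pixel in each direction. The sketch below works under that assumption; the paper may use a different subsampling pattern, and the function name is hypothetical.

```python
from typing import List, Tuple

def strided_pixels(height: int, width: int, rate: int) -> List[Tuple[int, int]]:
    """(row, col) pixel coordinates kept when subsampling an image by `rate` along each axis."""
    if rate < 1:
        raise ValueError("rate must be >= 1")
    # Keeping every `rate`-th row and column leaves about 1 / rate**2 of the pixels.
    return [(i, j) for i in range(0, height, rate) for j in range(0, width, rate)]
```

For $H = W = 400$ and a rate of 4, this keeps 10,000 of 160,000 pixels, i.e. $1/16 = 1/r^2$ of the rays. When all candidates are scored at the same rate, rescaling the subsampled score (for example by $r^2$) multiplies every candidate's score by the same positive factor. The top-k ranking is therefore unchanged.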

5.3 Optimization and Inference

[…] $C(\mathbf{r})$ […]