官方文档

https://clickhouse.com/docs/zh/getting–started/tutorial#cluster–deployment

https://clickhouse.com/docs/en/engines/table–engines/special/distributed

https://zookeeper.apache.org/

Clickhouse的优点

列式存储数据库,数据压缩;

关系型、支持SQL;

分布式并行计算,把单机性能压榨到极限;

高可用;数据量级在PB级别。

集群的分片和副本和节点数量的选择,CK officially supports only one shard/replica per node

分片数*副本数=节点数

最佳实践就是3分片2副本6节点

3节点3分片2副本,无法配置起来,会报错DB::Exception: There are two exactly the same ClickHouse instances DAILACHDBUD001:9000 in cluster cluster_3shared2replicas

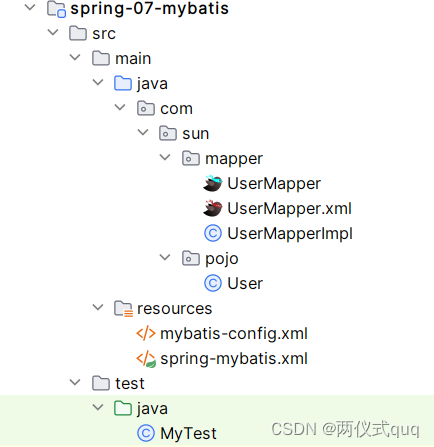

ClickHouse集群集群部署设置步骤:

在群集的所有机器上安装ClickHouse服务端

在配置文件中设置集群配置

在每个实例上创建本地表

创建一个分布式表,分布式表实际上是一种view,映射到ClickHouse集群的本地表。 从分布式表中执行SELECT查询会使用集群所有分片的资源。 您可以为多个集群指定configs,并创建多个分布式表,为不同的集群提供视图。

备注:也就是说集群的最终目标就是为了能创建分布式表,通过分布式表可以实现读取量发布到各个节点,也是为了能给大表做横向扩展。

集群<cluster_2shared2replicas>配置项对ENGINE = Distributed表和ENGINE = ReplicatedMergeTree表和MergeTree表有效,集群配置项中只对ENGINE = ReplicatedMergeTree表有效。单独的ENGINE = MergeTree仅仅是对表的数据做分片,这样的话Clickhouse缺少了两个功能————数据高可用(HA)和横向扩展。HA的目的是为了如果有一个数据副本丢失或者损坏不至于完全丢失数据,至于横向扩展自然是为了提高数据存储能力了。数据高可用(HA)的实现需要使用ReplicatedMergeTree表引擎,横向扩展的实现需要使用Distributed表引擎,MergeTree表引擎+Distributed表引擎同时使用,只能做分片并把分片分发到不同节点,但是Distributed表查询的总量还是异常

Clickhouse除了提供TCP 9000端口的连接,还提供了HTTP 8123端口的连接http://ip:8123/play

Clickhouse本身没有类似Mongodb一样的Mongos Route Server前端路由实例让整个集群看上去像单一连接点以便前端应用可以透明连接该路由实例,但是可以通过F5或A10提供的负载均衡功能来实现Clickhouse的路由接入,通过负载均衡设置提供的单一地址实现客户端连接到多个不同clickhouse节点

ClickHouse集群环境下,DDL语句的同步使用ZooKeeper来同步,所以在一个节点上createalter本地表时加上on cluster clustername的话,这张表的表结构就会同时在其他节点上被createalter

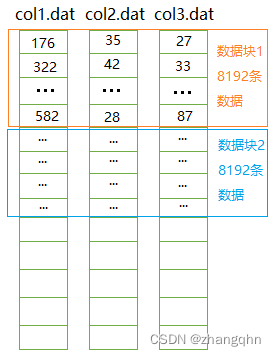

clickhouse集群是非主从结构,各个节点是相互独立的,这点和mongodb不一样。分片(Shard)就是将一份数据分片段存储到多台服务器上,每台服务器只存储这份数据的一部分,在这种架构下,每台服务器被称为一个分片(Shard)。副本(Replica)就是将一份数据冗余存储到多台服务器上。假如4台服务器的集群环境下,2分片2副本的集群策略的话,就是把一份数据分成2个片段来存储,这2个片段都有2份,也就是这一份数据在物理上变成了4个单元,其中4个单元分成2个片段,每个片段的2份的数据是一模一样。

本地表(Local Table)

数据只会存储在当前写入的节点上,不会被分散到多台服务器上。本地表的写入和查询,受限于单台服务器的存储、计算资源,不具备横向拓展能力。

分布式表(Distributed Table)

本地表的集合,它将多个本地表抽象为一张统一的表,对外提供写入、查询功能。当写入分布式表时,数据会被自动分发到集合中的各个本地表中;当查询分布式表时,集合中的各个本地表都会被分别查询,并且把最终结果汇总后返回。分布式表的写入和查询,可以利用多台服务器的存储、计算资源,具有较好的横向拓展能力。ClickHouse集群就是因为分布式表(Distributed Table)而产生的。

1、操作系统的配置

1.1、四节点的IP、服务器名称、OS信息如下

172.22.137.132 DAILACHDBUD001 Ubuntu 22.04

172.22.137.133 DAILACHDBUD002 Ubuntu 22.04

172.22.137.134 DAILACHDBUD003 Ubuntu 22.04

172.22.137.136 DAILACHDBUD004 Ubuntu 22.04

172.22.137.134 DAILACHDBUD003

172.22.137.133 DAILACHDBUD002

172.22.137.132 DAILACHDBUD001

172.22.137.136 DAILACHDBUD004

ufw disable

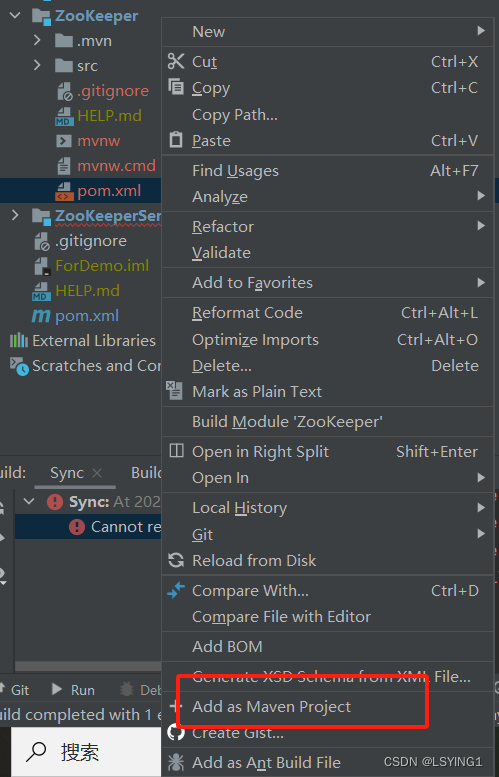

2、zookeeper 集群配置

2.1、下载zookeeper,进入zookeeper页面https://zookeeper.apache.org/releases.html可以看到各个版本,下载最新的稳定版

wget https://dlcdn.apache.org/zookeeper/zookeeper-3.7.1/apache-zookeeper-3.7.1-bin.tar.gz

2.2、四台服务器都把zookeeper安装包放到/chdata/zookeeper目录后并解压,拷贝zoo_sample.cfg成zoo.cfg,并修改或新增zoo.cfg,/chdata/zookeeper/apache-zookeeper-3.7.1-bin/conf/zoo.cfg内容如下

dataDir=/chdata/zookeeper/data

server.1=DAILACHDBUD001:2888:3888

server.2=DAILACHDBUD002:2888:3888

server.3=DAILACHDBUD003:2888:3888

server.4=DAILACHDBUD004:2888:3888

–备注:server.每个节点服务编号=服务器ip地址:集群通信端口:选举端口

2.3、四台服务器分别配置/chdata/zookeeper/data/myid,使/chdata/zookeeper/data/myid内容和上面步骤3中的server.1=DAILACHDBUD001对应,也就是1对应服务器DAILACHDBUD001

DAILACHDBUD001上执行:echo 1 >> /chdata/zookeeper/data/myid

DAILACHDBUD002上执行:echo 2 >> /chdata/zookeeper/data/myid

DAILACHDBUD003上执行:echo 3 >> /chdata/zookeeper/data/myid

DAILACHDBUD004上执行:echo 4 >> /chdata/zookeeper/data/myid

2.4、四台服务器安装jdk(不安装的话,启动zookeeper的时候会报错)并启动四台服务器的zookeeper,并查看四台服务器的角色

apt-cache search openjdk

apt install openjdk-18-jdk

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkServer.sh start /chdata/zookeeper/apache-zookeeper-3.7.1-bin/conf/zoo.cfg

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkServer.sh status /chdata/zookeeper/apache-zookeeper-3.7.1-bin/conf/zoo.cfg

2.5、连接四台服务器的zookeeper,检查是否可以正常连接

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkCli.sh -server DAILACHDBUD001:2181

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkCli.sh -server DAILACHDBUD002:2181

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkCli.sh -server DAILACHDBUD003:2181

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkCli.sh -server DAILACHDBUD004:2181

3、clickhouse集群配置

3.1、在四个节点上安装clickhouse,创建路径/chdata/clickhouse/data/和/chdata/clickhouse/user_files/用来分别存放数据文件和用户文件,由于操作系统是ubuntu,所以用ubuntu的官方预编译deb软件包

sudo apt-get install -y apt-transport-https ca-certificates dirmngr

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 8919F6BD2B48D754

echo "deb https://packages.clickhouse.com/deb stable main" > /etc/apt/sources.list.d/clickhouse.list

sudo apt-get update

sudo apt-get install -y clickhouse-server clickhouse-client

sudo service clickhouse-server start

3.2、在四个节点上配置2分片,每个分片3副本的集群策略,zookeeper默认端口是2181,参见/chdata/zookeeper/apache-zookeeper-3.7.1-bin/conf/zoo.cfg配置文件中clientPort配置项,clickhouse端口默认是9000,参见/etc/clickhouse-server/config.xml配置文件中tcp_port配置项。配置数据文件存放路径

/chdata/clickhouse/data/

和用户文件存放路径<user_files_path>/chdata/clickhouse/user_files/</user_files_path>,本机绑定地址让集群内的各个节点互通,配置宏来识别每个用于创建表的分片和副本(1-2节点配置01,3-4节点配置02,的话每个节点配置为自己的服务器名即可比如DAILACHDBUD001配置为DAILACHDBUD001)。

/chdata/clickhouse/data/

<user_files_path>/chdata/clickhouse/user_files/</user_files_path>

</remote_servers>

<cluster_2shared2replicas>

<internal_replication>true</internal_replication>

DAILACHDBUD001

9000

default

Won123_De1

<password_sha256_hex>9174c7d10af863c34f4fc684413740431cda606273ea1cea905d3f08cb84c820</password_sha256_hex>

DAILACHDBUD002

9000

default

Won123_De1

<password_sha256_hex>9174c7d10af863c34f4fc684413740431cda606273ea1cea905d3f08cb84c820</password_sha256_hex>

<internal_replication>true</internal_replication>

DAILACHDBUD003

9000

default

Won123_De1

<password_sha256_hex>9174c7d10af863c34f4fc684413740431cda606273ea1cea905d3f08cb84c820</password_sha256_hex>

DAILACHDBUD004

9000

default

Won123_De1

<password_sha256_hex>9174c7d10af863c34f4fc684413740431cda606273ea1cea905d3f08cb84c820</password_sha256_hex>

</cluster_2shared2replicas>

</remote_servers>

<zookeeper>

<node>

<host>DAILACHDBUD001</host>

<port>2181</port>

</node>

<node>

<host>DAILACHDBUD002</host>

<port>2181</port>

</node>

<node>

<host>DAILACHDBUD003</host>

<port>2181</port>

</node>

<node>

<host>DAILACHDBUD004</host>

<port>2181</port>

</node>

</zookeeper>

<macros>

<shard>01</shard>

<replica>DAILACHDBUD001</replica>

</macros>

<networks>

<ip>::/0</ip>

</networks>

备注1:如果新增配置文件,这配置文件中最上和最下的<></>里面内容试了只能是yandex或clickhouse,否则启动不了会报错Failed to merge config with /etc/clickhouse-server/config.d/clusterpolicy.xml,并且<></>里面的内容必须严格按照xml格式,否则启动不了会报错code=exited, status=232/ADDRESS_FAMILIES且通过journalctl –xe也看不到任何有效信息

备注2:首先查看config.xml是否已经有remote_servers配置项,有的话,在里面加就行了。个人遇到的问题:在config.xml里面添加了remote_servers配置项并自定义的集群名称后,重启发现clickhouse后没有看到自定义的集群名称,show clusters时一直显示系统默认的8个集群名称,后来才发现config.xml里面已经有了remote_servers配置项并且配置了默认的8个集群名称

备注3:参数internal_replication 是否启用内部复制,即写入数据时只写入到一个副本,其他副本的同步工作靠复制表和ZooKeeper异步进行。Whether to write data to just one of the replicas. Default: false (write data to all replicas).

备注4:项中指定shardreplica值后,以后创建复制表时可以直接使用ENGINE = ReplicatedMergeTree()不用带参数,参数会自动使用项中指定的shardreplica值代入到ReplicatedMergeTree(‘/clickhouse/tables/{shard}/{database}/table_name’, ‘{replica}’),参考https://clickhouse.com/docs/zh/engines/table–engines/mergetree–family/replication

备注5: 项中指定::/0,表示要为任何网络的用户打开访问权限

备注6:不启动clickhouse的情况下, 各个节点执行/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkServer.sh status可以看到谁是主谁是从,但是各个节点执行netstat -pan |grep 2181发现没有连接

备注7:10

,如果分片权重越大,那么其被写入的数据也会越多。

4、验证

4.1、4节点配置密码文件/etc/clickhouse-server/users.d/default-password.xml,如下两种形式都行

形式1

<yandex>

<users>

<default>

<password>Won123_De1</password>

</default>

</users>

</yandex>

形式2

<yandex>

<users>

<default>

<password remove='1' />

<password_sha256_hex>9174c7d10af863c34f4fc684413740431cda606273ea1cea905d3f08cb84c820</password_sha256_hex>

</default>

</users>

</yandex>

root@DAILACHDBUD001:~# systemctl start clickhouse-server

4.3、clickhouse-client命令行连接到clickhouse集群各个节点执行

root@DAILACHDBUD001:~# clickhouse-client -h DAILACHDBUD001 --port 9000 --password Won123_De1

root@DAILACHDBUD001:~# clickhouse-client -h DAILACHDBUD002 --port 9000 --password Won123_De1

root@DAILACHDBUD001:~# clickhouse-client -h DAILACHDBUD003 --port 9000 --password Won123_De1

root@DAILACHDBUD001:~# clickhouse-client -h DAILACHDBUD004 --port 9000 --password Won123_De1

DAILACHDBUD001 :) show clusters;

┌─cluster─────────────────────────────────────────┐

│ cluster_2shared2replicas │

│ parallel_replicas │

│ test_cluster_one_shard_three_replicas_localhost │

│ test_cluster_two_shards │

│ test_cluster_two_shards_internal_replication │

│ test_cluster_two_shards_localhost │

│ test_shard_localhost │

│ test_shard_localhost_secure │

│ test_unavailable_shard │

└─────────────────────────────────────────────────┘

备注:4个节点执行show clusters;都显示了cluster_2shared2replicas

DAILACHDBUD001 :) select cluster,shard_num,shard_weight,replica_num,host_name,host_address,port,user from system.clusters where cluster='cluster_2shared2replicas';

┌─cluster──────────────────┬─shard_num─┬─shard_weight─┬─replica_num─┬─host_name──────┬─host_address───┬─port─┬─user────┐

│ cluster_2shared2replicas │ 1 │ 1 │ 1 │ DAILACHDBUD001 │ 172.22.137.132 │ 9000 │ default │

│ cluster_2shared2replicas │ 1 │ 1 │ 2 │ DAILACHDBUD002 │ 172.22.137.133 │ 9000 │ default │

│ cluster_2shared2replicas │ 2 │ 1 │ 1 │ DAILACHDBUD003 │ 172.22.137.134 │ 9000 │ default │

│ cluster_2shared2replicas │ 2 │ 1 │ 2 │ DAILACHDBUD004 │ 172.22.137.136 │ 9000 │ default │

└──────────────────────────┴───────────┴──────────────┴─────────────┴────────────────┴────────────────┴──────┴─────────┘

备注:4个节点查询system.clusters系统表,都显示正都有cluster_2shared2replicas,且每个分片对应4台机器

5、设置开机启动,使用deb包安装的clickhouse已经自动配置了开机启动,剩下就是设置zookeeper的开机启动

5.1、编辑文件/root/script/startupzookeeper.sh内容如下

#!/bin/bash

/chdata/zookeeper/apache-zookeeper-3.7.1-bin/bin/zkServer.sh start /chdata/zookeeper/apache-zookeeper-3.7.1-bin/conf/zoo.cfg

5.2、编辑文件/usr/lib/systemd/system/zookeeper.service内容如下

[Unit]

Description=zookeeper

After=network.target

[Service]

User=root

Group=root

Type=forking

ExecStart=/bin/bash /root/script/startupzookeeper.sh

Restart=on-failure

[Install]

WantedBy=multi-user.target

5.3、执行systemctl enable zookeeper命令,设置开机启动

root@ODSLACHDBUP001:~# systemctl enable zookeeper

Created symlink /etc/systemd/system/multi-user.target.wants/zookeeper.service → /lib/systemd/system/zookeeper.service

clickhouse错误日志存放在/var/log/clickhouse-server/目录

3节点,3shard,3replicats配置如下,遇到很多问题

create database testlukes20230526 on cluster cluster_3shared3replicas;

报错

DAILACHDBUD001 . DB::Exception: There are two exactly the same ClickHouse instances DAILACHDBUD001:9000 in cluster cluster_3shared3replicas

DAILACHDBUD002 . DB::Exception: There are two exactly the same ClickHouse instances DAILACHDBUD002:9000 in cluster cluster_3shared3replicas

DAILACHDBUD003 . DB::Exception: There are two exactly the same ClickHouse instances DAILACHDBUD003:9000 in cluster cluster_3shared3replicas

报错解读:clickhouse不允许有相同host,因为不允许互相存有分片副本。每个分片每个副本需要配置在单独的节点上。(CK officially supports only one shard/replica per node. )

解决方法:在节点1 DAILACHDBUD001上重新配置3个分片的副本,只保留DAILACHDBUD002和DAILACHDBUD003,在节点2 DAILACHDBUD002上重新配置3个分片的副本,只保留DAILACHDBUD001和DAILACHDBUD003,在节点3 DAILACHDBUD003上重新配置3个分片的副本,只保留DAILACHDBUD001和DAILACHDBUD002

create database testlukes20230526 on cluster cluster_3shared3replicas;

继续报错

DAILACHDBUD001 . DB::Exception: Not found host DAILACHDBUD001:9000 in definition of cluster cluster_3shared3replicas.

DAILACHDBUD002 . DB::Exception: Not found host DAILACHDBUD002:9000 in definition of cluster cluster_3shared3replicas.

DAILACHDBUD003 . DB::Exception: Not found host DAILACHDBUD003:9000 in definition of cluster cluster_3shared3replicas.

<cluster_3shared3replicas>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD001</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD002</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD003</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD001</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD002</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD003</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD001</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD002</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD003</host>

<port>9000</port>

</replica>

</shard>

</cluster_3shared2replicas>

3节点,3shard,2 replicats配置如下,创建engine=MergeTree()的表时会报错DB::Exception: There are two exactly the same ClickHouse instances DAILACHDBUD001:9000 in cluster cluster_3shared2replicas

<cluster_3shared2replicas>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD001</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD002</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD002</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD003</host>

<port>9000</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>DAILACHDBUD003</host>

<port>9000</port>

</replica>

<replica>

<host>DAILACHDBUD001</host>

<port>9000</port>

</replica>

</shard>

</cluster_3shared2replicas>

原文地址:https://blog.csdn.net/lusklusklusk/article/details/134741272

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。

如若转载,请注明出处:http://www.7code.cn/show_23658.html

如若内容造成侵权/违法违规/事实不符,请联系代码007邮箱:suwngjj01@126.com进行投诉反馈,一经查实,立即删除!