1、’changelog-producer’ = ‘full-compaction’

2、’changelog-producer’ = ‘lookup’

前言

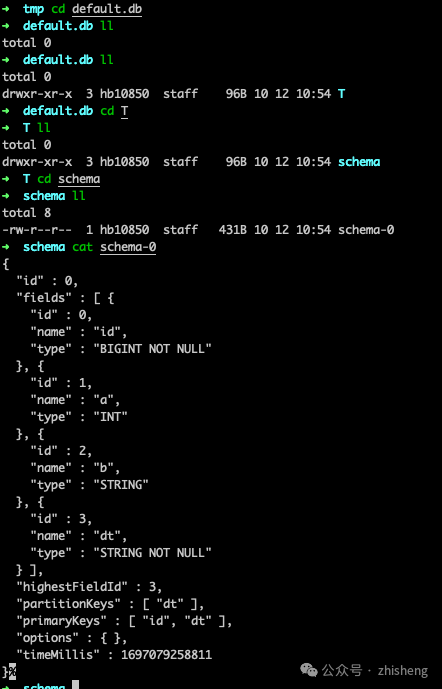

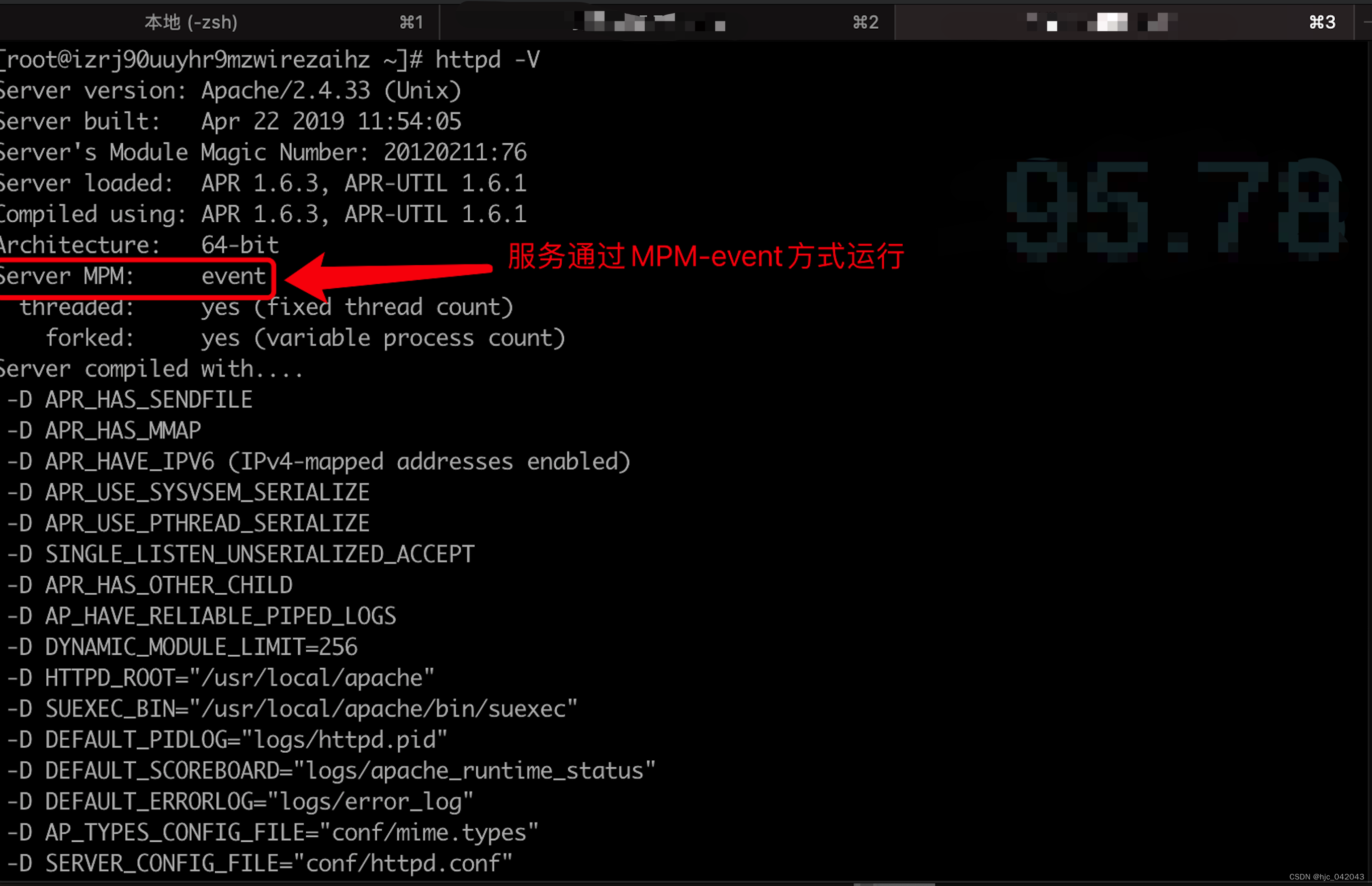

1. 什么是 Apache Paimon

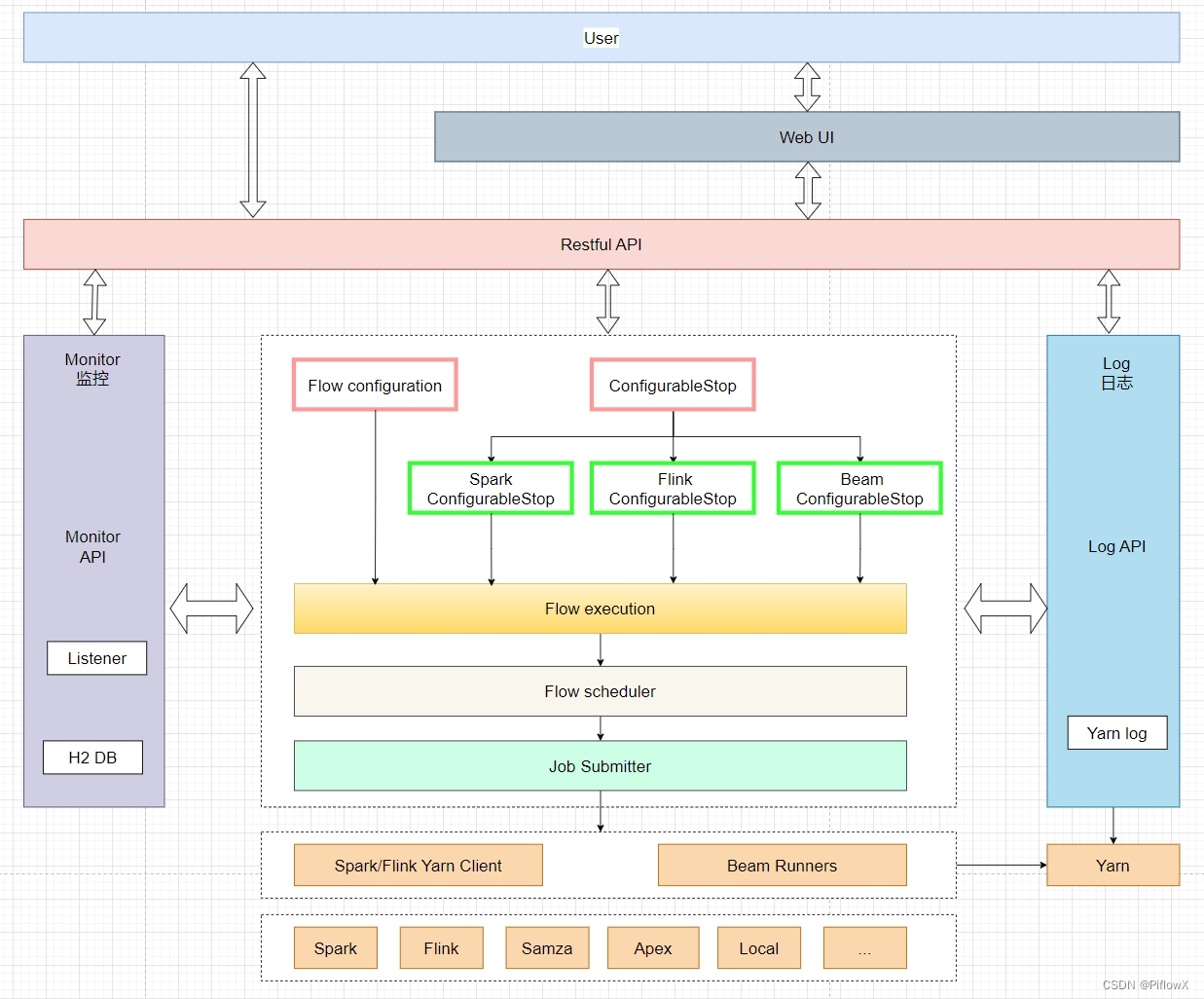

Apache Paimon (incubating) 是一项流式数据湖存储技术,可以为用户提供高吞吐、低延迟的数据摄入、流式订阅以及实时查询能力。

Paimon 采用开放的数据格式和技术理念,可以与 Apache Flink / Spark / Trino 等诸多业界主流计算引擎进行对接,共同推进 Streaming Lakehouse 架构的普及和发展。

Paimon 以湖存储的方式基于分布式文件系统管理元数据,并采用开放的 ORC、Parquet、Avro 文件格式,支持各大主流计算引擎,包括 Flink、Spark、Hive、Trino、Presto。未来会对接更多引擎,包括 Doris 和 Starrocks。

Github:https://github.com/apache/incubator-paimon

一、本地环境快速上手

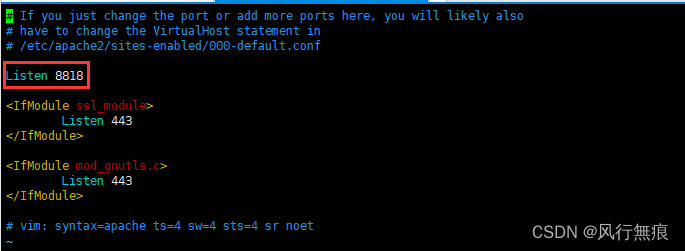

基于paimon 0.4-SNAPSHOT (Flink 1.14.4),Flink版本太低是不支持的,paimon基于最低版本1.14.6,经尝试在Flink1.14.0是不可以的!

paimon–flink-1.14-0.4-20230504.002229-50.jar

1、本地Flink伪集群

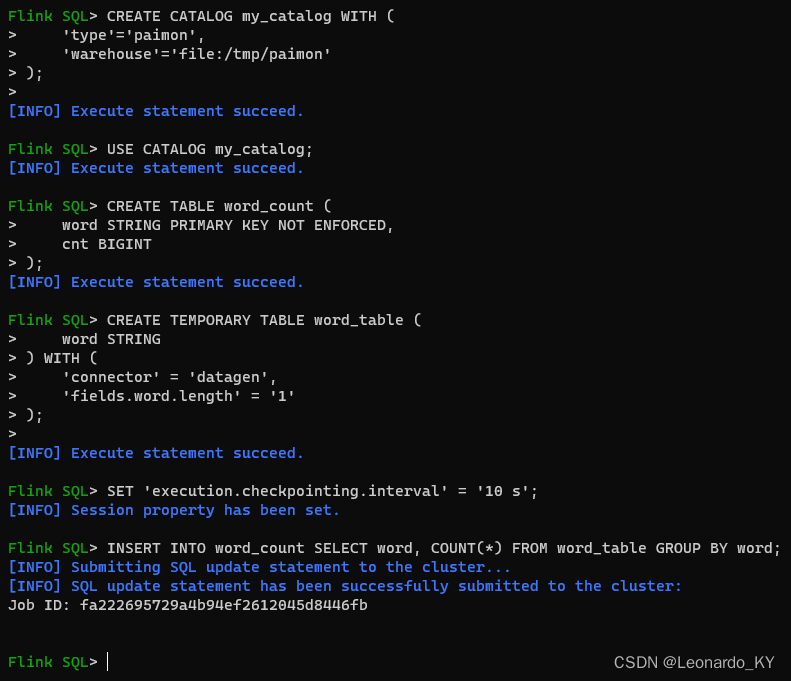

1. 根据官网demo,启动flinksql–client,创建catalog,创建表,创建数据源(视图),insert数据到表中。

2. 通过 localhost:8081 查看 Flink UI

2、IDEA中跑Paimon Demo

<dependency>

<groupId>org.apache.paimon</groupId>

<artifactId>paimon-flink-1.14</artifactId>

<version>0.4-SNAPSHOT</version>

</dependency>mvn install:install–file -DgroupId=org.apache.paimon -DartifactId=paimon-flink-1.14 -Dversion=0.4-SNAPSHOT -Dpackaging=jar -Dfile=D:softwarepaimon-flink-1.14-0.4-20230504.002229-50.jar

2.1 代码

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

/**

* @Author: YK.Leo

* @Date: 2023-05-14 15:12

* @Version: 1.0

*/

// Succeed at local !!!

public class OfficeDemoV1 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.enableCheckpointing(10000l);

env.getCheckpointConfig().setCheckpointStorage("file:/D:/tmp/paimon/");

TableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 0. Create a Catalog and a Table

tableEnv.executeSql("CREATE CATALOG my_catalog_api WITH (n" +

" 'type'='paimon',n" + // todo: !!!

" 'warehouse'='file:///D:/tmp/paimon'n" +

")");

tableEnv.executeSql("USE CATALOG my_catalog_api");

tableEnv.executeSql("CREATE TABLE IF NOT EXISTS word_count_api (n" +

" word STRING PRIMARY KEY NOT ENFORCED,n" +

" cnt BIGINTn" +

")");

// 1. Write Data

tableEnv.executeSql("CREATE TEMPORARY TABLE IF NOT EXISTS word_table_api (n" +

" word STRINGn" +

") WITH (n" +

" 'connector' = 'datagen',n" +

" 'fields.word.length' = '1'n" +

")");

// tableEnv.executeSql("SET 'execution.checkpointing.interval' = '10 s'");

tableEnv.executeSql("INSERT INTO word_count_api SELECT word, COUNT(*) FROM word_table_api GROUP BY word");

env.execute();

}

}2.2 IDEA中成功运行

3、IDEA中Stream读写

3.1 流写

代码:

package com.study.flink.table.paimon.demo;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.StatementSet;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

/**

* @Author: YK.Leo

* @Date: 2023-05-17 11:11

* @Version: 1.0

*/

// succeed at local !!!

public class OfficeStreamsWriteV2 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.enableCheckpointing(10000L);

env.getCheckpointConfig().setCheckpointStorage("file:/D:/tmp/paimon/");

TableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 0. Create a Catalog and a Table

tableEnv.executeSql("CREATE CATALOG my_catalog_local WITH (n" +

" 'type'='paimon',n" + // todo: !!!

" 'warehouse'='file:///D:/tmp/paimon'n" +

")");

tableEnv.executeSql("USE CATALOG my_catalog_local");

tableEnv.executeSql("CREATE DATABASE IF NOT EXISTS my_catalog_local.local_db");

tableEnv.executeSql("USE local_db");

// drop tbl

tableEnv.executeSql("DROP TABLE IF EXISTS paimon_tbl_streams");

tableEnv.executeSql("CREATE TABLE IF NOT EXISTS paimon_tbl_streams(n"

+ " uuid bigint,n"

+ " name VARCHAR(3),n"

+ " age int,n"

+ " ts TIMESTAMP(3),n"

+ " dt VARCHAR(10), n"

+ " PRIMARY KEY (dt, uuid) NOT ENFORCED n"

+ ") PARTITIONED BY (dt) n"

+ " WITH (n" +

" 'merge-engine' = 'partial-update',n" +

" 'changelog-producer' = 'full-compaction', n" +

" 'file.format' = 'orc', n" +

" 'scan.mode' = 'compacted-full', n" +

" 'bucket' = '5', n" +

" 'sink.parallelism' = '5', n" +

" 'sequence.field' = 'ts' n" + // todo, to check

")"

);

// datagen ====================================================================

tableEnv.executeSql("CREATE TEMPORARY TABLE IF NOT EXISTS source_A (n" +

" uuid bigint PRIMARY KEY NOT ENFORCED,n" +

" `name` VARCHAR(3)," +

" _ts1 TIMESTAMP(3)n" +

") WITH (n" +

" 'connector' = 'datagen', n" +

" 'fields.uuid.kind'='sequence',n" +

" 'fields.uuid.start'='0', n" +

" 'fields.uuid.end'='1000000', n" +

" 'rows-per-second' = '1' n" +

")");

tableEnv.executeSql("CREATE TEMPORARY TABLE IF NOT EXISTS source_B (n" +

" uuid bigint PRIMARY KEY NOT ENFORCED,n" +

" `age` int," +

" _ts2 TIMESTAMP(3)n" +

") WITH (n" +

" 'connector' = 'datagen', n" +

" 'fields.uuid.kind'='sequence',n" +

" 'fields.uuid.start'='0', n" +

" 'fields.uuid.end'='1000000', n" +

" 'rows-per-second' = '1' n" +

")");

//

//tableEnv.executeSql("insert into paimon_tbl_streams(uuid, name, _ts1) select uuid, concat(name,'_A') as name, _ts1 from source_A");

//tableEnv.executeSql("insert into paimon_tbl_streams(uuid, age, _ts1) select uuid, concat(age,'_B') as age, _ts1 from source_B");

StatementSet statementSet = tableEnv.createStatementSet();

statementSet

.addInsertSql("insert into paimon_tbl_streams(uuid, name, ts, dt) select uuid, name, _ts1 as ts, date_format(_ts1,'yyyy-MM-dd') as dt from source_A")

.addInsertSql("insert into paimon_tbl_streams(uuid, age, dt) select uuid, age, date_format(_ts2,'yyyy-MM-dd') as dt from source_B")

;

statementSet.execute();

// env.execute();

}

}结果:

如果只有一个流,上述代码完全没有问题【仅作为write demo一个流即可】,两个流会出现“写冲突”问题!

如下:

使用了官网的方法:Dedicated Compaction Job,似乎并没有奏效,至于解决方法请看下文 “二、进阶:本地(IDEA)多流拼接测试”;

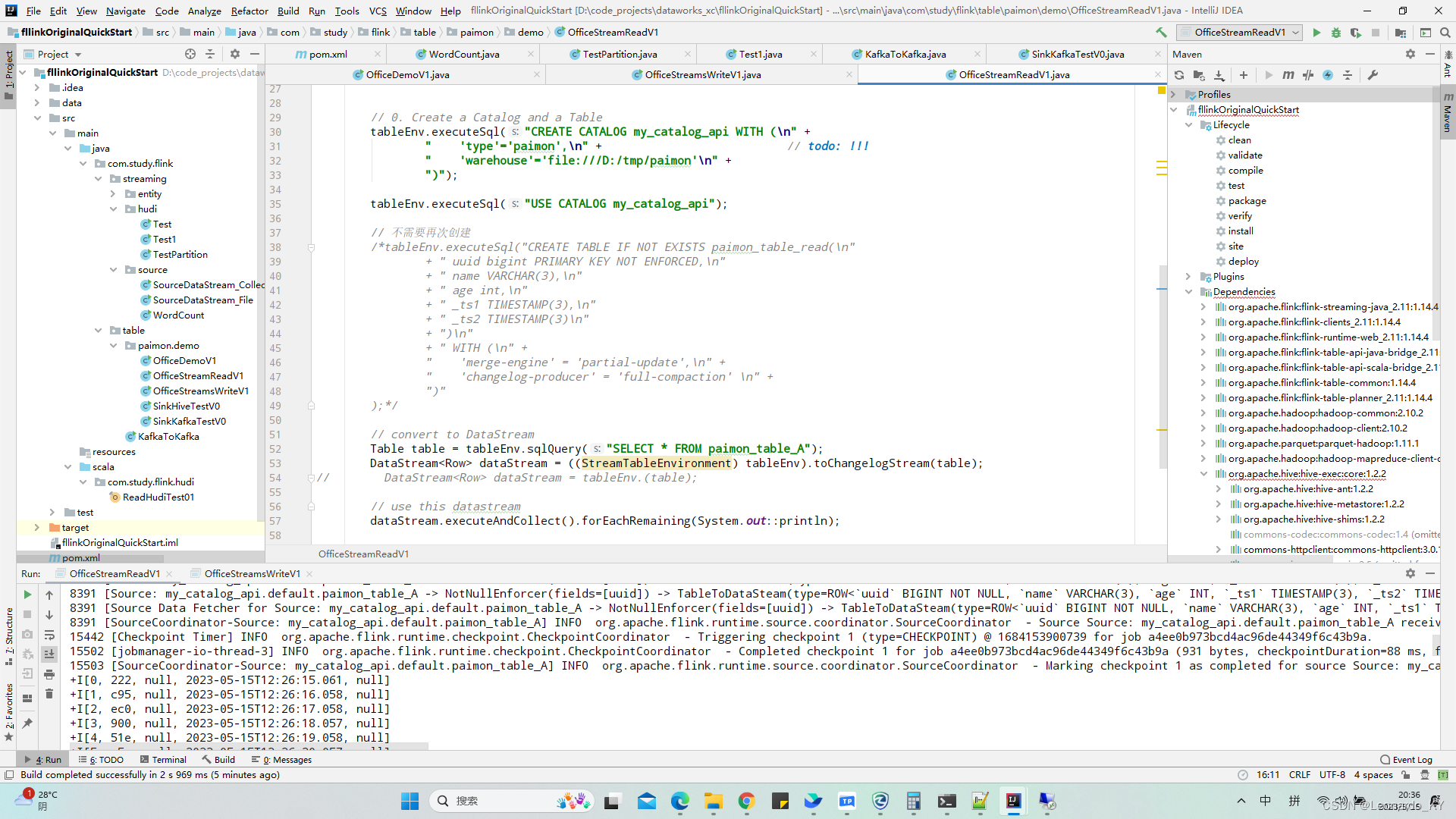

3.2 流读(toChangeLogStream)

代码:

package com.study.flink.table.paimon.demo;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.Schema;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.connector.ChangelogMode;

import org.apache.flink.types.Row;

import org.apache.flink.types.RowKind;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

/**

* @Author: YK.Leo

* @Date: 2023-05-15 18:50

* @Version: 1.0

*/

// 流读单表OK!

public class OfficeStreamReadV1 {

public static final Logger LOGGER = LogManager.getLogger(OfficeStreamReadV1.class);

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.enableCheckpointing(10000L);

env.getCheckpointConfig().setCheckpointStorage("file:/D:/tmp/paimon/");

TableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 0. Create a Catalog and a Table

tableEnv.executeSql("CREATE CATALOG my_catalog_local WITH (n" +

" 'type'='paimon',n" + // todo: !!!

" 'warehouse'='file:///D:/tmp/paimon'n" +

")");

tableEnv.executeSql("USE CATALOG my_catalog_local");

tableEnv.executeSql("CREATE DATABASE IF NOT EXISTS my_catalog_local.local_db");

tableEnv.executeSql("USE local_db");

// 不需要再次创建表

// convert to DataStream

// Table table = tableEnv.sqlQuery("SELECT * FROM paimon_tbl_streams");

Table table = tableEnv.sqlQuery("SELECT * FROM paimon_tbl_streams WHERE name is not null and age is not null");

// DataStream<Row> dataStream = ((StreamTableEnvironment) tableEnv).toChangelogStream(table);

// todo : doesn't support consuming update and delete changes which is produced by node TableSourceScan

// DataStream<Row> dataStream = ((StreamTableEnvironment) tableEnv).toDataStream(table);

// 剔除 -U 数据(即:更新前的数据不需要重新发送,剔除)!!!

DataStream<Row> dataStream = ((StreamTableEnvironment) tableEnv)

.toChangelogStream(table, Schema.newBuilder().primaryKey("dt","uuid").build(), ChangelogMode.upsert())

.filter(new FilterFunction<Row>() {

@Override

public boolean filter(Row row) throws Exception {

boolean isNoteUpdateBefore = !(row.getKind().equals(RowKind.UPDATE_BEFORE));

if (!isNoteUpdateBefore) {

LOGGER.info("UPDATE_BEFORE: " + row.toString());

}

return isNoteUpdateBefore;

}

})

;

// use this datastream

dataStream.executeAndCollect().forEachRemaining(System.out::println);

env.execute();

}

}结果:

二、进阶:本地(IDEA)多流拼接测试

要解决的问题:

多个流拥有相同的主键,每个流更新除主键外的部分字段,通过主键完成多流拼接。

note:

如果是两个Flink Job 或者 两个 pipeline 写同一个paimon表,则直接会产生conflict,其中一条流不断exception、重启;

可以使用 “UNION ALL” 将多个流合并为一个流,最终一个Flink job写paimon表;

使用主键表,‘merge–engine‘ = ‘partial-update‘ ;

1、’changelog-producer‘ = ‘full–compaction’

(1)multiWrite代码

package com.study.flink.table.paimon.multi;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.StatementSet;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

/**

* @Author: YK.Leo

* @Date: 2023-05-18 10:17

* @Version: 1.0

*/

// Succeed as local !!!

// 而且不会产生conflict,跑5分钟没有任何异常(公司跑几天无异常)! 数据也可以在另一个job流读!

public class MultiStreamsUnionWriteV1 {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.enableCheckpointing(10*1000L);

env.getCheckpointConfig().setCheckpointStorage("file:/D:/tmp/paimon/");

TableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 0. Create a Catalog and a Table

tableEnv.executeSql("CREATE CATALOG my_catalog_local WITH (n" +

" 'type'='paimon',n" + // todo: !!!

" 'warehouse'='file:///D:/tmp/paimon'n" +

")");

tableEnv.executeSql("USE CATALOG my_catalog_local");

tableEnv.executeSql("CREATE DATABASE IF NOT EXISTS my_catalog_local.local_db");

tableEnv.executeSql("USE local_db");

// drop & create tbl

tableEnv.executeSql("DROP TABLE IF EXISTS paimon_tbl_streams");

tableEnv.executeSql("CREATE TABLE IF NOT EXISTS paimon_tbl_streams(n"

+ " uuid bigint,n"

+ " name VARCHAR(3),n"

+ " age int,n"

+ " ts TIMESTAMP(3),n"

+ " dt VARCHAR(10), n"

+ " PRIMARY KEY (dt, uuid) NOT ENFORCED n"

+ ") PARTITIONED BY (dt) n"

+ " WITH (n" +

" 'merge-engine' = 'partial-update',n" +

" 'changelog-producer' = 'full-compaction', n" +

" 'file.format' = 'orc', n" +

" 'scan.mode' = 'compacted-full', n" +

" 'bucket' = '5', n" +

" 'sink.parallelism' = '5', n" +

// " 'write_only' = 'true', n" +

" 'sequence.field' = 'ts' n" + // todo, to check

")"

);

// datagen ====================================================================

tableEnv.executeSql("CREATE TEMPORARY TABLE IF NOT EXISTS source_A (n" +

" uuid bigint PRIMARY KEY NOT ENFORCED,n" +

" `name` VARCHAR(3)," +

" _ts1 TIMESTAMP(3)n" +

") WITH (n" +

" 'connector' = 'datagen', n" +

" 'fields.uuid.kind'='sequence',n" +

" 'fields.uuid.start'='0', n" +

" 'fields.uuid.end'='1000000', n" +

" 'rows-per-second' = '1' n" +

")");

tableEnv.executeSql("CREATE TEMPORARY TABLE IF NOT EXISTS source_B (n" +

" uuid bigint PRIMARY KEY NOT ENFORCED,n" +

" `age` int," +

" _ts2 TIMESTAMP(3)n" +

") WITH (n" +

" 'connector' = 'datagen', n" +

" 'fields.uuid.kind'='sequence',n" +

" 'fields.uuid.start'='0', n" +

" 'fields.uuid.end'='1000000', n" +

" 'rows-per-second' = '1' n" +

")");

//

StatementSet statementSet = tableEnv.createStatementSet();

String sqlText = "INSERT INTO paimon_tbl_streams(uuid, name, age, ts, dt) n" +

"select uuid, name, cast(null as int) as age, _ts1 as ts, date_format(_ts1,'yyyy-MM-dd') as dt from source_A n" +

"UNION ALL n" +

"select uuid, cast(null as string) as name, age, _ts2 as ts, date_format(_ts2,'yyyy-MM-dd') as dt from source_B"

;

statementSet.addInsertSql(sqlText);

statementSet.execute();

}

}读代码同上。

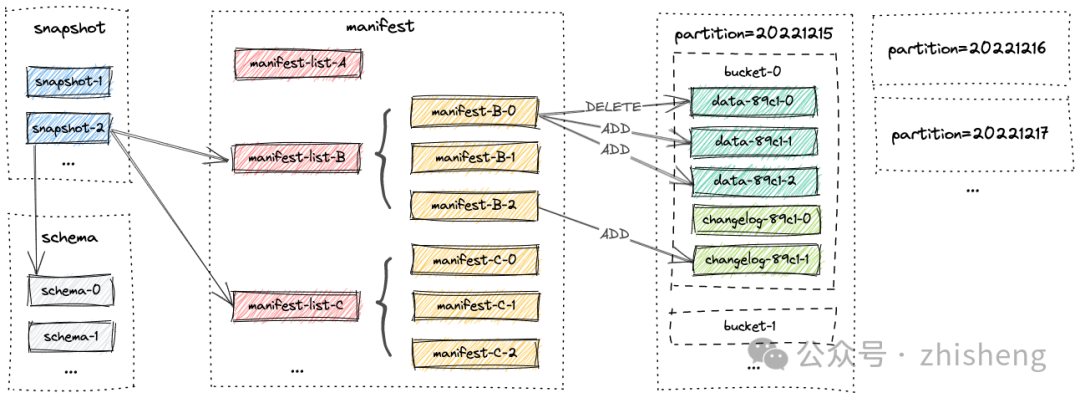

(2)读延迟

即:从client数据落到paimon,完成与server的join,再到被Flink-paimon流读到的时间延迟;

2、’changelog-producer’ = ‘lookup‘

读写同上,建表时修改参数即可: changelog-producer=’lookup‘,与此匹配的scan–mode需要分别配置为 ‘latest‘ ;

note:

经测试,在企业生产环境中full–compaction模式目前一切稳定(两条join的流QPS约3K左右,延迟2-3分钟)。

(multiWrite的checkpoint间隔为60s时)

三、可能遇到的问题

1. Caused by: java.lang.ClassCastException: org.codehaus.janino.CompilerFactory cannot be cast to org.codehaus.commons.compiler.ICompilerFactory

<exclude>org.codehaus.janino:*</exclude>

2. Caused by: java.lang.ClassNotFoundException: org.apache.flink.util.function.SerializableFunction

原因:Flink steaming版本与Flink table版本不一致 或 确实相关依赖 (这里是paimon依赖的flink版本最低为1.14.6,与1.14.0的flink不兼容)

参考Flink配置:Configuration | Apache Flink

3. Caused by: java.util.ServiceConfigurationError: org.apache.flink.table.factories.Factory: Provider org.apache.flink.table.store.connector.TableStoreManagedFactory not found

在项目的META-INF/services路径下添加 Factory 文件(这样才能匹配Flink的CatalogFactory,才能创建catalog)

4. Caused by: org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: No operators defined in streaming topology. Cannot execute.

已经存在tableEnv.executeSql 或者 statementSet.execute() 时就不需要再 env.execute() 了!

5. Flink SQL不能直接使用null as,需要写成 cast(null as data_type), 如 cast(null as string);

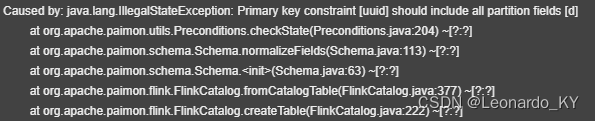

6. 如果创建paimon分区表,必须要把分区字段放在主键中!,否则建表报错:

四、展望

如果有数据格式:

主键 stream_client stream_server ts

1001 null a 1

1001 A null 2

1001 B null 3

按照paimon官方的实现,使用主键表的partial update进行多流拼接会被拼接为如下结果:

1001 B a 3;

即:主键会被去重(取每个流里边最新的一条),如果想要保留 stream_client 的全部数据,官方源码实现不了,需要进行改造!

我们已经改造并实现了非去重的效果,后续出一篇专门的文章阐述一下改造思路和方法。

想象:

stream_client为客户端数据,请求一次服务之后,可以上下滑动屏幕(或者进入后回退),使某个商品产生多次曝光(但不会多次请求server端);此时 client 端产生了多条数据,server端只有一条数据。但是,client端多次的曝光/点击是可以反应用户对某个商品的感兴趣程度的,是有意义的数据,不应该被去重掉!

【未完待续…】

原文地址:https://blog.csdn.net/LutherK/article/details/130775956

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。

如若转载,请注明出处:http://www.7code.cn/show_28214.html

如若内容造成侵权/违法违规/事实不符,请联系代码007邮箱:suwngjj01@126.com进行投诉反馈,一经查实,立即删除!