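Introduction

What is Apache ShardingSphere?

Apache ShardingSphere is a distributed database ecosystem. It can turn any database into a distributed database and enhance the original database with capabilities such as data sharding, elastic scaling, and encryption.

Background on database and table sharding

The traditional approach of storing all data on a single data node struggles to meet the massive-data scenarios of the Internet in three respects: performance, availability, and operating cost.

As business data grows, all of it still lives in one database, so network I/O and file I/O are concentrated on that single database. CPU, memory, file I/O, and network I/O can each become the system bottleneck.

Where traditional relational databases cannot meet the needs of Internet scenarios, more and more teams try storing data in NoSQL systems that natively support distribution. But NoSQL is not a cure-all.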

Usage

pom

<!-- sharding-jdbc -->

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>sharding-jdbc-spring-boot-starter</artifactId>

<version>4.1.1</version>

</dependency>

<!-- Data source governance, dynamic switching -->

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>sharding-jdbc-orchestration</artifactId>

<version>4.1.1</version>

</dependency>

<!-- Distributed transactions -->

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>sharding-transaction-xa-core</artifactId>

<version>4.1.1</version>

</dependency>

Configuration

1. The application.properties configuration file

# Horizontal table sharding strategy

# Configure the actual data sources

spring.shardingsphere.datasource.names=m1

# Configure the first data source

spring.shardingsphere.datasource.m1.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.m1.driver-class-name=com.mysql.cj.jdbc.Driver

spring.shardingsphere.datasource.m1.url=jdbc:mysql://localhost:3306/coursedb?serverTimezone=GMT%2B8

spring.shardingsphere.datasource.m1.username=root

spring.shardingsphere.datasource.m1.password=root

# Specify how the table is distributed: which database it lives in and what the tables are called. Horizontal sharding into two tables: m1.course_1, m1.course_2

spring.shardingsphere.sharding.tables.course.actual-data-nodes=m1.course_$->{1..2}

# Specify the primary key generation strategy for the table

spring.shardingsphere.sharding.tables.course.key-generator.column=cid

spring.shardingsphere.sharding.tables.course.key-generator.type=SNOWFLAKE

# An optional parameter of the snowflake algorithm

spring.shardingsphere.sharding.tables.course.key-generator.props.worker.id=1

# Use a custom primary key generation strategy

#spring.shardingsphere.sharding.tables.course.key-generator.type=MYKEY

#spring.shardingsphere.sharding.tables.course.key-generator.props.mykey-offset=88

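# Sharding strategy: rows with an even cid go to course_1, rows with an odd cid go to course_2.

# The column the computation is based on, i.e. the sharding key

spring.shardingsphere.sharding.tables.course.table-strategy.inline.sharding-column=cid

# Compute the target table name from that column. With course_$->{cid%2+1}, cid=2 goes to course_1 and cid=1 goes to course_2

spring.shardingsphere.sharding.tables.course.table-strategy.inline.algorithm-expression=course_$->{cid%2+1}

# Enable SQL log output.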

spring.shardingsphere.props.sql.show=true

spring.main.allow-bean-definition-overriding=true
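To make the inline expression concrete, the following is a minimal sketch that reproduces only the arithmetic of course_$->{cid%2+1} in plain Java. The class and method names are hypothetical and are not part of ShardingSphere; the real routing is done by the library's inline expression engine.

```java
/** Illustration only: mirrors the arithmetic of course_$->{cid % 2 + 1}. */
final class InlineRoutingSketch {

    /** Returns the physical table a given cid maps to under cid % 2 + 1. */
    static String routeCourseTable(long cid) {
        // Assumes a non-negative cid (snowflake keys are positive),
        // so cid % 2 is either 0 or 1 and the suffix is 1 or 2.
        long suffix = cid % 2 + 1;
        return "course_" + suffix;
    }

    public static void main(String[] args) {
        for (long cid : new long[] {1L, 2L, 7L, 10L}) {
            System.out.println(cid + " -> " + routeCourseTable(cid));
        }
    }
}
```

Even keys land in course_1 and odd keys in course_2, which matches the convention stated in the configuration comments.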

2. Create the configuration class

package com.example.demo.config;

import java.sql.SQLException;

import java.util.HashMap;

import java.util.Map;

import java.util.Properties;

import javax.sql.DataSource;

import org.apache.ibatis.session.SqlSessionFactory;

import org.apache.shardingsphere.api.config.sharding.ShardingRuleConfiguration;

import org.apache.shardingsphere.api.config.sharding.TableRuleConfiguration;

import org.apache.shardingsphere.api.config.sharding.strategy.ComplexShardingStrategyConfiguration;

import org.apache.shardingsphere.shardingjdbc.api.ShardingDataSourceFactory;

import org.apache.shardingsphere.underlying.common.config.properties.ConfigurationPropertyKey;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.transaction.SpringManagedTransactionFactory;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.env.Environment;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import org.springframework.transaction.annotation.EnableTransactionManagement;

import com.alibaba.druid.pool.DruidDataSource;

import com.baomidou.mybatisplus.extension.MybatisMapWrapperFactory;

import com.baomidou.mybatisplus.extension.spring.MybatisSqlSessionFactoryBean;

/**

* @author admin

* @version 1.0

* @since 2022/12/13 20:30

*/

@Configuration

@EnableTransactionManagement

public class MyDbConfig {

/**

* Primary data source, injected by default

*/

@Bean(name = "dataSource")

@Primary

public DataSource druidDataSource(Environment environment) {

return createDruidDataSource(environment);

}

/**

* Sharding data source that wraps the primary data source

*/

@Bean(name = "dataSourceSharding")

public DataSource getShardingDataSource(@Qualifier("dataSource") DataSource dataSource, Environment environment) throws SQLException {

ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();

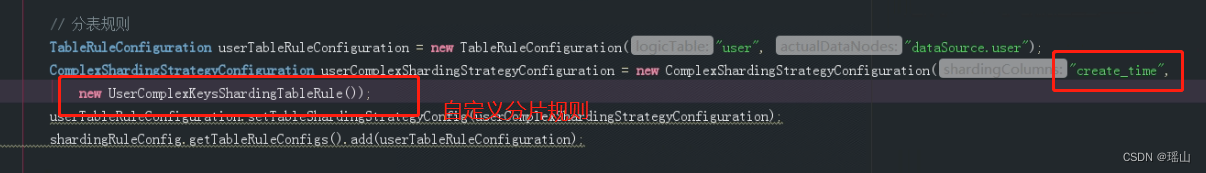

// Table sharding rule

TableRuleConfiguration userTableRuleConfiguration = new TableRuleConfiguration("user", "dataSource.user");

ComplexShardingStrategyConfiguration userComplexShardingStrategyConfiguration = new ComplexShardingStrategyConfiguration("create_time",

new UserComplexKeysShardingTableRule());

userTableRuleConfiguration.setTableShardingStrategyConfig(userComplexShardingStrategyConfiguration);

shardingRuleConfig.getTableRuleConfigs().add(userTableRuleConfiguration);

// Data sources

Map<String, DataSource> result = new HashMap<>(1);

result.put("dataSource", dataSource);

Properties properties = new Properties();

properties.put(ConfigurationPropertyKey.MAX_CONNECTIONS_SIZE_PER_QUERY.getKey(), 5);

return ShardingDataSourceFactory.createDataSource(result, shardingRuleConfig, properties);

}

@Bean("mybatisMapWrapperFactory")

public MybatisMapWrapperFactory createMybatisMapWrapperFactory() {

return new MybatisMapWrapperFactory();

}

@Bean(name = "sqlSessionFactory")

public MybatisSqlSessionFactoryBean createMybatisSqlSessionFactoryBean(@Qualifier("dataSourceSharding") DataSource dataSource,

MybatisMapWrapperFactory mybatisMapWrapperFactory) throws Exception {

MybatisSqlSessionFactoryBean bean = new MybatisSqlSessionFactoryBean();

bean.setDataSource(dataSource);

bean.setTransactionFactory(new SpringManagedTransactionFactory());

// Scan mapper XML files under the given directory

bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:mapper/**/*Mapper.xml"));

Properties prop = new Properties();

// Map underscore column names to camelCase properties

prop.setProperty("mapUnderscoreToCamelCase", "true");

// Allow use of auto-generated primary keys

prop.setProperty("useGeneratedKeys", "true");

prop.setProperty("logPrefix", "dao.");

bean.setConfigurationProperties(prop);

// Package to scan for type aliases

bean.setTypeAliasesPackage("com.example.demo.dao");

bean.setObjectWrapperFactory(mybatisMapWrapperFactory);

return bean;

}

@Bean(name = "sqlSessionTemplate")

public SqlSessionTemplate sqlSessionTemplate(@Qualifier("sqlSessionFactory") SqlSessionFactory sqlSessionFactory) throws Exception {

return new SqlSessionTemplate(sqlSessionFactory);

}

@Bean("transactionManager")

public DataSourceTransactionManager createTransactionManager(@Qualifier("dataSourceSharding") DataSource dynamicDataSource) {

return new DataSourceTransactionManager(dynamicDataSource);

}

/**

* Creates the data source

* @param env env

* @return DruidDataSource

*/

private static DruidDataSource createDruidDataSource(Environment env) {

DruidDataSource dataSource = new DruidDataSource();

dataSource.setUrl(env.getProperty("spring.datasource.druid.url"));

dataSource.setUsername(env.getProperty("spring.datasource.druid.username"));

dataSource.setPassword(env.getProperty("spring.datasource.druid.password"));

dataSource.setInitialSize(env.getProperty("spring.datasource.druid.initial-size", Integer.class));

dataSource.setMaxActive(env.getProperty("spring.datasource.druid.max-active", Integer.class));

dataSource.setMinIdle(env.getProperty("spring.datasource.druid.min-idle", Integer.class));

dataSource.setMaxWait(env.getProperty("spring.datasource.druid.maxWait", Integer.class));

dataSource.setValidationQuery(env.getProperty("spring.datasource.druid.validationQuery"));

return dataSource;

}

}

spring.datasource.druid.initial-size = 10

spring.datasource.druid.min-idle = 10

spring.datasource.druid.max-active = 20

spring.datasource.druid.maxWait = 6000

spring.datasourceslave.druid.initial-size = 10

spring.datasourceslave.druid.min-idle = 10

spring.datasourceslave.druid.max-active = 20

spring.datasourceslave.druid.maxWait = 6000

spring.datasource.druid.validationQuery = SELECT 1 FROM DUAL

spring.datasource.druid.url = jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=utf8&characterSetResults=utf8&serverTimezone=GMT%2B8&useSSL=false

spring.datasource.druid.username = root

spring.datasource.druid.password = 123

Table sharding

UserComplexKeysShardingTableRule

package com.example.demo.config;

import java.text.SimpleDateFormat;

import java.util.*;

import org.apache.shardingsphere.api.sharding.complex.ComplexKeysShardingAlgorithm;

import org.apache.shardingsphere.api.sharding.complex.ComplexKeysShardingValue;

import org.springframework.util.CollectionUtils;

import com.google.common.collect.Range;

/**

* Multi-column sharding rule definition

* @author admin

* @version 1.0

* @since 2023/01/05 15:15

*/

public class UserComplexKeysShardingTableRule implements ComplexKeysShardingAlgorithm<String> {

private static final String TABLE_COLUMN_TIME = "create_time";

private static final String TABLE_PREFIX = "user_";

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames, ComplexKeysShardingValue<String> shardingValue) {

return getShardingValue(shardingValue);

}

/**

* Resolves the target tables from the sharding key values

*

* @param shardingValue shardingValue

* @return Collection<String>

*/

private Collection<String> getShardingValue(ComplexKeysShardingValue<String> shardingValue) {

Collection<String> times = new HashSet<>();

Map<String, Collection<String>> columnNameAndShardingValuesMap = shardingValue.getColumnNameAndShardingValuesMap();

Map<String, Range<String>> columnNameAndRangeValuesMap = shardingValue.getColumnNameAndRangeValuesMap();

if (columnNameAndShardingValuesMap.containsKey(TABLE_COLUMN_TIME)) {

Collection<String> collection = columnNameAndShardingValuesMap.get(TABLE_COLUMN_TIME);

Object next = collection.iterator().next();

times.add(next.toString().substring(2, 4));

}

if (columnNameAndRangeValuesMap.containsKey(TABLE_COLUMN_TIME)) {

Range<String> range = columnNameAndRangeValuesMap.get(TABLE_COLUMN_TIME);

Collection<String> values = getTimeList(range);

if (values.isEmpty()) {

throw new UnsupportedOperationException("Sharding key must not be empty");

} else {

times.addAll(values);

}

}

if (CollectionUtils.isEmpty(times)) {

throw new UnsupportedOperationException("Sharding key must not be empty");

}

Set<String> tableNames = new HashSet<>();

for (String billTime : times) {

tableNames.add(getTableName(billTime));

}

return tableNames;

}

/**

* Collects the two-digit years covered by a range

*

* @param valueRange valueRange

* @return Collection<String>

*/

private Collection<String> getTimeList(Range<String> valueRange) {

Set<String> prefixes = new HashSet<>();

if (valueRange.isEmpty()) {

throw new UnsupportedOperationException("Sharding key must not be empty");

} else {

int start = Integer.parseInt(valueRange.lowerEndpoint().substring(2, 4));

int end = Integer.parseInt(valueRange.upperEndpoint().substring(2, 4));

int max = Integer.parseInt(dateToString(new Date(), "yy"));

// Only data from 2018 up to the current year can be queried

start = Math.max(start, 18);

start = Math.min(start, max);

end = Math.max(end, 18);

end = Math.min(end, max);

while (start <= end) {

prefixes.add(String.valueOf(start));

start++;

}

return prefixes;

}

}

/**

* Formats a date with the given pattern

* @param date date

* @param format format

* @return String

*/

private String dateToString(Date date, String format) {

SimpleDateFormat formater = new SimpleDateFormat(format);

return formater.format(date);

}

/**

* Builds the table name from the two-digit year

*

* @param simpleYear the third and fourth digits of the year

* @return String

*/

private static String getTableName(String simpleYear) {

if (simpleYear.length() != 2) {

throw new UnsupportedOperationException("Invalid two-digit year suffix: " + simpleYear);

}

System.out.println("getTableName=" + TABLE_PREFIX + simpleYear);

return TABLE_PREFIX + simpleYear;

}

}

shardingValue.getColumnNameAndShardingValuesMap(); // sharding key matched with = or IN

shardingValue.getColumnNameAndRangeValuesMap(); // sharding key matched with a range query such as BETWEEN
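The two lookups above lead to two routing paths: an exact value yields a single table, while a range yields one table per year it covers. Here is a minimal, dependency-free sketch of that logic. The class name, method names, and the explicit minYear/maxYear parameters are hypothetical; the rule class in this article reads the upper bound from the current date instead. It also assumes the value's text form starts with a four-digit year, as in "2023-01-05 15:15:00.0".

```java
import java.util.Set;
import java.util.TreeSet;

/** Illustration only: year-suffix routing without any ShardingSphere types. */
final class YearSuffixSketch {

    static final String TABLE_PREFIX = "user_";

    /** Exact match: characters 2..3 of the timestamp text are the two-digit year. */
    static String tableForValue(String timestampText) {
        return TABLE_PREFIX + timestampText.substring(2, 4);
    }

    /** Range match: one table per year between the endpoints, clamped to [minYear, maxYear]. */
    static Set<String> tablesForRange(String lower, String upper, int minYear, int maxYear) {
        int start = clamp(Integer.parseInt(lower.substring(2, 4)), minYear, maxYear);
        int end = clamp(Integer.parseInt(upper.substring(2, 4)), minYear, maxYear);
        Set<String> tables = new TreeSet<>();
        for (int year = start; year <= end; year++) {
            // %02d keeps the suffix at two digits even for years below 10.
            tables.add(String.format("%s%02d", TABLE_PREFIX, year));
        }
        return tables;
    }

    private static int clamp(int value, int min, int max) {
        return Math.min(Math.max(value, min), max);
    }
}
```

Clamping means a range that starts before the earliest table is silently narrowed rather than rejected, which is the same trade-off the rule class makes.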

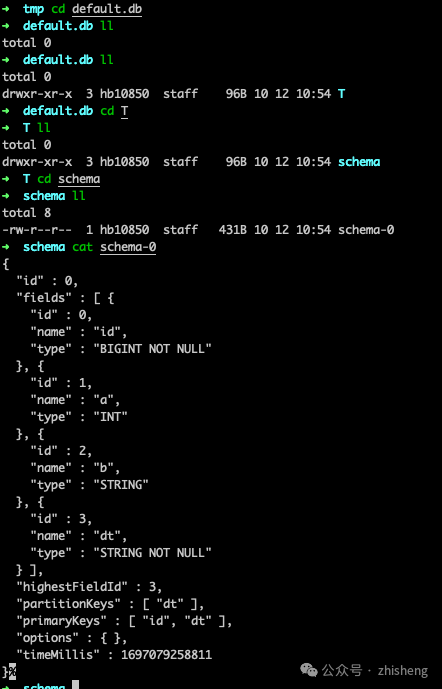

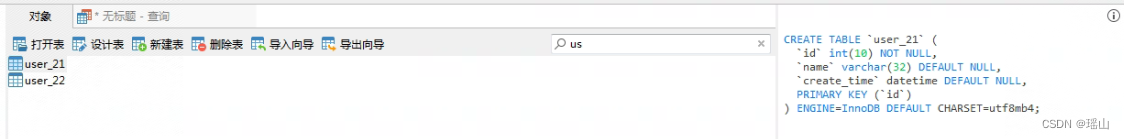

Verifying the sharding

The table looks like this:

CREATE TABLE `user_21` (

`id` int(10) NOT NULL,

`name` varchar(32) DEFAULT NULL,

`create_time` datetime DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

The entity class and service are omitted here; below is the query method in TestController.

@RequestMapping("select")

public String select() {

LambdaQueryWrapper<User> queryWrapper = Wrappers.lambdaQuery();

queryWrapper.eq(User::getCreateTime, new Date());

userService.list(queryWrapper);

return "ok";

}

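The current year is 2023, so the query fails at first because the corresponding table has not been created yet. Once user_23 is created, it runs normally.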
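Since every new year needs its own table, one option is to generate the DDL from an existing yearly table. The sketch below only builds the MySQL statement text; the class and method names are hypothetical, and it assumes an existing table such as user_21 can serve as the template. It is probably best executed against the underlying MySQL data source rather than the sharding data source, though that is an assumption and has not been verified here.

```java
import java.time.Year;

/** Illustration only: builds the DDL for a missing yearly table. */
final class YearlyTableDdlSketch {

    /** Returns e.g. CREATE TABLE IF NOT EXISTS `user_23` LIKE `user_21` for fullYear = 2023. */
    static String createLikeStatement(String templateTable, int fullYear) {
        // Keep only the last two digits of the year, zero-padded.
        String suffix = String.format("%02d", fullYear % 100);
        return "CREATE TABLE IF NOT EXISTS `user_" + suffix + "` LIKE `" + templateTable + "`";
    }

    public static void main(String[] args) {
        // Prints the statement for the current year.
        System.out.println(createLikeStatement("user_21", Year.now().getValue()));
    }
}
```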

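Common issues

Custom sharding rule does not take effect

Solution: remove the formatting functions applied to the field in SQL, and prepare the incoming parameters in the service layer instead.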
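As a rough illustration of preparing the parameters in the service layer, the sketch below turns a bill date into the start and end of that day as plain strings, which can then be passed to the mapper as payTimeStart and payTimeEnd. The class and method names are hypothetical, and it assumes billDate arrives in yyyy-MM-dd form.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

/** Illustration only: computes day bounds in Java instead of in SQL. */
final class BillDateRangeSketch {

    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** "2023-01-05" -> "2023-01-05 00:00:00" */
    static String dayStart(String billDate) {
        return LocalDate.parse(billDate).atStartOfDay().format(FORMAT);
    }

    /** "2023-01-05" -> "2023-01-05 23:59:59" */
    static String dayEnd(String billDate) {
        return LocalDate.parse(billDate).atTime(23, 59, 59).format(FORMAT);
    }
}
```

Because the bounds reach the SQL as ordinary bind parameters, the sharding column is compared against plain values and no function call stands between them.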

The following conditions, which format the value or the column inside SQL, did not trigger the sharding rule:

and pay_time between DATE_FORMAT(#{param.billDate,jdbcType=VARCHAR}, '%Y-%m-%d 00:00:00')

AND DATE_FORMAT(#{param.billDate,jdbcType=VARCHAR}, '%Y-%m-%d 23:59:59')

and pay_time between CONCAT(#{param.billDate,jdbcType=VARCHAR}, ' 00:00:00') AND

CONCAT(#{param.billDate,jdbcType=VARCHAR}, ' 23:59:59')

AND DATE_FORMAT(pay_time, '%Y-%m-%d') = #{param.billDate,jdbcType=VARCHAR}

With the bounds passed in as plain parameters, the rule applies:

and pay_time between #{param.payTimeStart,jdbcType=VARCHAR} AND #{param.payTimeEnd,jdbcType=VARCHAR}

The sum function returns no value

sum itself works, but it cannot be wrapped in ifnull.

ifnull(sum(money), 0)

Change it to:

sum(ifnull(money, 0))

Original article: https://blog.csdn.net/qq_44695727/article/details/128185741
