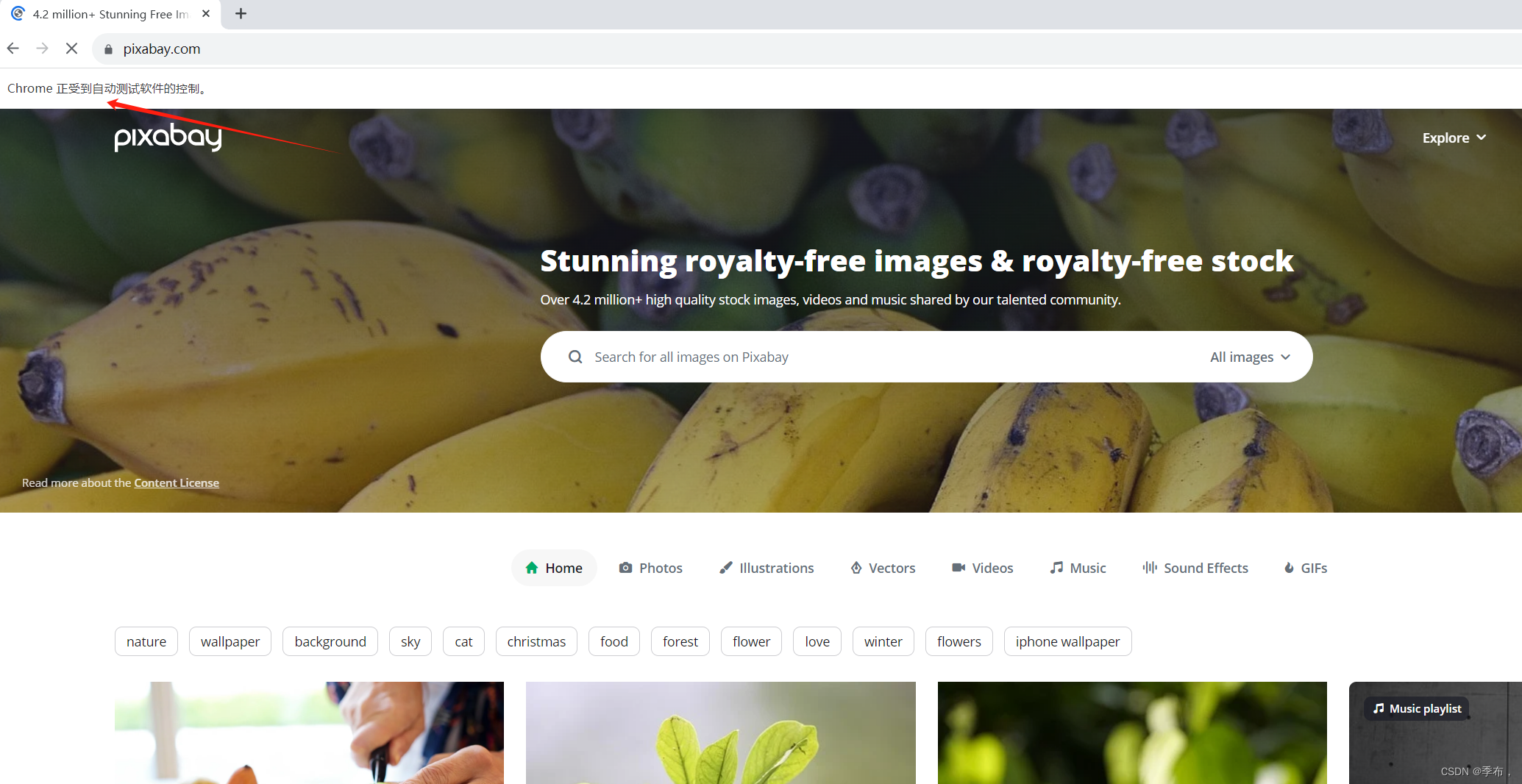

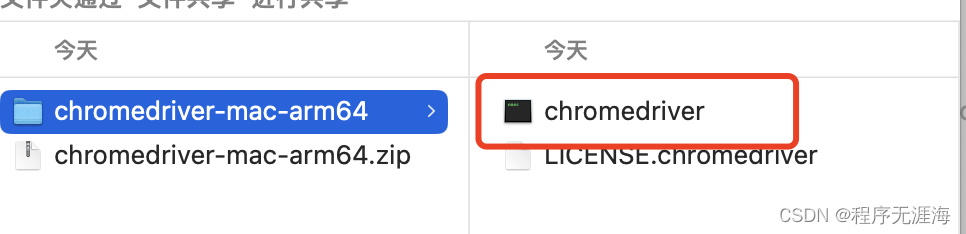

实现访问首页

from os.path import dirname

from selenium import webdriver

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self):

"""初始化"""

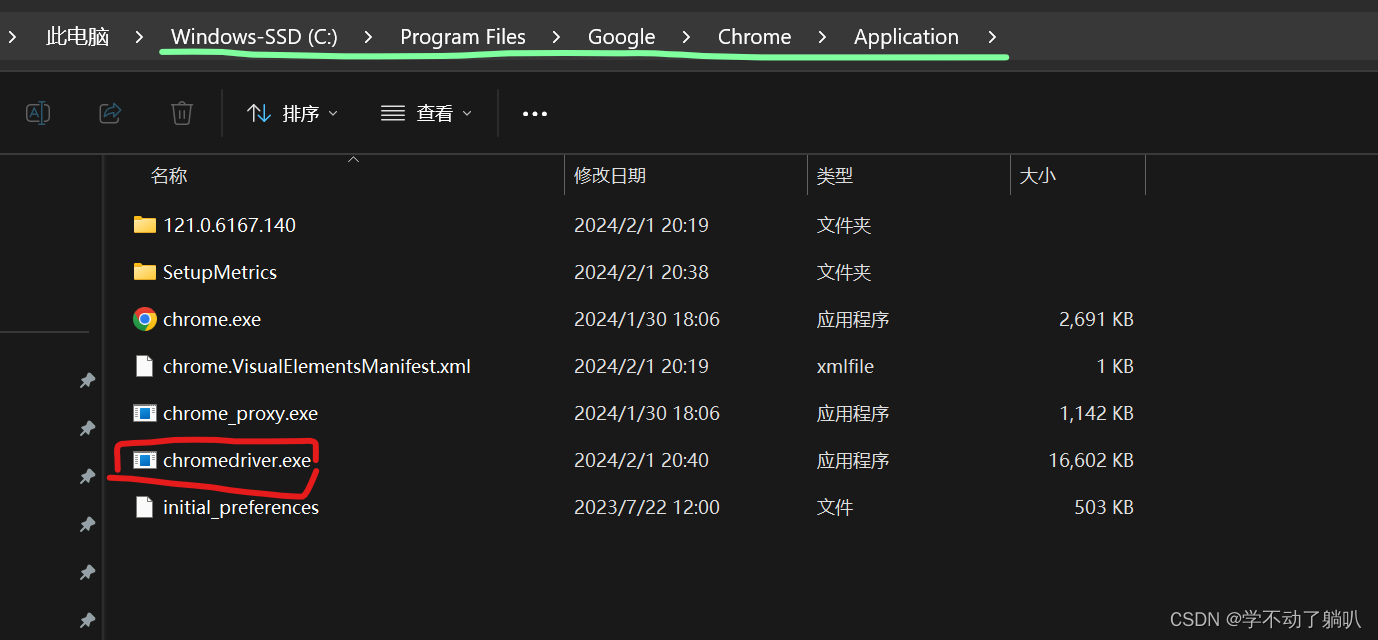

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

print("=======结束=======")

if __name__ == '__main__':

ImageAutoSearchAndSave().run()

实现图片自动欧索

from os.path import dirname

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword):

"""初始化"""

self.keyword = keyword

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self.driver.find_element_by_css_selector("input[name]")

elem.send_keys(self.keyword + Keys.ENTER)

if __name__ == '__main__':

keyword = "cat"

ImageAutoSearchAndSave(keyword).run()

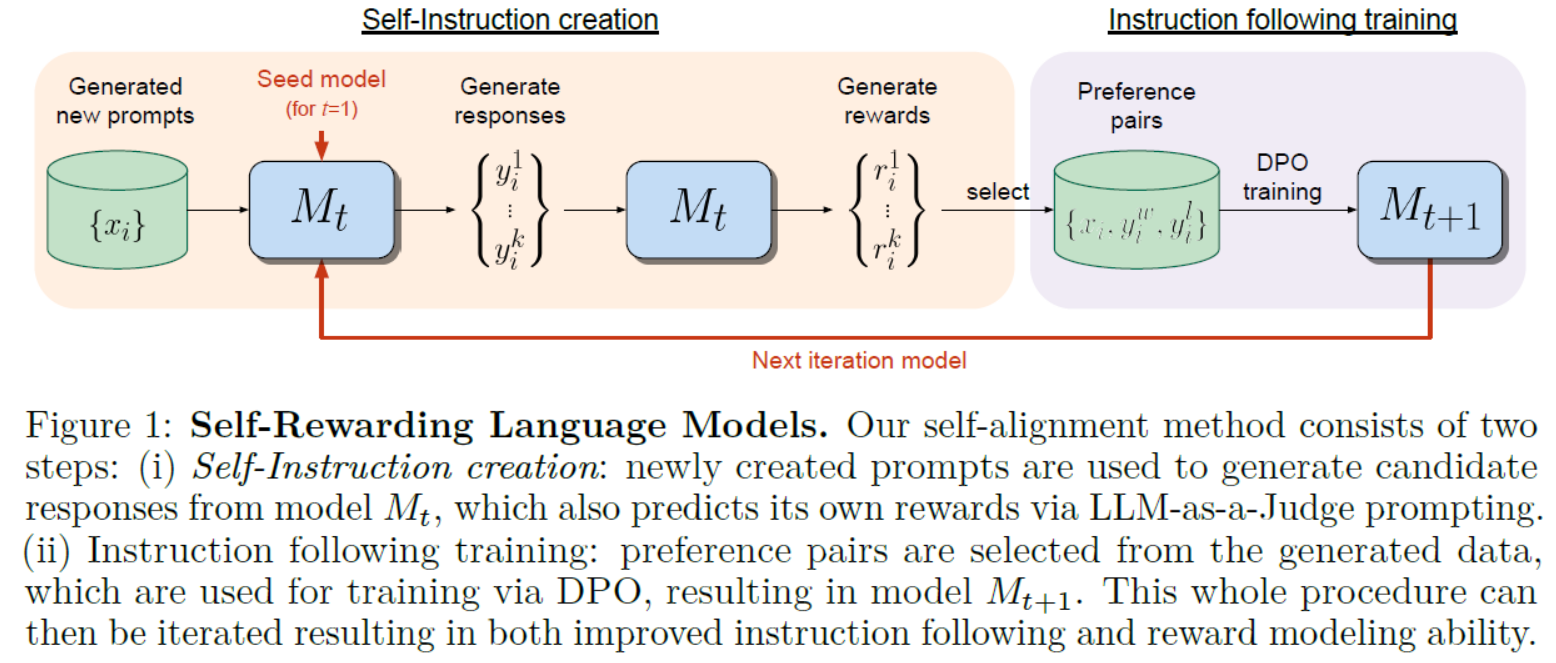

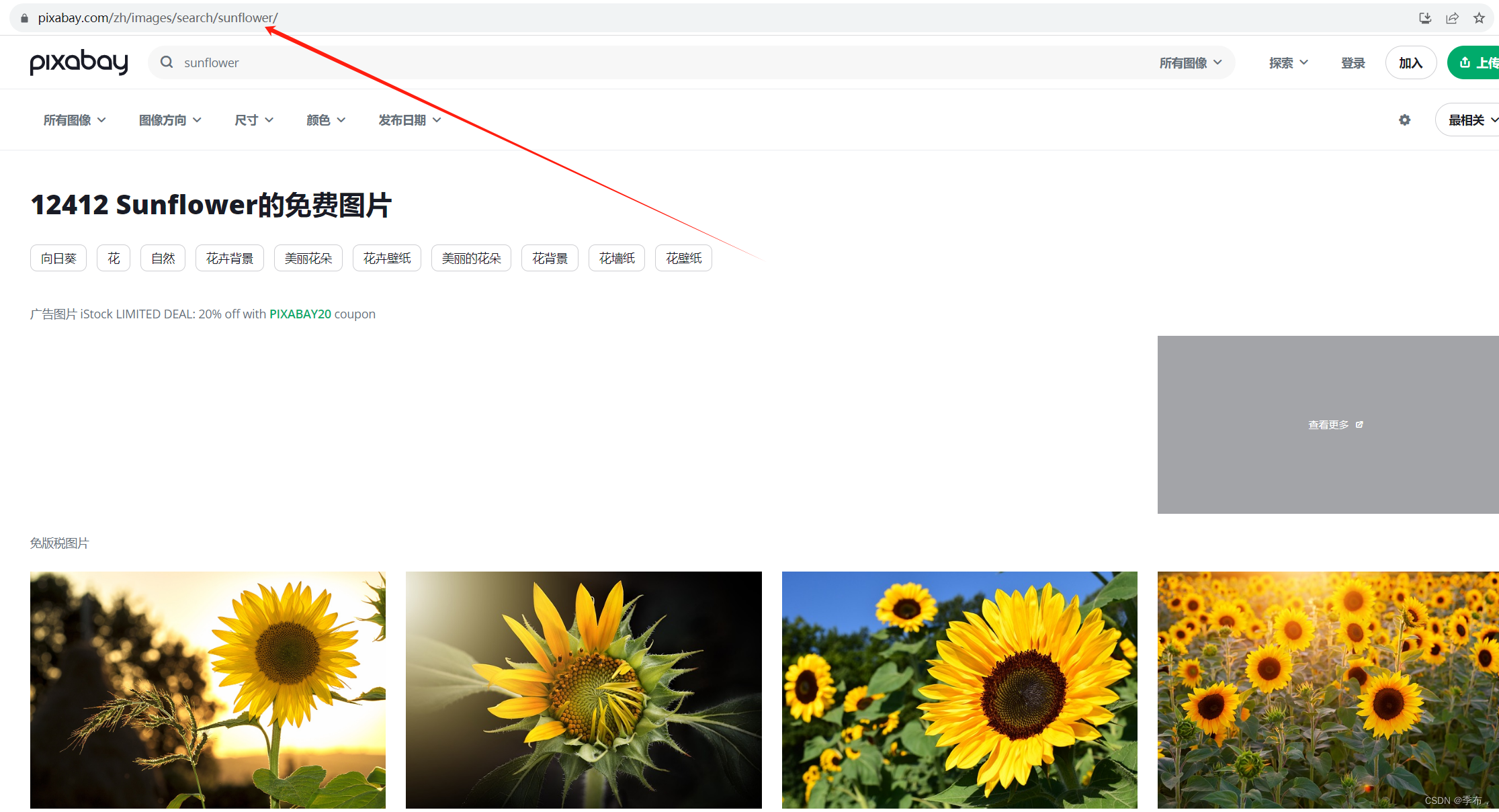

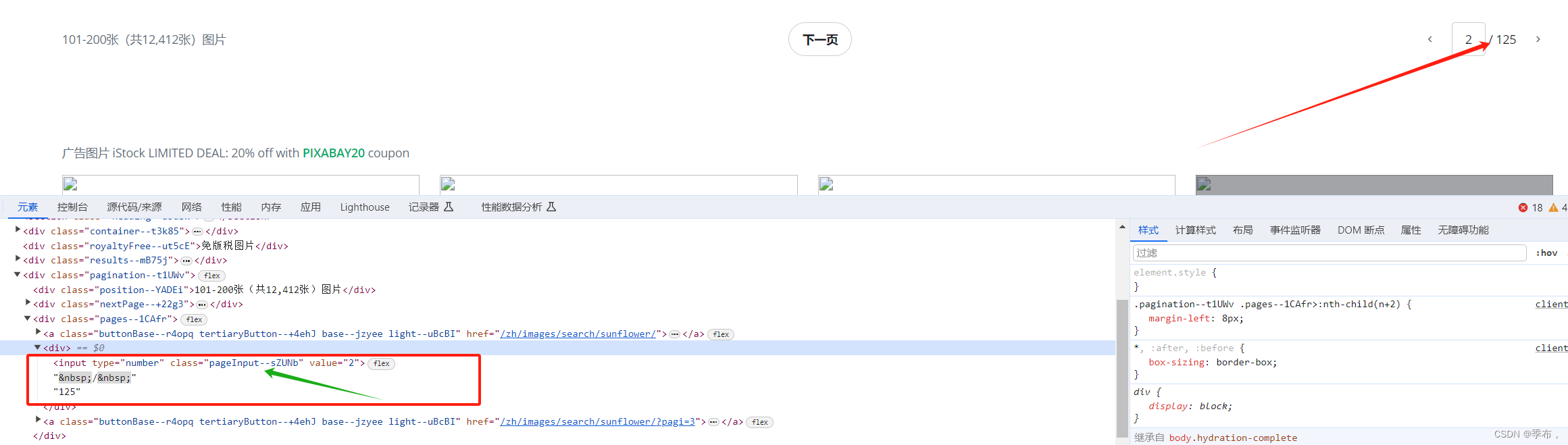

遍历所有图片列表页面

页面分析

第一页

第二页

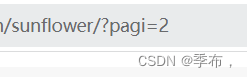

由此可得出变化的只有这里,根据pagi= 展示不同页面

红色箭头定位到页数,绿色的不要使用 是反爬虫的限制,不断变化的

from os.path import dirname

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword):

"""初始化"""

self.keyword = keyword

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

# 遍历所有页面

self._iter_all_page()

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self.driver.find_element_by_css_selector("input[name]")

elem.send_keys(self.keyword + Keys.ENTER)

def _iter_all_page(self):

'''遍历所有页面'''

# 获取总页面

elem = self.driver.find_element_by_css_selector("input[class^=pageInput]")

page_total = int(elem.text.strip("/ "))

# 遍历所有页面

base_url = self.driver.current_url

for page_num in range(1,page_total+1):

if page_num>1:

self.driver.get(f"{base_url}?pagi={page_num}&")

if __name__ == '__main__':

keyword = "sunflower"

ImageAutoSearchAndSave(keyword).run()

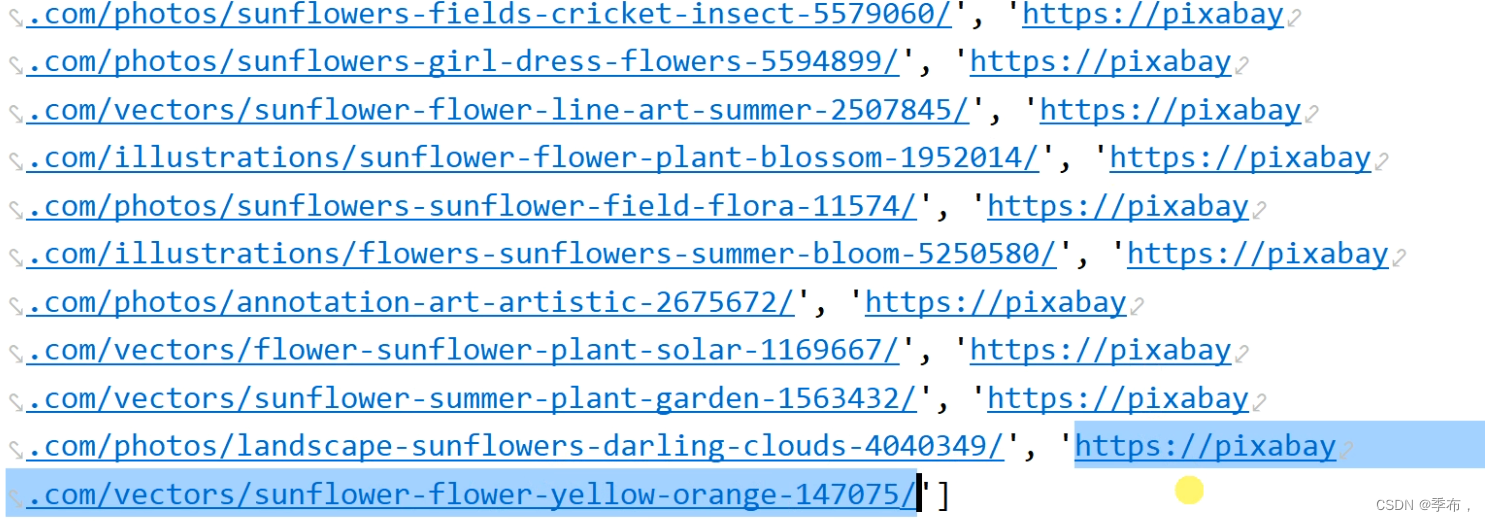

获取所有图片详情页链接

from os.path import dirname

from lxml.etree import HTML

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword):

"""初始化"""

self.keyword = keyword

self.all_detail_link = []

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

# 遍历所有页面

self._iter_all_page()

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self.driver.find_element_by_css_selector("input[name]")

elem.send_keys(self.keyword + Keys.ENTER)

def _iter_all_page(self):

'''遍历所有页面'''

# 获取总页面

elem = self.driver.find_element_by_css_selector("input[class^=pageInput]")

page_total = int(elem.text.strip("/ "))

# 遍历所有页面

base_url = self.driver.current_url

for page_num in range(1, page_total + 1):

if page_num > 1:

self.driver.get(f"{base_url}?pagi={page_num}&")

# href 属性

root = HTML(self.driver.page_source)

# a标签中的href属性

detail_links = root.xpath("//div[start-with(@class,'result')]//a[start-with(@class,'link')]/@href")

for detail_link in detail_links:

self.all_detail_link.append(detail_link)

if __name__ == '__main__':

keyword = "sunflower"

ImageAutoSearchAndSave(keyword).run()

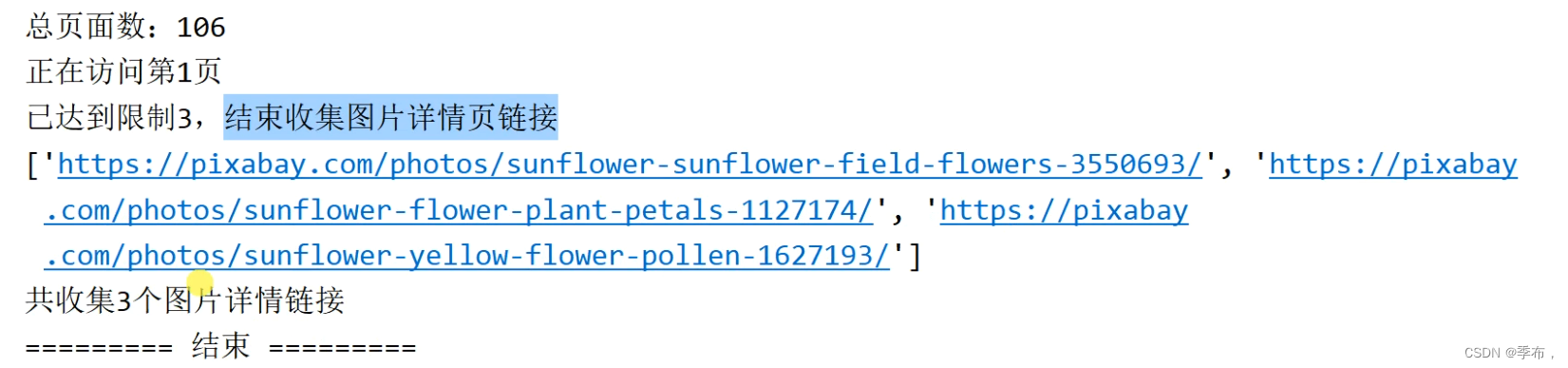

增加下载数量的限制

from os.path import dirname

from lxml.etree import HTML

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword,limit=0):

"""初始化"""

self.keyword = keyword

self.all_detail_link = []

self.limit=limit #0表示没有限制

self.count = 0 #用来计数

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

# 遍历所有页面

self._iter_all_page()

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self.driver.find_element_by_css_selector("input[name]")

elem.send_keys(self.keyword + Keys.ENTER)

def _iter_all_page(self):

'''遍历所有页面'''

# 获取总页面

elem = self.driver.find_element_by_css_selector("input[class^=pageInput]")

# page_total = int(elem.text.strip("/ "))

page_total = 5

# 遍历所有页面

base_url = self.driver.current_url

for page_num in range(1, page_total + 1):

if page_num > 1:

self.driver.get(f"{base_url}?pagi={page_num}&")

# href 属性

root = HTML(self.driver.page_source)

is_reach_limit = False

# 获取每一页详情链接 a标签中的href属性

detail_links = root.xpath("//div[start-with(@class,'result')]//a[start-with(@class,'link')]/@href")

for detail_link in detail_links:

self.all_detail_link.append(detail_link)

self.count += 1

if self.limit >0 and self.count == self.limit:

is_reach_limit = True

break

if is_reach_limit:

break

if __name__ == '__main__':

keyword = "sunflower"

limit = 3

ImageAutoSearchAndSave(keyword,limit).run()

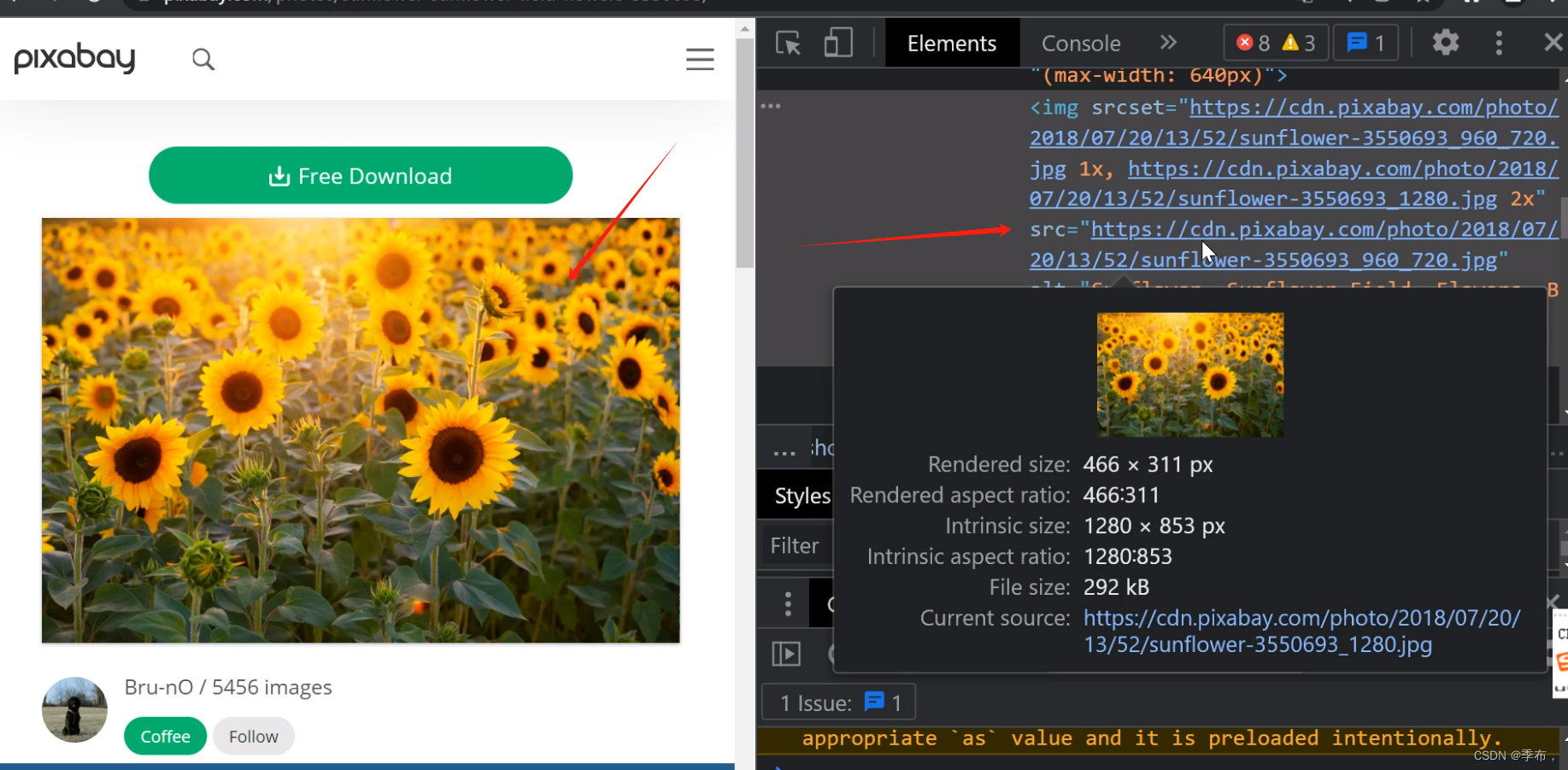

获取所有图片详情、获取图片下载链接

from os.path import dirname

from lxml.etree import HTML

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword, limit=0):

"""初始化"""

self.keyword = keyword

self.all_detail_link = []

self.limit = limit # 0表示没有限制

self.count = 0 # 用来计数

self.all_download_link = [] # 图片详情的下载链接

self.driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self.driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

# 遍历所有页面

self._iter_all_page()

# 访问图片详情页

self._visit_image_detail()

# 释放资源

self.driver.close()

del self.all_detail_link

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self.driver.find_element_by_css_selector("input[name]")

elem.send_keys(self.keyword + Keys.ENTER)

def _iter_all_page(self):

'''遍历所有页面'''

# 获取总页面

elem = self.driver.find_element_by_css_selector("input[class^=pageInput]")

# page_total = int(elem.text.strip("/ "))

page_total = 5

# 遍历所有页面

base_url = self.driver.current_url

for page_num in range(1, page_total + 1):

if page_num > 1:

self.driver.get(f"{base_url}?pagi={page_num}&")

# href 属性

root = HTML(self.driver.page_source)

is_reach_limit = False

# 获取每一页详情链接 a标签中的href属性

detail_links = root.xpath("//div[start-with(@class,'result')]//a[start-with(@class,'link')]/@href")

for detail_link in detail_links:

self.all_detail_link.append(detail_link)

self.count += 1

if self.limit > 0 and self.count == self.limit:

is_reach_limit = True

break

if is_reach_limit:

break

def _visit_image_detail(self):

'''访问图片详情页'''

for detail_link in self.all_detail_link:

self.driver.get(detail_link)

elem = self.driver.find_element_by_css_selector("#media_container > picture > img")

download_link = elem.get_attribute("src")

self.all_download_link.append(download_link)

if __name__ == '__main__':

keyword = "sunflower"

limit = 3

ImageAutoSearchAndSave(keyword, limit).run()

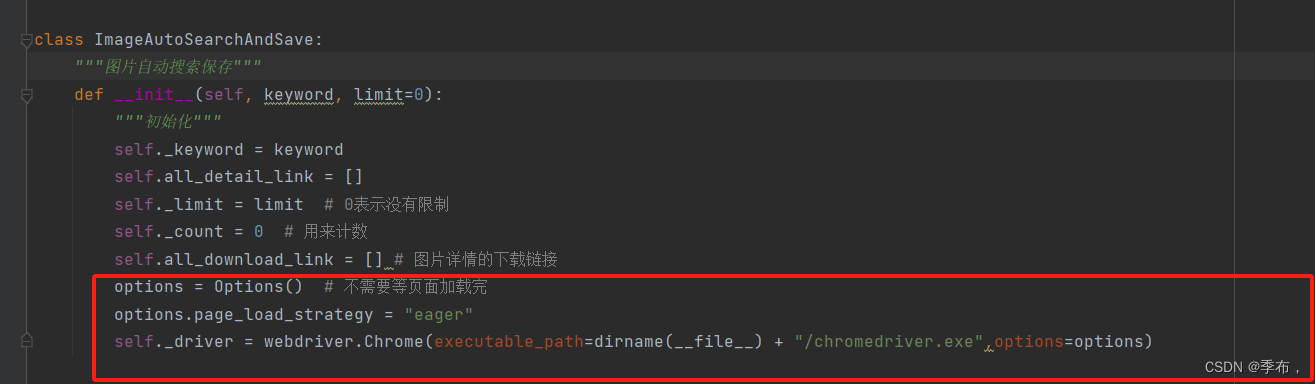

下载所有图片

from io import BytesIO

from os import makedirs

from os.path import dirname

from time import strftime

import requests

from PIL import Image

from lxml.etree import HTML

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

class ImageAutoSearchAndSave:

"""图片自动搜索保存"""

def __init__(self, keyword, limit=0):

"""初始化"""

self._keyword = keyword

self.all_detail_link = []

self._limit = limit # 0表示没有限制

self._count = 0 # 用来计数

self.all_download_link = [] # 图片详情的下载链接

self._driver = webdriver.Chrome(executable_path=dirname(__file__) + "/chromedriver.exe")

def run(self):

"""开始运行"""

print("=======开始=======")

# 访问首页

self._driver.get("https://pixabay.com/")

# 搜索图片

self._search_image()

# 遍历所有页面

self._iter_all_page()

# 访问图片详情页

self._visit_image_detail()

# 释放资源

self._driver.close()

del self.all_detail_link

# 下载所有图片

self._download_all_image()

print("=======结束=======")

def _search_image(self):

'''搜索图片'''

elem = self._driver.find_element_by_css_selector("input[name]")

elem.send_keys(self._keyword + Keys.ENTER)

def _iter_all_page(self):

'''遍历所有页面'''

# 获取总页面

elem = self._driver.find_element_by_css_selector("input[class^=pageInput]")

# page_total = int(elem.text.strip("/ "))

page_total = 5

# 遍历所有页面

base_url = self._driver.current_url

for page_num in range(1, page_total + 1):

if page_num > 1:

self._driver.get(f"{base_url}?pagi={page_num}&")

# href 属性

root = HTML(self._driver.page_source)

is_reach_limit = False

# 获取每一页详情链接 a标签中的href属性

detail_links = root.xpath("//div[start-with(@class,'result')]//a[start-with(@class,'link')]/@href")

for detail_link in detail_links:

self.all_detail_link.append(detail_link)

self._count += 1

if self._limit > 0 and self._count == self._limit:

is_reach_limit = True

break

if is_reach_limit:

break

def _visit_image_detail(self):

'''访问图片详情页 获取对应的图片链接'''

for detail_link in self.all_detail_link:

self._driver.get(detail_link)

elem = self._driver.find_element_by_css_selector("#media_container > picture > img")

download_link = elem.get_attribute("src")

self.all_download_link.append(download_link)

def _download_all_image(self):

'''下载所有图片'''

download_dir = f"{dirname(__file__)}/download/{strftime('%Y%m%d-%H%M%S')}-{self._keyword}"

makedirs(download_dir)

#下载所有图片

count = 0

for download_link in self.all_download_link:

response = requests.get(download_link)

count += 1

# response.content 二进制内容 response.text 文本内容

image = Image.open(BytesIO(response.content))

# rjust 000001.png

filename = f"{str(count).rjust(6,'0')}.png"

file_path = f"{download_dir}/{filename}"

image.save(file_path)

if __name__ == '__main__':

keyword = "sunflower"

limit = 3

ImageAutoSearchAndSave(keyword, limit).run()

原文地址:https://blog.csdn.net/weixin_47906106/article/details/134639240

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。

如若转载,请注明出处:http://www.7code.cn/show_3943.html

如若内容造成侵权/违法违规/事实不符,请联系代码007邮箱:suwngjj01@126.com进行投诉反馈,一经查实,立即删除!

主题授权提示:请在后台主题设置-主题授权-激活主题的正版授权,授权购买:RiTheme官网

声明:本站所有文章,如无特殊说明或标注,均为本站原创发布。任何个人或组织,在未征得本站同意时,禁止复制、盗用、采集、发布本站内容到任何网站、书籍等各类媒体平台。如若本站内容侵犯了原著者的合法权益,可联系我们进行处理。