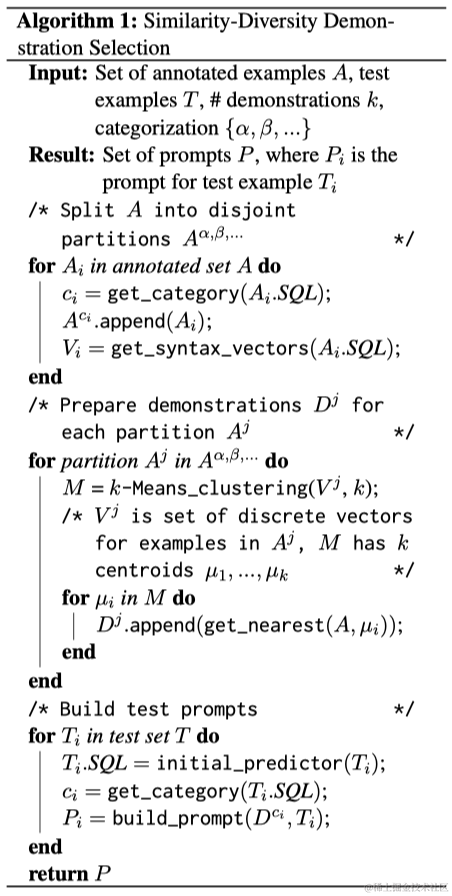

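1. Application example: workflow

(1) Annotate the data and convert the annotations into training data in HALCON dictionary form; (2) preprocess the data;

(3) train the model; (4) evaluate and validate the model; (5) run inference.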

https://blog.csdn.net/ctu_sue/article/details/127280183

2. Application example: parameter descriptions

(1) Parameters to set before creating the object detection model (create_dl_model_detection)

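| No. | Parameter | Explanation |
|---|---|---|
| 10 | 'capacity' | Determines the number of parameters (filter weights) in the deeper part of the object detection network (after the backbone). Possible values are 'high', 'medium', and 'low'. It can be used to trade detection performance against speed. |

| No. | Parameter | Explanation |
|---|---|---|
| 3 | 'momentum' | |
| 5 | 'device' | |

| No. | Parameter | Explanation |
|---|---|---|
| 1 | | Batch size |
| 4 | 'max_overlap_class_agnostic' | Maximum IoU allowed between predicted bounding boxes of two different classes. When the IoU of two bounding boxes exceeds 'max_overlap_class_agnostic', the one with the lower confidence value is suppressed. |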
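To make the IoU-based suppression described for 'max_overlap_class_agnostic' more concrete, here is a minimal Python sketch. It is not HALCON's implementation: the function names `iou` and `suppress_class_agnostic`, the (row1, col1, row2, col2) box layout, and the sample detections are all assumptions made for illustration, and the greedy strategy is just one common way to realize the rule.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (row1, col1, row2, col2)."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def suppress_class_agnostic(detections, max_overlap):
    """Greedy suppression that ignores class labels.

    detections: list of (box, confidence, class_id) tuples (hypothetical layout).
    A box is dropped if it overlaps an already kept, more confident box
    by more than max_overlap.
    """
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) <= max_overlap for k in kept):
            kept.append(det)
    return kept


# Hypothetical detections: the second box overlaps the first but has another class.
detections = [
    ((0, 0, 10, 10), 0.9, 1),
    ((1, 1, 11, 11), 0.6, 2),
    ((50, 50, 60, 60), 0.8, 2),
]
kept = suppress_class_agnostic(detections, max_overlap=0.5)
print(kept)
```

With these sample values the second detection is removed, because its IoU with the more confident first box is above the 0.5 threshold even though the two boxes carry different class IDs.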

3. Application example: code

import os
import xml.etree.ElementTree as ET


def Get_TrainData(xmlfile):
    # Returns [image name, "xmin,ymin,xmax,ymax,cls_id", ...] and the classes found in this file.
    TrainDataList = []
    Classes = []
    xml_file_name = os.path.basename(xmlfile)
    # splitext keeps any dots inside the base name intact
    file_name = os.path.splitext(xml_file_name)[0]
    # The images are assumed to be PNG files named after the XML files
    img_file_name = file_name + '.png'
    TrainDataList.append(img_file_name)
    with open(xmlfile, "r", encoding="utf-8") as in_file:
        tree = ET.parse(in_file)
    root = tree.getroot()
    for obj in root.iter('object'):
        cls = obj.find('name').text
        if cls not in Classes:
            Classes.append(cls)
        # cls_id is the index within this file's class list, not a global ID
        cls_id = Classes.index(cls)
        xmlbox = obj.find('bndbox')
        b = (int(xmlbox.find('xmin').text), int(xmlbox.find('ymin').text),
             int(xmlbox.find('xmax').text), int(xmlbox.find('ymax').text))
        list_file = ",".join([str(a) for a in b]) + ',' + str(cls_id)
        TrainDataList.append(list_file)
    return TrainDataList, Classes


# Directory containing the Pascal VOC style XML annotations
xml_dir = './pill data/DataLabel'
# Directory that receives TrainList.txt and classes.txt
save_dir = './pill data/'

TotalTrainDataList = []
TotalClasses = []
for xmlfile in os.listdir(xml_dir):
    if not xmlfile.endswith('.xml'):
        continue  # skip anything that is not an annotation file
    TrainDataList, Classes = Get_TrainData(os.path.join(xml_dir, xmlfile))
    TotalTrainDataList.append(TrainDataList)
    TotalClasses.append(Classes)

# One line per image: "<image> <xmin,ymin,xmax,ymax,cls_id> ..."
with open(os.path.join(save_dir, 'TrainList.txt'), encoding="utf-8", mode="w") as f:
    for each_TrainDataList in TotalTrainDataList:
        f.write(" ".join(each_TrainDataList) + "\n")

# One line per image, listing that image's class names in cls_id order
with open(os.path.join(save_dir, 'classes.txt'), encoding="utf-8", mode="w") as f:
    for each_Classes in TotalClasses:
        f.write(",".join(each_Classes) + "\n")
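As a quick check of the record format the script writes, the sketch below runs the same parsing logic on an in-memory annotation instead of a file. The XML content, the image name `img_001.png`, the class names, and the helper `build_record` are hypothetical and exist only for this illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the Pascal VOC style layout the script expects.
SAMPLE_XML = """
<annotation>
  <object>
    <name>capsule</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>80</ymax></bndbox>
  </object>
  <object>
    <name>tablet</name>
    <bndbox><xmin>5</xmin><ymin>6</ymin><xmax>50</xmax><ymax>60</ymax></bndbox>
  </object>
</annotation>
"""


def build_record(image_name, xml_text):
    """Return (TrainList.txt line, per-image class list) for one annotation."""
    classes = []
    fields = [image_name]
    for obj in ET.fromstring(xml_text.strip()).iter("object"):
        name = obj.find("name").text
        if name not in classes:
            classes.append(name)
        box = obj.find("bndbox")
        coords = [str(int(box.find(tag).text))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        # The last field is the index into this image's own class list.
        fields.append(",".join(coords + [str(classes.index(name))]))
    return " ".join(fields), classes


line, classes = build_record("img_001.png", SAMPLE_XML)
print(line)               # line for TrainList.txt
print(",".join(classes))  # matching line for classes.txt
```

Each image therefore produces one line in TrainList.txt and one line in classes.txt, and the trailing number of every box only has meaning together with the class line of the same image.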

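* 1. Variable definitions
* Class file generated in the previous step
class_txt := './InputFile/classes.txt'
* Annotation file generated in the previous step
train_txt := './InputFile/TrainList.txt'
* Image directory as seen from the HALCON conversion script
ImageDir := './DataImage'
* Image directory as seen from the HALCON training script
BaseImgDir := './DataImage'
* Output file: the training data in a form HALCON can read
dict_File := './InputFile/dl_dataset.hdict'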

* 2. Read the classes
ClassID := []
ClassName := []
open_file (class_txt, 'input', FileHandle)
repeat
    fread_line (FileHandle, oneline, IsEOF)
    if (IsEOF == 1)
        break
    endif
    * Skip blank lines
    if (oneline == ' ' or oneline == '\n')
        continue
    endif
    * Strip the trailing newline
    tuple_regexp_replace (oneline, '\n', '', oneline)
    tuple_length (ClassID, Length)
    ClassID[Length] := Length + 1
    tuple_concat (ClassName, oneline, ClassName)
until (IsEOF)
close_file (FileHandle)

* 3. Parse TrainList.txt
TrainDataList := []
open_file (train_txt, 'input', FileHandle)
repeat
    fread_line (FileHandle, oneline, IsEOF)
    if (IsEOF == 1)
        break
    endif
    * Skip blank lines
    if (oneline == ' ' or oneline == '\n')
        continue
    endif
    * Strip the trailing newline
    tuple_regexp_replace (oneline, '\n', '', oneline)
    tuple_concat (TrainDataList, oneline, TrainDataList)
until (IsEOF)
close_file (FileHandle)

* 4. Build the sample dictionaries
class_ids := [1,2,3,4,5,6,7,8,9,10]
* These names must match the labels used in the XML annotations exactly
class_names := ['黑圆','土圆','赭圆','白圆','鱼肝油','棕白胶囊','蓝白胶囊','白椭圆','黑椭圆','棕色胶囊']
AllSamples := []
for Index1 := 0 to |TrainDataList| - 1 by 1
    EachTrainList := TrainDataList[Index1]
    tuple_split (EachTrainList, ' ', DataList)
    * The first field is the image file name, the rest are bounding boxes
    imageFile := DataList[0]
    tuple_length (DataList, Length)
    DataList := DataList[1:Length-1]
    create_dict (SampleImage)
    set_dict_tuple (SampleImage, 'image_id', Index1 + 1)
    set_dict_tuple (SampleImage, 'image_file_name', imageFile)
    bbox_label_id := []
    bbox_row1 := []
    bbox_col1 := []
    bbox_row2 := []
    bbox_col2 := []
    * Class names of this image, in the order of its local class indices
    class_names_temp1 := [ClassName[Index1]]
    tuple_split (class_names_temp1, ',', class_names_temp2)
    for bbox_index := 0 to |DataList| - 1 by 1
        bbox_data := DataList[bbox_index]
        * Fields: xmin, ymin, xmax, ymax, local class index
        tuple_split (bbox_data, ',', bbox_data_list)
        * Map the local class index to the global class ID (1-based)
        tuple_number (bbox_data_list[4], Number)
        class_name := class_names_temp2[Number]
        tuple_find_first (class_names, class_name, class_name_index)
        tuple_concat (bbox_label_id, class_name_index + 1, bbox_label_id)
        * HALCON uses (row, column), so row = y and column = x
        tuple_number (bbox_data_list[1], Number)
        tuple_concat (bbox_row1, Number, bbox_row1)
        tuple_number (bbox_data_list[0], Number)
        tuple_concat (bbox_col1, Number, bbox_col1)
        tuple_number (bbox_data_list[3], Number)
        tuple_concat (bbox_row2, Number, bbox_row2)
        tuple_number (bbox_data_list[2], Number)
        tuple_concat (bbox_col2, Number, bbox_col2)
    endfor
    set_dict_tuple (SampleImage, 'bbox_label_id', bbox_label_id)
    set_dict_tuple (SampleImage, 'bbox_row1', bbox_row1)
    set_dict_tuple (SampleImage, 'bbox_col1', bbox_col1)
    set_dict_tuple (SampleImage, 'bbox_row2', bbox_row2)
    set_dict_tuple (SampleImage, 'bbox_col2', bbox_col2)
    tuple_concat (AllSamples, SampleImage, AllSamples)
endfor

* 5. Assemble and write the final training data in dictionary form
create_dict (DLDataset)
set_dict_tuple (DLDataset, 'image_dir', './DataImage')
set_dict_tuple (DLDataset, 'class_ids', class_ids)
set_dict_tuple (DLDataset, 'class_names', class_names)
set_dict_tuple (DLDataset, 'samples', AllSamples)
write_dict (DLDataset, dict_File, [], [])
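The coordinate and label mapping done by the conversion script can be mirrored in a few lines of Python, which may help when checking a generated .hdict against the annotations. This is only a sketch: the helper `to_halcon_sample`, the English class names, and the sample line are hypothetical, and a plain Python dict stands in for the HALCON dictionary.

```python
def to_halcon_sample(image_id, line, image_classes, class_names):
    """Convert one TrainList.txt line into a dict that uses the sample keys
    set by the conversion script (bbox_row1, bbox_col1, ...)."""
    image_file, *boxes = line.split(" ")
    sample = {
        "image_id": image_id,
        "image_file_name": image_file,
        "bbox_label_id": [],
        "bbox_row1": [],
        "bbox_col1": [],
        "bbox_row2": [],
        "bbox_col2": [],
    }
    for box in boxes:
        xmin, ymin, xmax, ymax, local_id = (int(v) for v in box.split(","))
        # The local index refers to this image's class list; the global ID is 1-based.
        name = image_classes[local_id]
        sample["bbox_label_id"].append(class_names.index(name) + 1)
        # Rows correspond to y, columns to x.
        sample["bbox_row1"].append(ymin)
        sample["bbox_col1"].append(xmin)
        sample["bbox_row2"].append(ymax)
        sample["bbox_col2"].append(xmax)
    return sample


# Hypothetical global class list and one hypothetical annotation line.
class_names = ["tablet", "capsule"]
sample = to_halcon_sample(
    1,
    "img_001.png 10,20,110,80,0 5,6,50,60,1",
    image_classes=["capsule", "tablet"],
    class_names=class_names,
)
print(sample)
```

The point to note is the double lookup: the number stored with each box is resolved through the per-image class line first, and only then through the global class list that defines the IDs used for training.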

dev_close_window ()
dev_update_off ()
set_system ('seed_rand', 42)

*** 0.) SET INPUT/OUTPUT PATHS ***
get_system ('example_dir', HalconExampleDir)
PillBagJsonFile := HalconExampleDir + '/hdevelop/Deep-Learning/Detection/pill_bag.json'
InputImageDir := HalconExampleDir + '/images/'
OutputDir := 'detect_pills_data'
* Set to true if the results should be deleted after running this program.
RemoveResults := false

*** 1.) PREPARE ***
* We read the dataset from a COCO file.
* Alternatively, read a DLDataset dictionary created by the MVTec Deep Learning Tool using read_dict().
read_dl_dataset_from_coco (PillBagJsonFile, InputImageDir, dict{read_segmentation_masks: false}, DLDataset)
* Create a detection model whose parameters suit the dataset.
DLModelDetectionParam := dict{image_dimensions: [512, 320, 3], max_level: 4}
DLModelDetectionParam.class_ids := DLDataset.class_ids
DLModelDetectionParam.class_names := DLDataset.class_names
create_dl_model_detection ('pretrained_dl_classifier_compact.hdl', |DLModelDetectionParam.class_ids|, DLModelDetectionParam, DLModelHandle)
* Preprocess the data in DLDataset.
split_dl_dataset (DLDataset, 60, 20, [])
* Existing preprocessed data will be overwritten if necessary.
PreprocessSettings := dict{overwrite_files: 'auto'}
create_dl_preprocess_param_from_model (DLModelHandle, 'none', 'full_domain', [], [], [], DLPreprocessParam)
preprocess_dl_dataset (DLDataset, OutputDir, DLPreprocessParam, PreprocessSettings, DLDatasetFileName)
* Randomly pick 10 preprocessed samples and visualize them.
WindowDict := dict{}
for Index := 0 to 9 by 1
    SampleIndex := round(rand(1) * (|DLDataset.samples| - 1))
    read_dl_samples (DLDataset, SampleIndex, DLSample)
    dev_display_dl_data (DLSample, [], DLDataset, 'bbox_ground_truth', [], WindowDict)
    dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
    stop ()
endfor
dev_close_window_dict (WindowDict)

*** 2.) TRAIN ***
* Training can be performed on a GPU or a CPU.
* This example uses the GPU whenever possible.
* If you explicitly want to run this example on the CPU, choose the CPU device instead.
query_available_dl_devices (['runtime', 'runtime'], ['gpu', 'cpu'], DLDeviceHandles)
if (|DLDeviceHandles| == 0)
    throw ('No supported device found to continue this example.')
endif
* Because of the filter used in query_available_dl_devices, the first device is a GPU if one is available.
DLDevice := DLDeviceHandles[0]
get_dl_device_param (DLDevice, 'type', DLDeviceType)
if (DLDeviceType == 'cpu')
    * The number of threads used can affect the training duration.
    NumThreadsTraining := 4
    set_system ('thread_num', NumThreadsTraining)
endif
* For details see the documentation of set_dl_model_param() and get_dl_model_param().
set_dl_model_param (DLModelHandle, 'batch_size', 1)
set_dl_model_param (DLModelHandle, 'learning_rate', 0.001)
set_dl_model_param (DLModelHandle, 'device', DLDevice)
* Here we run a short training of 10 epochs. For better model performance, increase the number of epochs, e.g. from 10 to 60.
create_dl_train_param (DLModelHandle, 10, 1, 'true', 42, [], [], TrainParam)
* The training is carried out by the following procedure, which calls train_dl_model_batch().
train_dl_model (DLDataset, DLModelHandle, TrainParam, 0, TrainResults, TrainInfos, EvaluationInfos)
* Read the best model file saved by train_dl_model.
read_dl_model ('model_best.hdl', DLModelHandle)
dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'left', 'black', [], [])
stop ()
dev_close_window ()
dev_close_window ()

*** 3.) EVALUATE ***
*
GenParamEval := dict{detailed_evaluation: true, show_progress: true}
set_dl_model_param (DLModelHandle, 'device', DLDevice)
evaluate_dl_model (DLDataset, DLModelHandle, 'split', 'test', GenParamEval, EvaluationResult, EvalParams)
DisplayMode := dict{display_mode: ['pie_charts_precision', 'pie_charts_recall']}
dev_display_detection_detailed_evaluation (EvaluationResult, EvalParams, DisplayMode, WindowDict)
dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
stop ()
dev_close_window_dict (WindowDict)
* Optimize the model for inference, that is, reduce its memory consumption.
set_dl_model_param (DLModelHandle, 'optimize_for_inference', 'true')
set_dl_model_param (DLModelHandle, 'batch_size', 1)
* Save the model in this optimized state.
write_dl_model (DLModelHandle, 'model_best.hdl')

*** 4.) INFER ***
* To demonstrate the inference step, we apply the trained model to some randomly chosen example images.
list_image_files (InputImageDir + 'pill_bag', 'default', 'recursive', ImageFiles)
tuple_shuffle (ImageFiles, ImageFilesShuffled)
* Create the dictionary of windows used for visualization.
WindowDict := dict{}
DLDatasetInfo := dict{}
get_dl_model_param (DLModelHandle, 'class_ids', DLDatasetInfo.class_ids)
get_dl_model_param (DLModelHandle, 'class_names', DLDatasetInfo.class_names)
for IndexInference := 0 to 9 by 1
    read_image (Image, ImageFilesShuffled[IndexInference])
    gen_dl_samples_from_images (Image, DLSampleInference)
    preprocess_dl_samples (DLSampleInference, DLPreprocessParam)
    apply_dl_model (DLModelHandle, DLSampleInference, [], DLResult)
    dev_display_dl_data (DLSampleInference, DLResult, DLDataset, 'bbox_result', [], WindowDict)
    dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
    stop ()
endfor
dev_close_window_dict (WindowDict)

*** 5.) REMOVE FILES ***
clean_up_output (OutputDir, RemoveResults)

If you would rather not annotate the data yourself, you can download the resources I have prepared: https://download.csdn.net/download/qq_44744164/88595779
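The call split_dl_dataset (DLDataset, 60, 20, []) in the training script assigns 60% of the samples to training and 20% to validation, and the remaining 20% becomes the test split that evaluate_dl_model uses later. The Python sketch below shows the idea with a plain random split. It is an assumption-laden illustration, not HALCON's procedure: the helper `split_indices` is hypothetical, and the real procedure may distribute the classes across the splits differently.

```python
import random


def split_indices(num_samples, train_percent, validation_percent, seed=42):
    """Randomly partition sample indices into train, validation, and test.

    Whatever is left after the train and validation shares goes to test.
    """
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(num_samples * train_percent / 100)
    n_val = round(num_samples * validation_percent / 100)
    return {
        "train": indices[:n_train],
        "validation": indices[n_train:n_train + n_val],
        "test": indices[n_train + n_val:],
    }


splits = split_indices(100, 60, 20)
print({name: len(ids) for name, ids in splits.items()})
```

Fixing the seed keeps the partition reproducible between runs, which serves the same purpose as the set_system ('seed_rand', 42) call at the top of the HALCON script.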

Original article: https://blog.csdn.net/qq_44744164/article/details/134781532
