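“A new private, offline chat experience! The llama-gpt chatbot: fast, secure, powered by Llama 2, with Code Llama support!”

A self-hosted, offline, ChatGPT-like chatbot. Powered by Llama 2. 100% private, with no data leaving your device.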

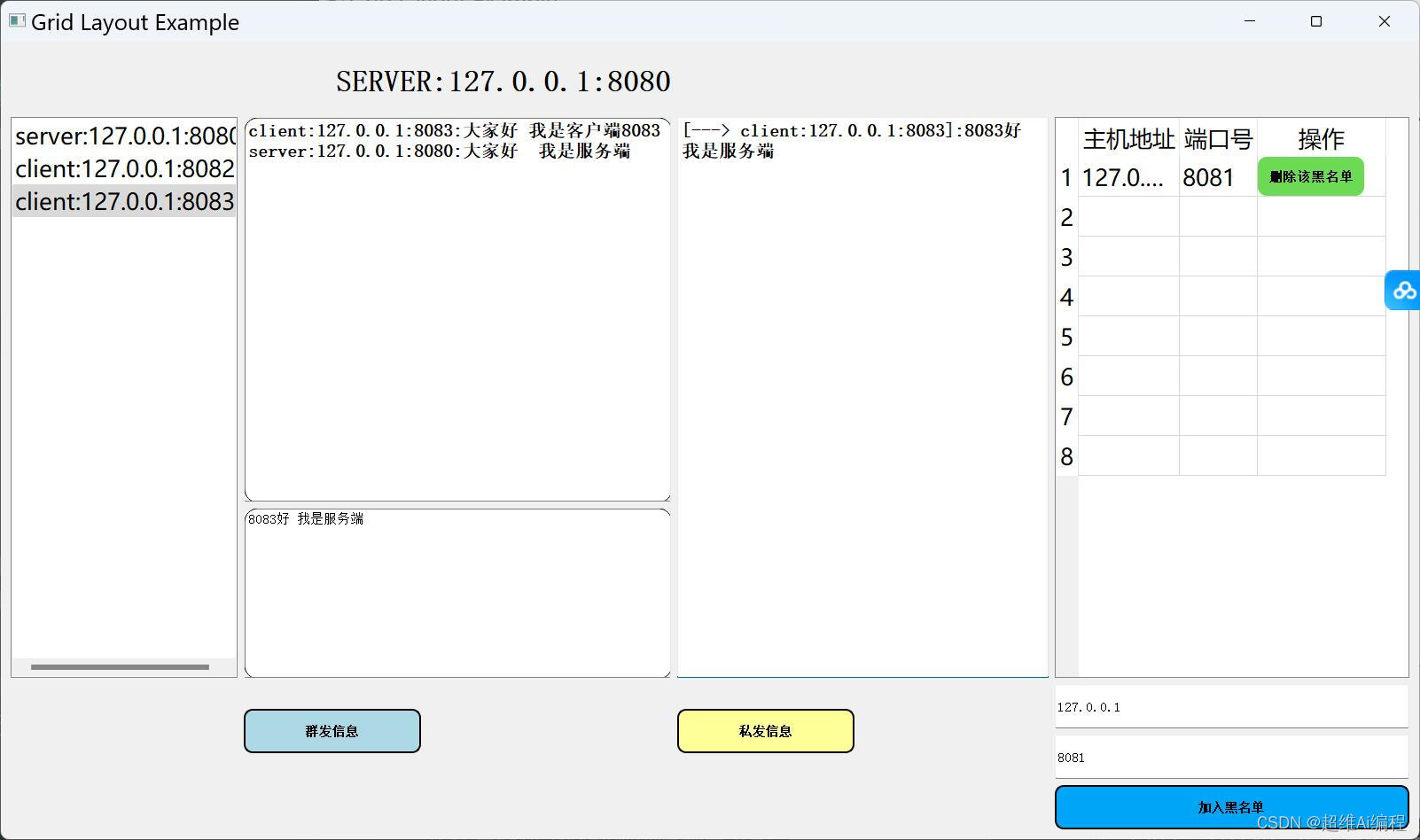

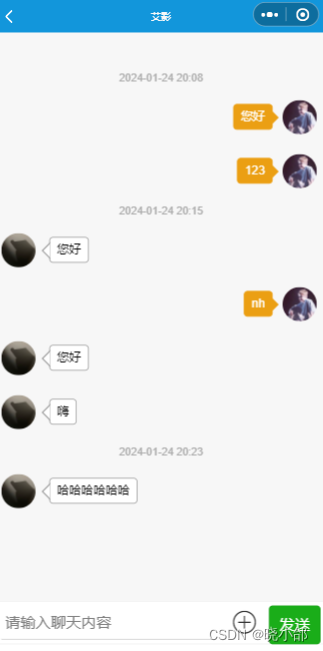

Demo

https://github.com/getumbrel/llama-gpt/assets/10330103/5d1a76b8-ed03-4a51-90bd-12ebfaf1e6cd

1. Supported models

Currently, LlamaGPT supports the following models. Support for running custom models is on the roadmap.

| Model name | Model size | Model download size | Memory required |
|---|---|---|---|
| Nous Hermes Llama 2 7B Chat (GGML q4_0) | 7B | 3.79GB | 6.29GB |
| Nous Hermes Llama 2 13B Chat (GGML q4_0) | 13B | 7.32GB | 9.82GB |
| Nous Hermes Llama 2 70B Chat (GGML q4_0) | 70B | 38.87GB | 41.37GB |
| Code Llama 7B Chat (GGUF Q4_K_M) | 7B | 4.24GB | 6.74GB |
| Code Llama 13B Chat (GGUF Q4_K_M) | 13B | 8.06GB | 10.56GB |
| Phind Code Llama 34B Chat (GGUF Q4_K_M) | 34B | 20.22GB | 22.72GB |
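
The memory column can be used to shortlist models for a given machine. The following is a minimal Python sketch, not part of LlamaGPT: the function name `models_that_fit` is hypothetical, the keys are the `--model` values used by the run scripts later in this guide, and the figures are copied from the table. It does not account for memory used by the operating system or other processes, so treat the result as an upper bound.

```python
# Memory requirements (GB) copied from the table above, keyed by the
# --model values used by the run scripts in this guide.
MEMORY_REQUIRED_GB = {
    "7b": 6.29,
    "13b": 9.82,
    "70b": 41.37,
    "code-7b": 6.74,
    "code-13b": 10.56,
    "code-34b": 22.72,
}


def models_that_fit(available_gb: float) -> list[str]:
    """Return model values whose memory requirement fits, largest first."""
    fitting = [
        (required, name)
        for name, required in MEMORY_REQUIRED_GB.items()
        if required <= available_gb
    ]
    return [name for required, name in sorted(fitting, reverse=True)]


if __name__ == "__main__":
    # 16 GB is an example figure, not a recommendation.
    print(models_that_fit(16.0))  # prints ['code-13b', '13b', 'code-7b', '7b']
```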

1.1 Install LlamaGPT on umbrelOS

Running LlamaGPT on an umbrelOS home server is one click. Simply install it from the Umbrel App Store.

1.2 Install LlamaGPT on M1/M2 Mac

Make sure you have Docker and Xcode installed.

Then, clone this repo and cd into it:

git clone https://github.com/getumbrel/llama-gpt.git

cd llama-gpt

Run LlamaGPT with the following command:

./run-mac.sh --model 7b

You can access LlamaGPT at http://localhost:3000.

To run 13B or 70B chat models, replace 7b with 13b or 70b respectively.

To run 7B, 13B or 34B Code Llama models, replace 7b with code-7b, code-13b or code-34b respectively.

To stop LlamaGPT, press Ctrl + C in Terminal.

1.3 Install with Docker

You can run LlamaGPT on any x86 or arm64 system. Make sure you have Docker installed.

Then, clone this repo and cd into it:

git clone https://github.com/getumbrel/llama-gpt.git

cd llama-gpt

Run LlamaGPT with the following command:

./run.sh --model 7b

Or if you have an Nvidia GPU, you can run LlamaGPT with CUDA support using the --with-cuda flag, like:

./run.sh --model 7b --with-cuda

You can access LlamaGPT at http://localhost:3000.

To run 13B or 70B chat models, replace 7b with 13b or 70b respectively.

To run Code Llama 7B, 13B or 34B models, replace 7b with code-7b, code-13b or code-34b respectively.

To stop LlamaGPT, press Ctrl + C in Terminal.
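
If you launch LlamaGPT from a script, it can help to validate the model value before calling run.sh. The sketch below is a hypothetical helper, not something shipped with the repo: `build_run_command` and `KNOWN_MODELS` are names invented for illustration, and the list of values is taken only from the ones mentioned in this guide.

```python
import shlex

# Model values mentioned in this guide for ./run.sh.
KNOWN_MODELS = ("7b", "13b", "70b", "code-7b", "code-13b", "code-34b")


def build_run_command(model: str = "7b", with_cuda: bool = False) -> list[str]:
    """Build the argument list for ./run.sh; raises on an unknown model."""
    if model not in KNOWN_MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {KNOWN_MODELS}")
    command = ["./run.sh", "--model", model]
    if with_cuda:
        command.append("--with-cuda")
    return command


if __name__ == "__main__":
    # Print the command instead of running it.
    print(shlex.join(build_run_command("code-13b", with_cuda=True)))
```

To actually start LlamaGPT, the returned list could be passed to `subprocess.run(...)` with the cloned repo as the working directory.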

Note: On the first run, it may take a while for the model to be downloaded to the /models directory. You may also see lots of output like this for a few minutes, which is normal:

llama-gpt-llama-gpt-ui-1 | [INFO wait] Host [llama-gpt-api-13b:8000] not yet available...

After the model has been automatically downloaded and loaded, and the API server is running, you'll see an output like:

llama-gpt-ui_1 | ready - started server on 0.0.0.0:3000, url: http://localhost:3000
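
Instead of watching the logs, you could poll the UI address until it responds. This is a rough sketch under a few assumptions: the helper names (`url_is_up`, `wait_until_ready`) are hypothetical, the attempt count and delay are arbitrary defaults, and any HTTP response at all is treated as "ready".

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def url_is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET to url gets any response from the server."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure and similar.
        return False


def wait_until_ready(
    url: str = "http://localhost:3000",
    attempts: int = 60,
    delay_seconds: float = 5.0,
    probe: Callable[[str], bool] = url_is_up,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll url until probe succeeds or the attempts run out."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay_seconds)
    return False
```

With the defaults, `wait_until_ready()` keeps trying for roughly five minutes; a first run that downloads a large model may need more attempts.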

1.4 Install on Kubernetes

First, make sure you have a running Kubernetes cluster and kubectl is configured to interact with it.

Then, clone this repo and cd into it.

To deploy to Kubernetes, first create a namespace:

kubectl create ns llama

Then apply the manifests under the /deploy/kubernetes directory with:

kubectl apply -k deploy/kubernetes/. -n llama

Expose your service however you would normally do that.

2. OpenAI-compatible API

Thanks to llama-cpp-python, a drop-in replacement for the OpenAI API is available at http://localhost:3001. Open http://localhost:3001/docs to see the API documentation.
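
As an illustration, a chat request could be sent with nothing but the Python standard library. This is a sketch, not an official client: the `/v1/chat/completions` path and the response shape follow the OpenAI convention and are assumptions here, so check http://localhost:3001/docs for the routes your instance actually exposes. The helper names are hypothetical.

```python
import json
import urllib.request

API_BASE = "http://localhost:3001"  # address given in this guide


def build_chat_request(prompt: str, temperature: float = 0.0) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completion."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{API_BASE}/v1/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the prompt to the local API and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=300) as response:
        return extract_reply(json.load(response))
```

With LlamaGPT running, `ask("How does the universe expand?")` would return the model's reply as a string. The long timeout is deliberate, since generation on slower hardware can take a while.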

- Benchmarks

We’ve tested LlamaGPT models on the following hardware with the default system prompt, and user prompt: “How does the universe expand?” at temperature 0 to guarantee deterministic results. Generation speed is averaged over the first 10 generations.

Feel free to add your own benchmarks to this table by opening a pull request.
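
If you want to contribute a figure, the averaging could be done as in the sketch below. It assumes you have already recorded a token count and elapsed time for each generation; the function names are hypothetical, and taking the mean of per-generation speeds is only one reading of "averaged over the first 10 generations".

```python
from statistics import mean


def tokens_per_second(token_count: int, elapsed_seconds: float) -> float:
    """Speed of a single generation."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return token_count / elapsed_seconds


def average_generation_speed(
    runs: list[tuple[int, float]], first_n: int = 10
) -> float:
    """Mean tokens/sec over the first first_n (token_count, seconds) runs."""
    selected = runs[:first_n]
    if not selected:
        raise ValueError("need at least one run")
    return mean(tokens_per_second(tokens, seconds) for tokens, seconds in selected)


if __name__ == "__main__":
    # Made-up measurements for illustration only, not real benchmark data.
    sample_runs = [(100, 10.0), (200, 10.0)]
    print(average_generation_speed(sample_runs))  # prints 15.0
```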

2.1 Nous Hermes Llama 2 7B Chat (GGML q4_0)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 54 tokens/sec |
| GCP c2-standard-16 vCPU (64 GB RAM) | 16.7 tokens/sec |
| Ryzen 5700G 4.4GHz 4c (16 GB RAM) | 11.50 tokens/sec |
| GCP c2-standard-4 vCPU (16 GB RAM) | 4.3 tokens/sec |
| Umbrel Home (16GB RAM) | 2.7 tokens/sec |
| Raspberry Pi 4 (8GB RAM) | 0.9 tokens/sec |

2.2 Nous Hermes Llama 2 13B Chat (GGML q4_0)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 20 tokens/sec |
| GCP c2-standard-16 vCPU (64 GB RAM) | 8.6 tokens/sec |
| GCP c2-standard-4 vCPU (16 GB RAM) | 2.2 tokens/sec |
| Umbrel Home (16GB RAM) | 1.5 tokens/sec |

2.3 Nous Hermes Llama 2 70B Chat (GGML q4_0)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 4.8 tokens/sec |
| GCP e2-standard-16 vCPU (64 GB RAM) | 1.75 tokens/sec |
| GCP c2-standard-16 vCPU (64 GB RAM) | 1.62 tokens/sec |

2.4 Code Llama 7B Chat (GGUF Q4_K_M)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 41 tokens/sec |

2.5 Code Llama 13B Chat (GGUF Q4_K_M)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 25 tokens/sec |

2.6 Phind Code Llama 34B Chat (GGUF Q4_K_M)

| Device | Generation speed |
|---|---|
| M1 Max MacBook Pro (64GB RAM) | 10.26 tokens/sec |


Original article: https://blog.csdn.net/sinat_39620217/article/details/133762478
