创建RDD

textfile

调用SparkContext.textFile()方法,从外部存储中读取数据来创建 RDD

parallelize

调用SparkContext 的 parallelize()方法,将一个存在的集合,变成一个RDD

makeRDD

方法一

方法二:分配一个本地Scala集合形成一个RDD,为每个集合对象创建一个最佳分区。

举例

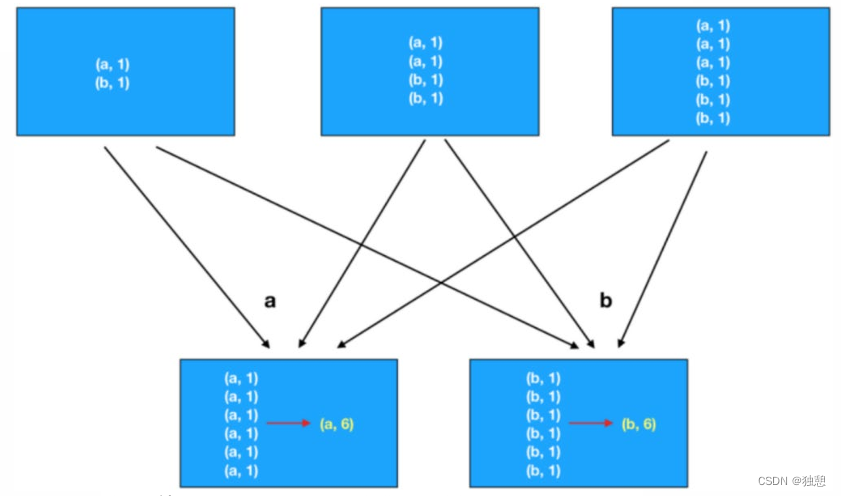

转换算子

举例

行动算子

声明:本站所有文章,如无特殊说明或标注,均为本站原创发布。任何个人或组织,在未征得本站同意时,禁止复制、盗用、采集、发布本站内容到任何网站、书籍等各类媒体平台。如若本站内容侵犯了原著者的合法权益,可联系我们进行处理。