Environment

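| IP | Hostname | Roles | Storage device |
| --- | --- | --- | --- |
| 192.168.2.100 | master100 | | Empty device larger than 5 GB |
| 192.168.2.101 | node101 | mon,mgr,osd,mds,rgw | Empty device larger than 5 GB |
| 192.168.2.102 | node102 | mon,mgr,osd,mds,rgw | Empty device larger than 5 GB |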

Disable the firewall

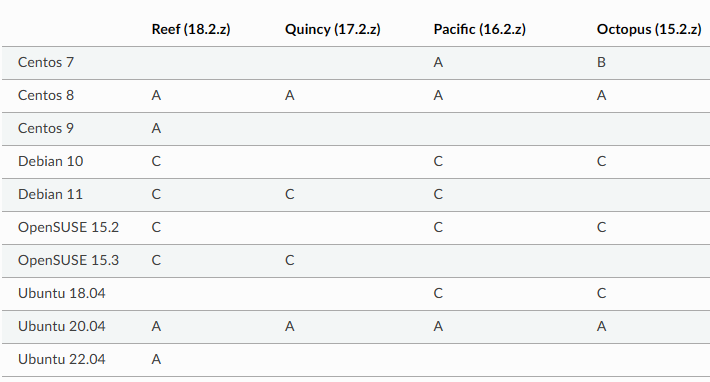

Configure a China-based Ceph mirror (Ubuntu Server 20.04)

wget -q -O- 'https://mirrors.aliyun.com/ceph/keys/release.asc' | apt-key add -

apt-add-repository 'deb https://mirrors.aliyun.com/ceph/debian-quincy/ focal main'

See "Get Packages" in the Ceph Documentation.

curl https://ghproxy.com/https://raw.githubusercontent.com/ceph/ceph/quincy/src/cephadm/cephadm -o cephadm

chmod +x cephadm

./cephadm add-repo --release quincy

./cephadm install ceph-common ceph

./cephadm install

which cephadm

which ceph

cephadm bootstrap --mon-ip 192.168.2.100

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node101

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node102

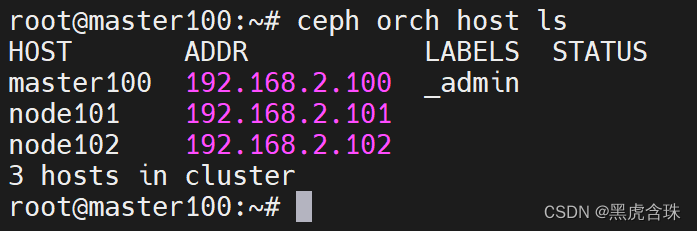

ceph orch host add node101 (or, with an explicit IP: ceph orch host add node101 192.168.2.101)

ceph orch host add node102 (or, with an explicit IP: ceph orch host add node102 192.168.2.102)

Deploy MON

ceph orch apply mon master100,node101,node102

Deploy OSD

ceph orch device ls

ceph orch apply osd --all-available-devices

ceph orch daemon add osd master100:/dev/sdb

ceph orch daemon add osd node101:/dev/sdb

ceph orch daemon add osd node102:/dev/sdb

ceph orch apply mds cephfs --placement="3 master100 node101 node102"

Deploy RGW

ceph orch apply rgw myrealm myzone --placement="3 master100 node101 node102"

Deploy MGR

ceph orch apply mgr master100,node101,node102

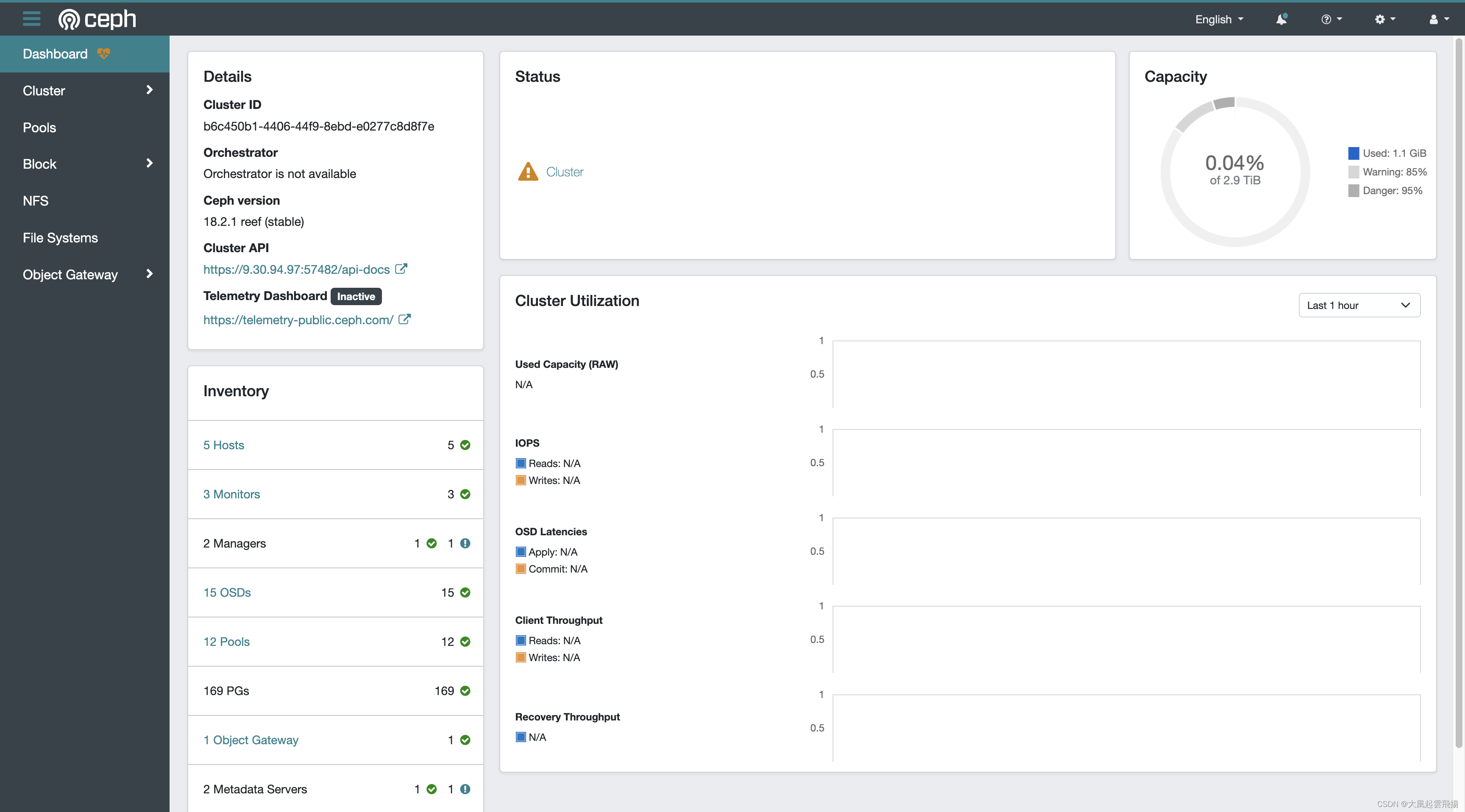

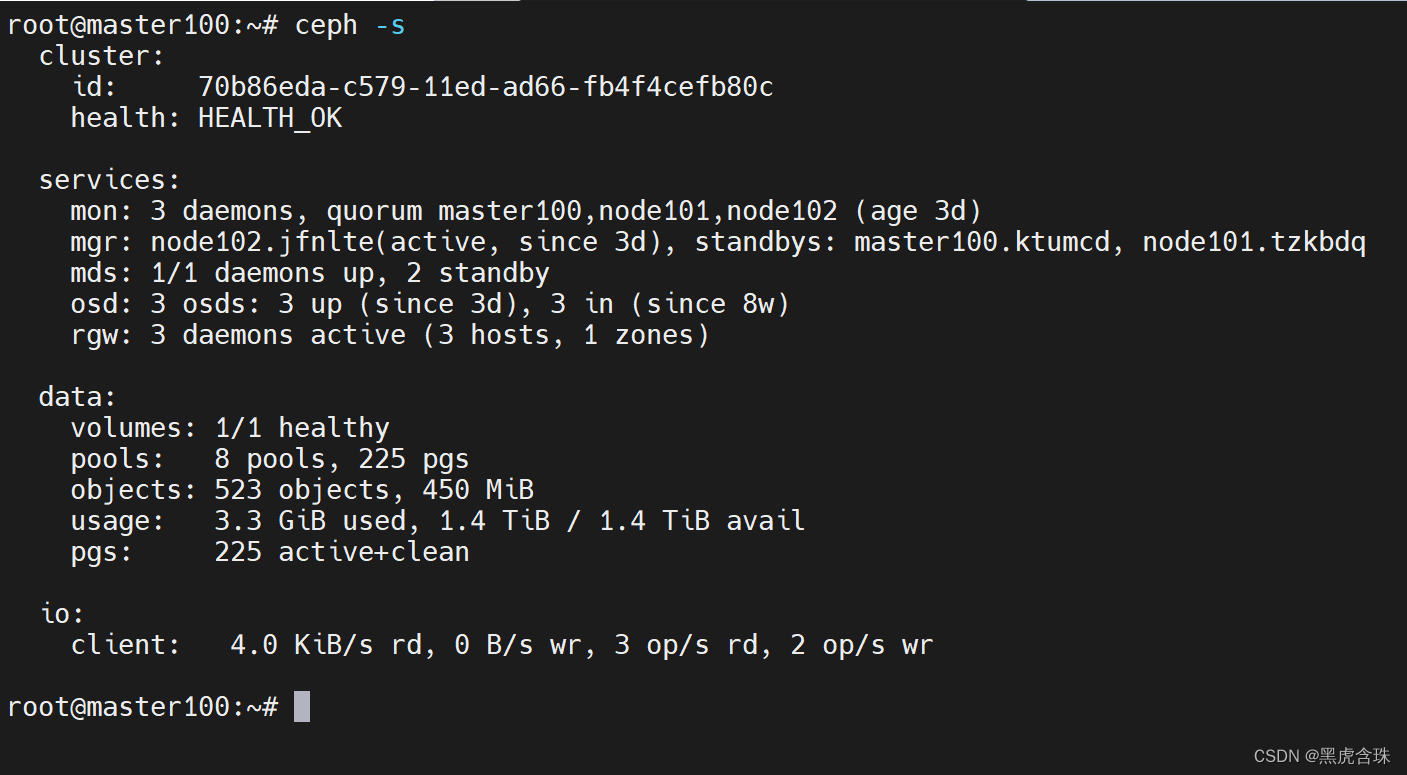

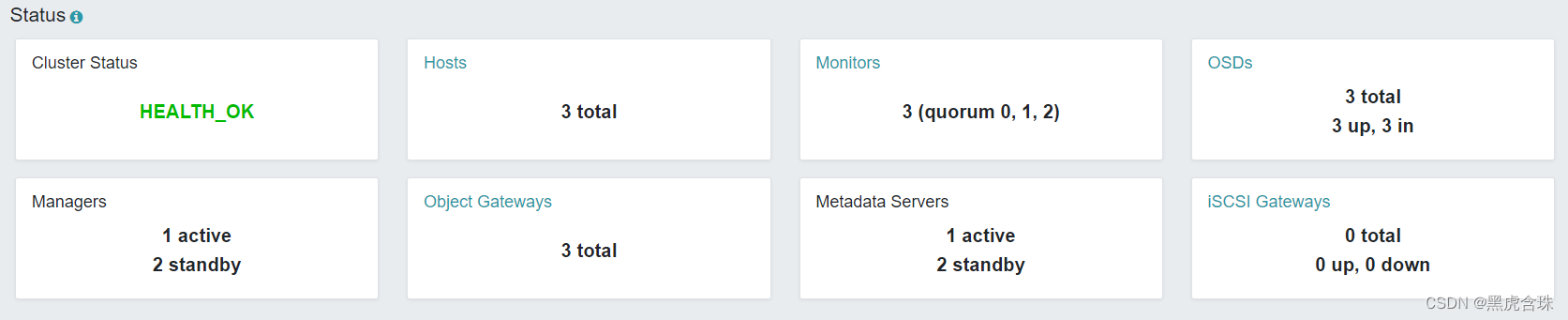

Access the Ceph dashboard

https://192.168.2.100:8443

git clone -b release-v3.8 https://github.com/ceph/ceph-csi.git

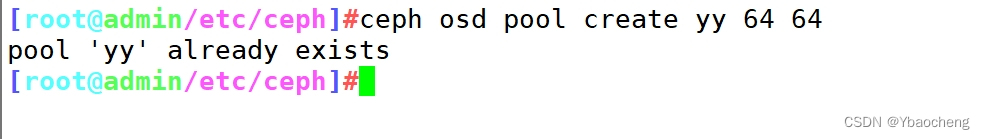

Create a pool

ceph osd pool create kubernetes

rbd pool init kubernetes

kubectl apply -f csi-config-map.yaml

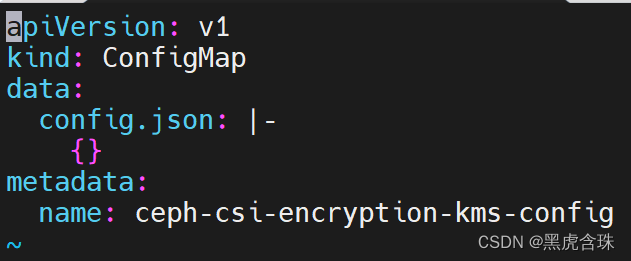
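The contents of csi-config-map.yaml appear only in the screenshot. As a minimal sketch, the file could be generated as follows before applying it. The ConfigMap name `ceph-csi-config` follows the upstream ceph-csi examples; the all-zero cluster ID is a placeholder to be replaced with the output of `ceph fsid`, and the monitor list assumes the three hosts from the environment table listening on port 6789.

```shell
# Placeholder: replace with the real cluster ID printed by `ceph fsid`.
CLUSTER_ID="${CLUSTER_ID:-00000000-0000-0000-0000-000000000000}"

# Write the ConfigMap that tells ceph-csi where the monitors are.
# Assumption: all three hosts run a mon on port 6789.
cat > csi-config-map.yaml <<EOF
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-config
data:
  config.json: |-
    [
      {
        "clusterID": "${CLUSTER_ID}",
        "monitors": [
          "192.168.2.100:6789",
          "192.168.2.101:6789",
          "192.168.2.102:6789"
        ]
      }
    ]
EOF
```

The same cluster ID has to be used later in the StorageClass, so keeping it in one shell variable avoids mismatches.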

kubectl apply -f csi-kms-config-map.yaml

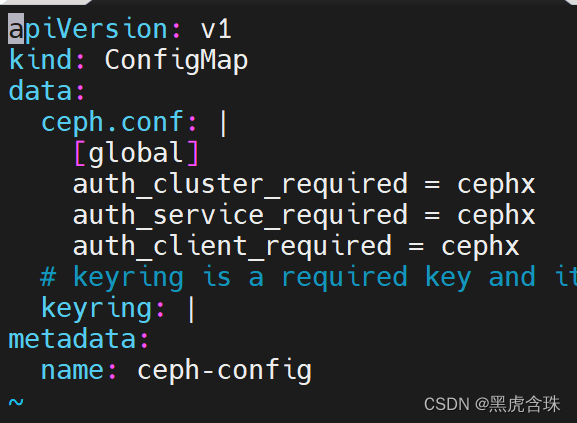
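The KMS ConfigMap is also shown only as an image. A minimal sketch, assuming no encryption KMS is in use, is an empty JSON object under the name `ceph-csi-encryption-kms-config` (the name used by the upstream ceph-csi manifests):

```shell
# Write an empty KMS configuration; assumption: volume encryption is not used.
cat > csi-kms-config-map.yaml <<'EOF'
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-encryption-kms-config
data:
  config.json: |-
    {}
EOF
```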

kubectl apply -f ceph-config-map.yaml

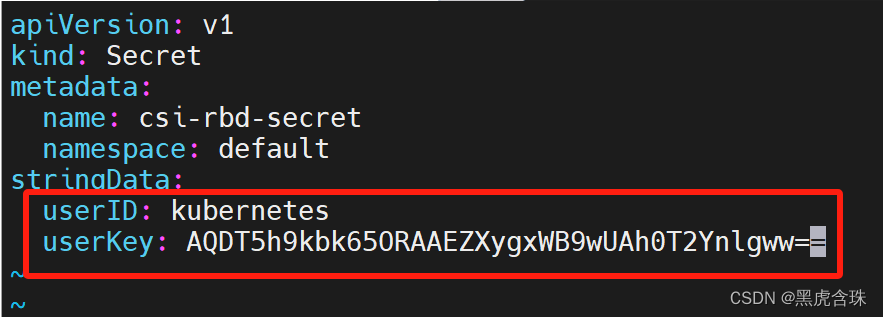
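For ceph-config-map.yaml, a plausible minimal version, modeled on the upstream ceph-csi example rather than taken from the screenshot, carries a small ceph.conf that enables cephx plus an empty keyring entry:

```shell
# Write the ConfigMap holding ceph.conf for the CSI containers.
# Assumption: cephx authentication is enabled on the cluster (the cephadm default).
cat > ceph-config-map.yaml <<'EOF'
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-config
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
EOF
```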

Create the Secret

kubectl apply -f csi-rbd-secret.yaml
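The article does not show csi-rbd-secret.yaml. The sketch below assumes a dedicated Ceph user named `kubernetes` restricted to the `kubernetes` pool, as in the Ceph documentation for Kubernetes; the user name, the Secret name `csi-rbd-secret`, the `default` namespace and the key placeholder are all assumptions to adapt.

```shell
# Assumption: the Ceph user was created on a cluster node with something like
#   ceph auth get-or-create client.kubernetes mon 'profile rbd' \
#     osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'
# and USER_KEY holds the key that command prints.
USER_ID="${USER_ID:-kubernetes}"
USER_KEY="${USER_KEY:-REPLACE_WITH_CEPH_KEY}"   # placeholder, not a real key

# Write the Secret consumed by the RBD provisioner and node plugin.
cat > csi-rbd-secret.yaml <<EOF
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: ${USER_ID}
  userKey: ${USER_KEY}
EOF
```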

Configure the ceph-csi plugin

kubectl apply -f deploy/rbd/kubernetes/csi-provisioner-rbac.yaml

kubectl apply -f deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

Modify csi-rbdplugin-provisioner.yaml

sed -i 's/cephcsi:canary/cephcsi:v3.8.0/g' csi-rbdplugin-provisioner.yaml

sed -i 's#registry.k8s.io/sig-storage#registry.aliyuncs.com/google_containers#g' csi-rbdplugin-provisioner.yaml

kubectl apply -f csi-rbdplugin-provisioner.yaml

sed -i 's/cephcsi:canary/cephcsi:v3.8.0/g' csi-rbdplugin.yaml

sed -i 's#registry.k8s.io/sig-storage#registry.aliyuncs.com/google_containers#g' csi-rbdplugin.yaml

kubectl apply -f csi-rbdplugin.yaml
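Both the registry pattern and its replacement contain `/`, so the sed expression needs a delimiter other than `/` to parse. The following sketch checks the substitution on a single sample line before touching the manifests; the sidecar image name and tag in `sample` are illustrative only.

```shell
# Illustrative input line; the real manifests contain several such image references.
sample='image: registry.k8s.io/sig-storage/csi-provisioner:v3.3.0'

# Use '#' as the sed delimiter so the slashes in the registry paths are literal.
rewritten=$(printf '%s\n' "$sample" |
  sed 's#registry.k8s.io/sig-storage#registry.aliyuncs.com/google_containers#g')

printf '%s\n' "$rewritten"
# prints: image: registry.aliyuncs.com/google_containers/csi-provisioner:v3.3.0
```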

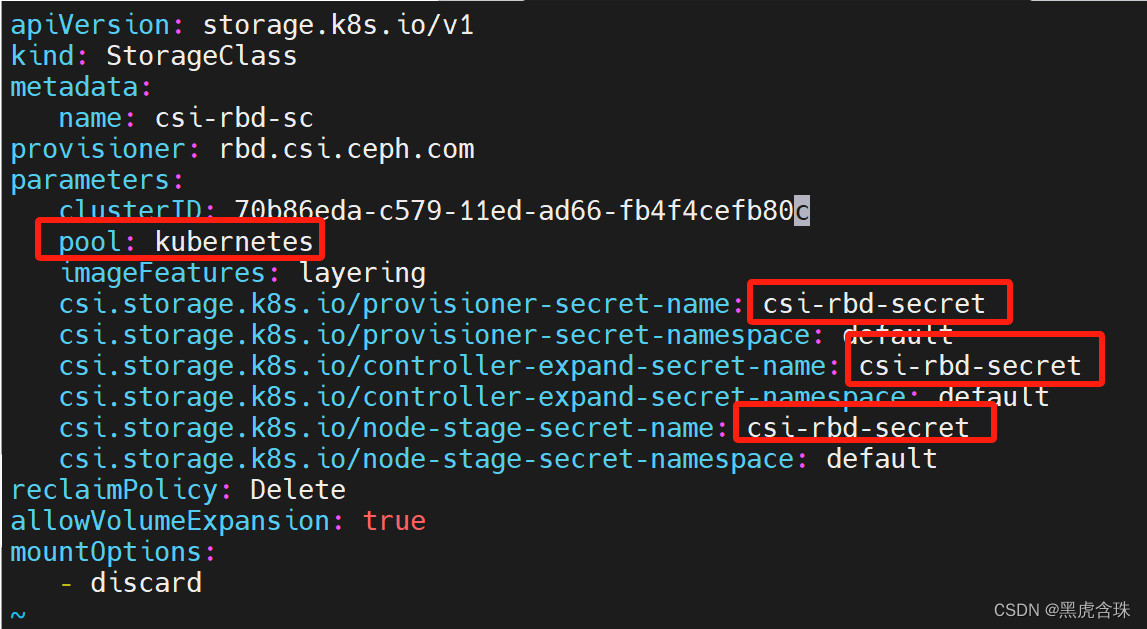

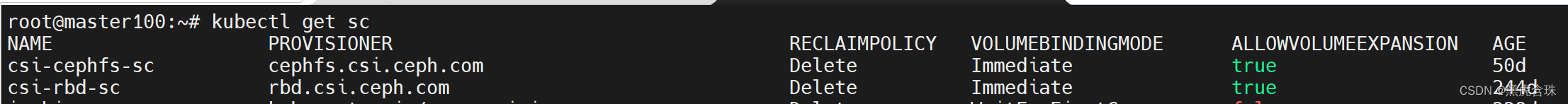

Create the StorageClass

ceph-csi-rbd-sc.yaml

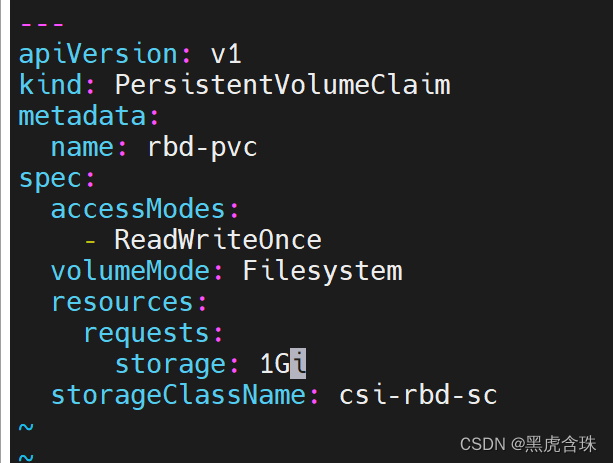
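Since the StorageClass manifest is visible only in the screenshot, here is a hedged sketch of what ceph-csi-rbd-sc.yaml might contain. It assumes the `kubernetes` pool created earlier, a Secret named `csi-rbd-secret` in the `default` namespace, and a StorageClass name `csi-rbd-sc` borrowed from the upstream examples; the cluster ID is a placeholder for the output of `ceph fsid`.

```shell
# Placeholder: must match the clusterID used in csi-config-map.yaml.
CLUSTER_ID="${CLUSTER_ID:-00000000-0000-0000-0000-000000000000}"

# Write a StorageClass that provisions RBD images from the "kubernetes" pool.
cat > ceph-csi-rbd-sc.yaml <<EOF
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: ${CLUSTER_ID}
  pool: kubernetes
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF

# Then, on a machine with cluster access:
#   kubectl apply -f ceph-csi-rbd-sc.yaml
```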

Create the PVC

ceph-csi-rbd-pvc.yaml

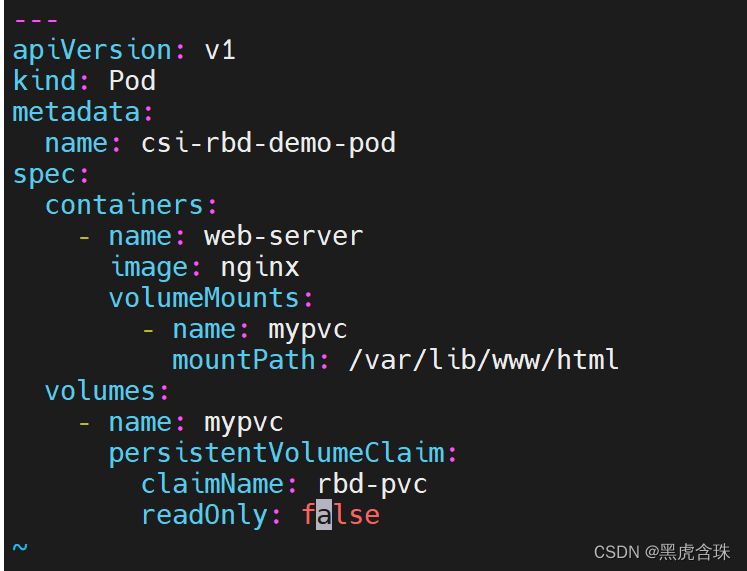

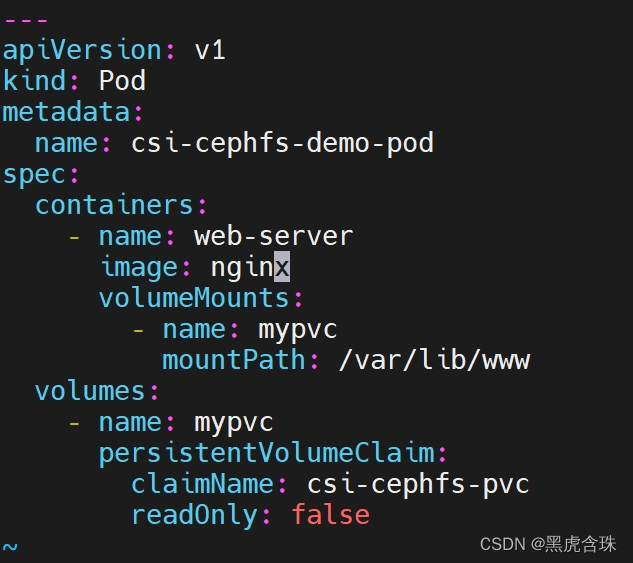
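A minimal sketch of ceph-csi-rbd-pvc.yaml, assuming a StorageClass named `csi-rbd-sc`; the claim name `rbd-pvc` and the 1Gi size are illustrative choices, not values taken from the screenshots.

```shell
# Write a filesystem-mode claim against the RBD StorageClass.
# RBD-backed filesystem volumes are normally mounted by one node at a time,
# hence ReadWriteOnce.
cat > ceph-csi-rbd-pvc.yaml <<'EOF'
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

# Then: kubectl apply -f ceph-csi-rbd-pvc.yaml && kubectl get pvc rbd-pvc
```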

Create the Pod

ceph-csi-rbd-pod.yaml

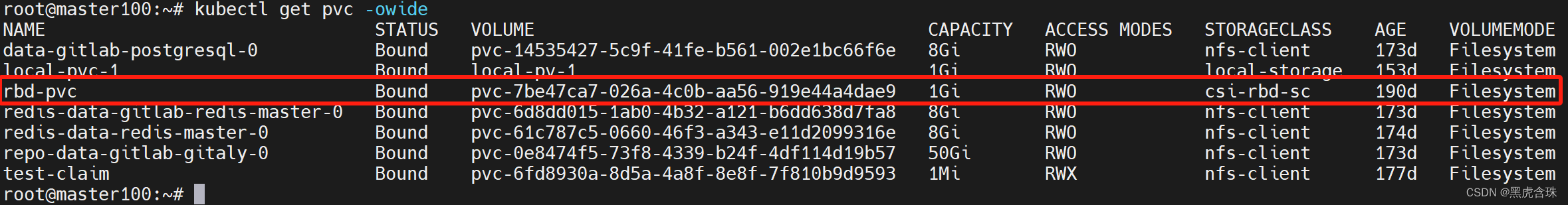
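A Pod that mounts the claim could look like the sketch below. The Pod name, the `nginx` image and the mount path are illustrative, and `rbd-pvc` is an assumed claim name that must match whatever the PVC manifest actually uses.

```shell
# Write a Pod that mounts the RBD-backed claim at /var/lib/www/html.
cat > ceph-csi-rbd-pod.yaml <<'EOF'
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF

# Then: kubectl apply -f ceph-csi-rbd-pod.yaml
```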

View the created PVC

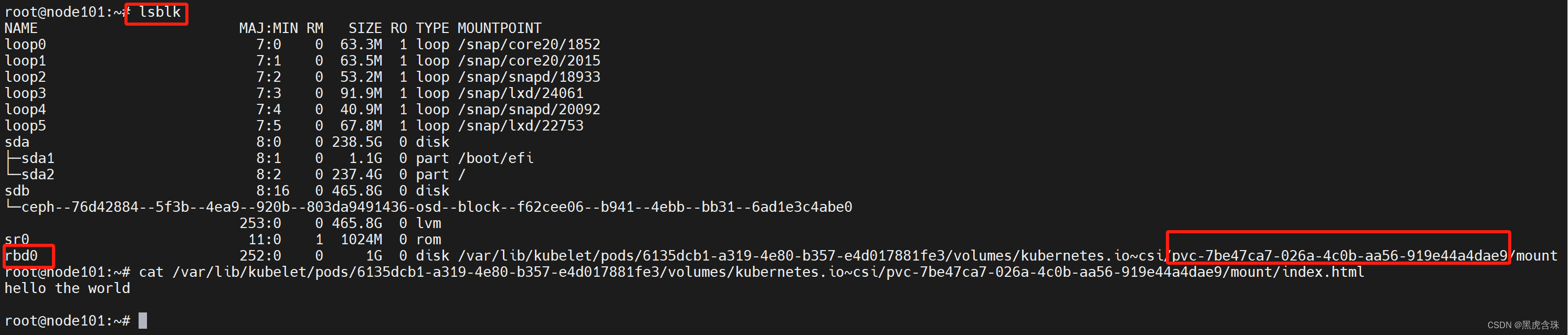

View the RBD devices

Create CephFS

ceph osd pool create cephfs_data 32 32

ceph osd pool create cephfs_metadata 32 32

ceph fs new cephfs cephfs_metadata cephfs_data

Generate the ceph-csi ConfigMap

Same as for RBD; these steps do not need to be run again.

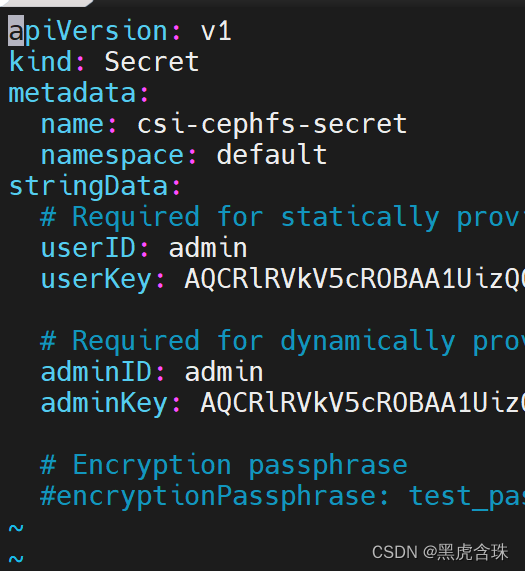

ceph-csi-cephfs-secret.yaml

kubectl apply -f ceph-csi-cephfs-secret.yaml
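The contents of ceph-csi-cephfs-secret.yaml are not shown in the text. One possible shape, following the upstream ceph-csi CephFS example, supplies admin credentials for dynamic provisioning. Using `client.admin`, the Secret name `csi-cephfs-secret` and the `default` namespace are assumptions; the key is a placeholder.

```shell
# Assumption: the admin key is used; it can be read on a cluster node with
#   ceph auth get-key client.admin
ADMIN_ID="${ADMIN_ID:-admin}"
ADMIN_KEY="${ADMIN_KEY:-REPLACE_WITH_CEPH_KEY}"   # placeholder, not a real key

# Write the Secret used by the CephFS provisioner and node plugin.
cat > ceph-csi-cephfs-secret.yaml <<EOF
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-cephfs-secret
  namespace: default
stringData:
  # Used for dynamically provisioned volumes
  adminID: ${ADMIN_ID}
  adminKey: ${ADMIN_KEY}
EOF
```

A less privileged Ceph user would be preferable outside a test environment.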

Configure the ceph-csi plugin

kubectl apply -f deploy/cephfs/kubernetes/csi-nodeplugin-rbac.yaml

kubectl apply -f deploy/cephfs/kubernetes/csi-provisioner-rbac.yaml

Modify csi-cephfsplugin-provisioner.yaml

sed -i 's/cephcsi:canary/cephcsi:v3.8.0/g' csi-cephfsplugin-provisioner.yaml

sed -i 's#registry.k8s.io/sig-storage#registry.aliyuncs.com/google_containers#g' csi-cephfsplugin-provisioner.yaml

kubectl apply -f csi-cephfsplugin-provisioner.yaml

Modify csi-cephfsplugin.yaml

sed -i 's/cephcsi:canary/cephcsi:v3.8.0/g' csi-cephfsplugin.yaml

sed -i 's#registry.k8s.io/sig-storage#registry.aliyuncs.com/google_containers#g' csi-cephfsplugin.yaml

kubectl apply -f csi-cephfsplugin.yaml

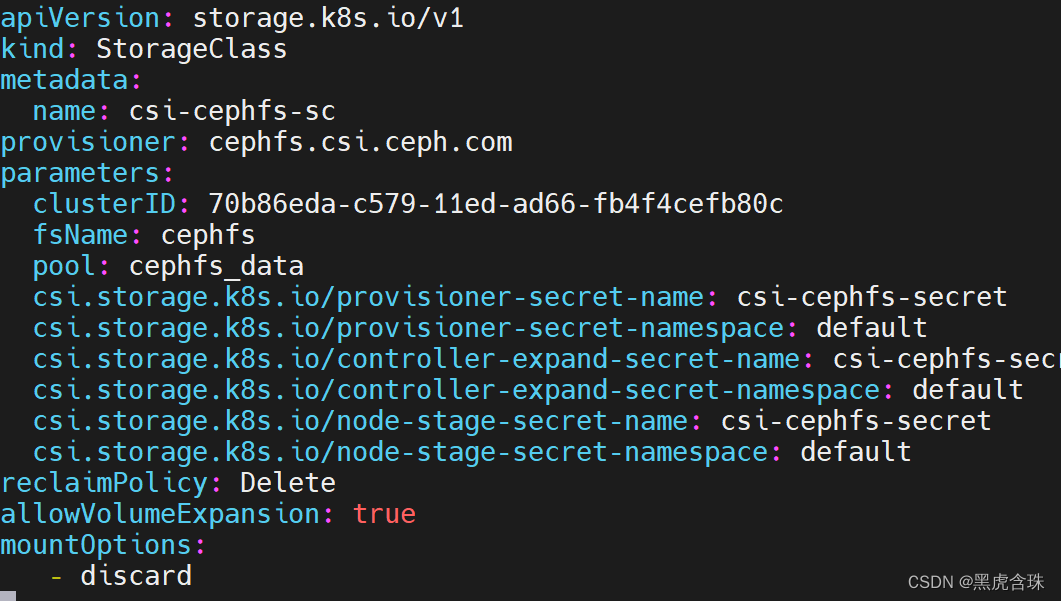

Create the StorageClass

ceph-csi-cephfs-sc.yaml
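The manifest itself is not included in the article. A sketch of ceph-csi-cephfs-sc.yaml, assuming the filesystem named `cephfs` created with `ceph fs new` above and a Secret named `csi-cephfs-secret` in the `default` namespace, might be generated as follows; the StorageClass name `csi-cephfs-sc` and the cluster ID placeholder are assumptions.

```shell
# Placeholder: must match the clusterID used in csi-config-map.yaml.
CLUSTER_ID="${CLUSTER_ID:-00000000-0000-0000-0000-000000000000}"

# Write a StorageClass that provisions subvolumes in the "cephfs" filesystem.
cat > ceph-csi-cephfs-sc.yaml <<EOF
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-cephfs-sc
provisioner: cephfs.csi.ceph.com
parameters:
  clusterID: ${CLUSTER_ID}
  fsName: cephfs
  csi.storage.k8s.io/provisioner-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-cephfs-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF

# Then: kubectl apply -f ceph-csi-cephfs-sc.yaml
```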

Create the PVC
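No manifest is given for this step. A hypothetical ceph-csi-cephfs-pvc.yaml (the file name, the claim name `cephfs-pvc` and the 1Gi size are assumptions) could request a shared volume from an assumed StorageClass `csi-cephfs-sc`; CephFS volumes can be mounted by several pods at once, so `ReadWriteMany` is used here.

```shell
# Write a shared (ReadWriteMany) claim against the CephFS StorageClass.
cat > ceph-csi-cephfs-pvc.yaml <<'EOF'
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-cephfs-sc
EOF

# Then: kubectl apply -f ceph-csi-cephfs-pvc.yaml
```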

Create the Pod
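As with the PVC, the Pod manifest is not shown. A hypothetical ceph-csi-cephfs-pod.yaml that mounts an assumed claim named `cephfs-pvc` might look like this; the file name, Pod name, image and mount path are all illustrative.

```shell
# Write a Pod that mounts the CephFS-backed claim.
cat > ceph-csi-cephfs-pod.yaml <<'EOF'
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-cephfs-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: cephfs-pvc
        readOnly: false
EOF

# Then: kubectl apply -f ceph-csi-cephfs-pod.yaml
```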

References

https://github.com/ceph/ceph-csi/blob/devel/docs/deploy-rbd.md

https://github.com/ceph/ceph-csi/blob/devel/docs/deploy-cephfs.md

Original article: https://blog.csdn.net/chenhaifeng2016/article/details/134620521
