This article covers: evaluating machine learning models

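Test error of a logistic regression model, averaged over the $m_{\text{test}}$ examples in the test set:

$$J_{\text{test}}(\vec{w},b) = -\frac{1}{m_{\text{test}}}\sum_{i=1}^{m_{\text{test}}}\left[y_{\text{test}}^{(i)}\log\left(f_{\vec{w},b}\left(\vec{x}_{\text{test}}^{(i)}\right)\right) + \left(1-y_{\text{test}}^{(i)}\right)\log\left(1-f_{\vec{w},b}\left(\vec{x}_{\text{test}}^{(i)}\right)\right)\right]$$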
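A minimal NumPy sketch of the test-error formula, assuming $f_{\vec{w},b}(\vec{x}) = g(\vec{w}\cdot\vec{x}+b)$ with $g$ the sigmoid function. The function name `logistic_test_error` and the `eps` clipping (a numerical guard against `log(0)`) are illustrative additions, not part of the original formula.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def logistic_test_error(w, b, X_test, y_test, eps=1e-15):
    """J_test(w, b): average cross-entropy of f(x) = sigmoid(w . x + b) over the test set."""
    f = sigmoid(X_test @ w + b)        # predicted probabilities, shape (m_test,)
    f = np.clip(f, eps, 1 - eps)       # numerical guard only; not part of the formula
    m_test = X_test.shape[0]
    return -np.sum(y_test * np.log(f) + (1 - y_test) * np.log(1 - f)) / m_test
```

With all-zero parameters every prediction is 0.5, so the test error is $\log 2 \approx 0.693$ regardless of the labels; a confident, correct model drives it toward 0.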

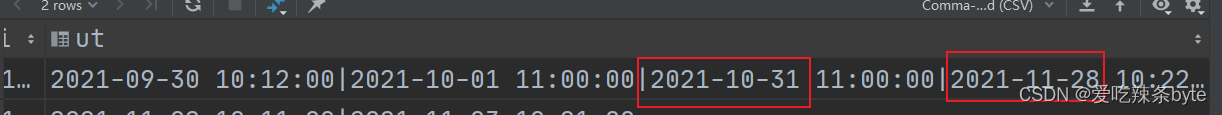

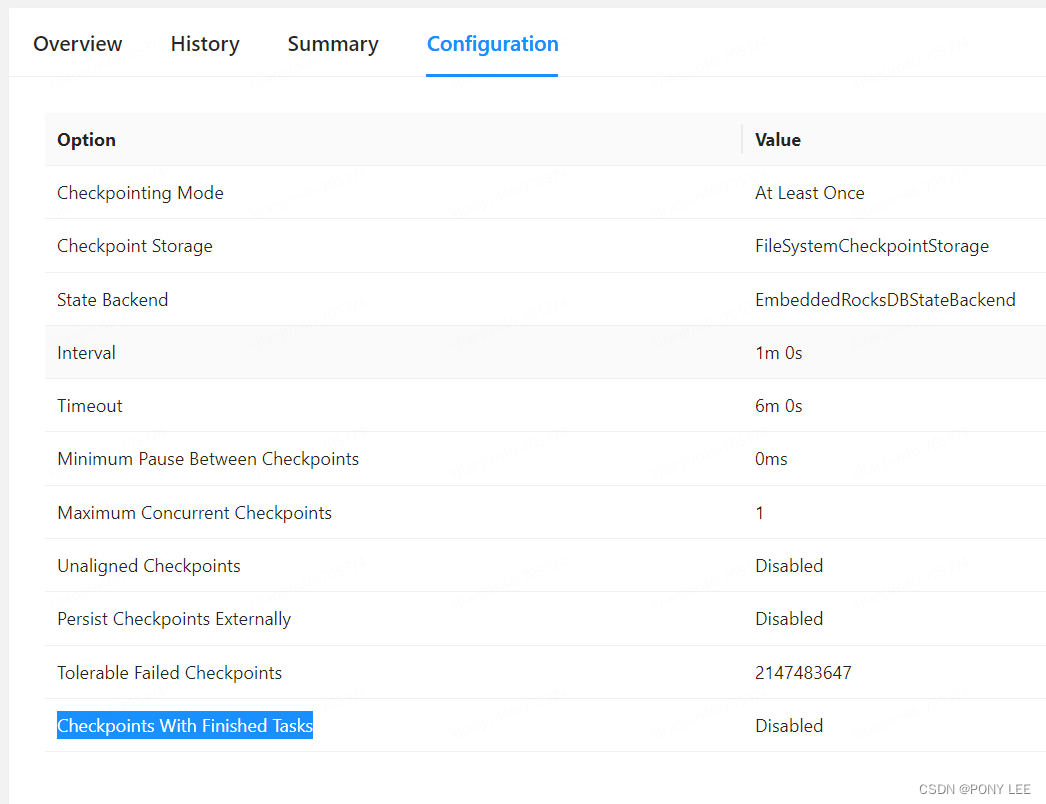

2 cross-validation set

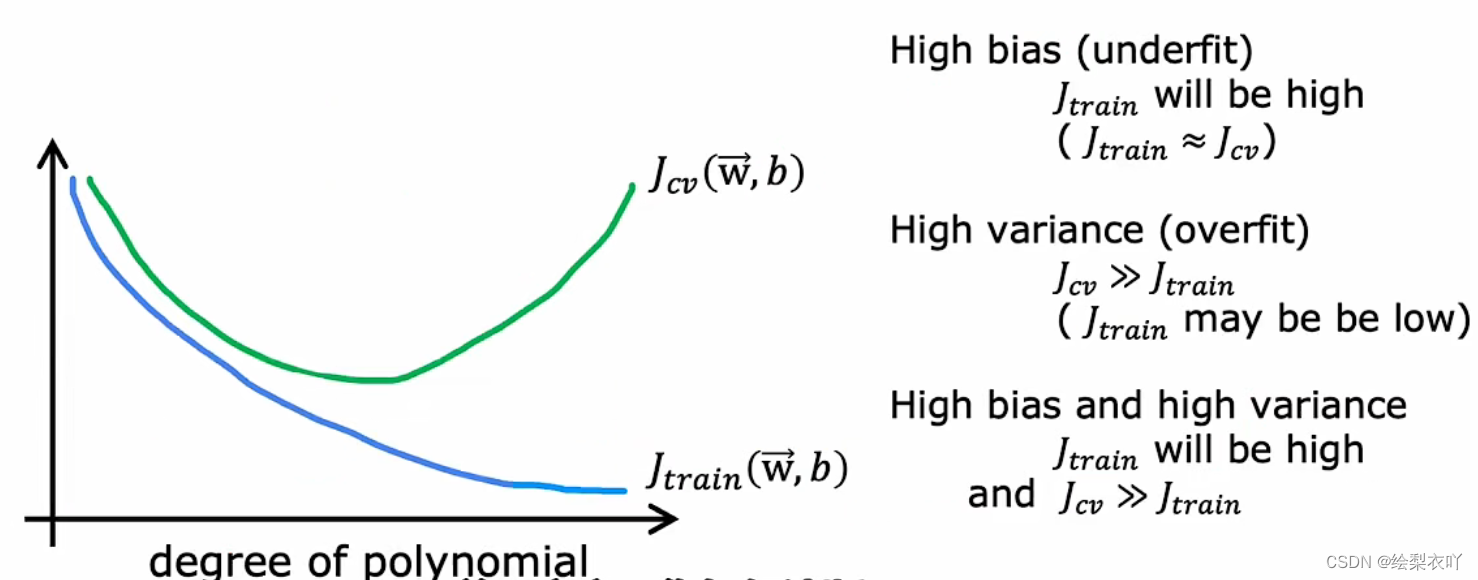
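A minimal sketch of how a cross-validation set can be used for model selection, assuming a one-feature polynomial regression model, a 60/20/20 train/cross-validation/test split, and the squared-error cost $J = \frac{1}{2m}\sum_i \left(f(x^{(i)}) - y^{(i)}\right)^2$. The helper names (`split_60_20_20`, `select_degree`) are hypothetical. Parameters are fit on the training set, the polynomial degree is chosen by the lowest cross-validation error, and the test set is used only once at the end to report a generalization estimate.

```python
import numpy as np

def split_60_20_20(x, y, seed=0):
    """Shuffle, then split into training (60%), cross-validation (20%) and test (20%) sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    n_train = int(round(0.6 * len(x)))
    n_cv = int(round(0.2 * len(x)))
    tr, cv, te = idx[:n_train], idx[n_train:n_train + n_cv], idx[n_train + n_cv:]
    return (x[tr], y[tr]), (x[cv], y[cv]), (x[te], y[te])

def squared_error_cost(coeffs, x, y):
    """J = 1/(2m) * sum((f(x) - y)^2) for a polynomial with np.polyfit-style coefficients."""
    return np.mean((np.polyval(coeffs, x) - y) ** 2) / 2

def select_degree(x, y, max_degree=10, seed=0):
    (x_tr, y_tr), (x_cv, y_cv), (x_te, y_te) = split_60_20_20(x, y, seed)
    fits, cv_errors = {}, {}
    for d in range(1, max_degree + 1):
        fits[d] = np.polyfit(x_tr, y_tr, d)                      # fit on training set only
        cv_errors[d] = squared_error_cost(fits[d], x_cv, y_cv)   # compare models on CV set
    best_d = min(cv_errors, key=cv_errors.get)
    j_test = squared_error_cost(fits[best_d], x_te, y_te)        # test set used once, at the end
    return best_d, cv_errors, j_test
```

Choosing the degree on the test set instead would make $J_{\text{test}}$ an optimistic estimate, because the test data would then have influenced the model choice.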

3 bias and variance

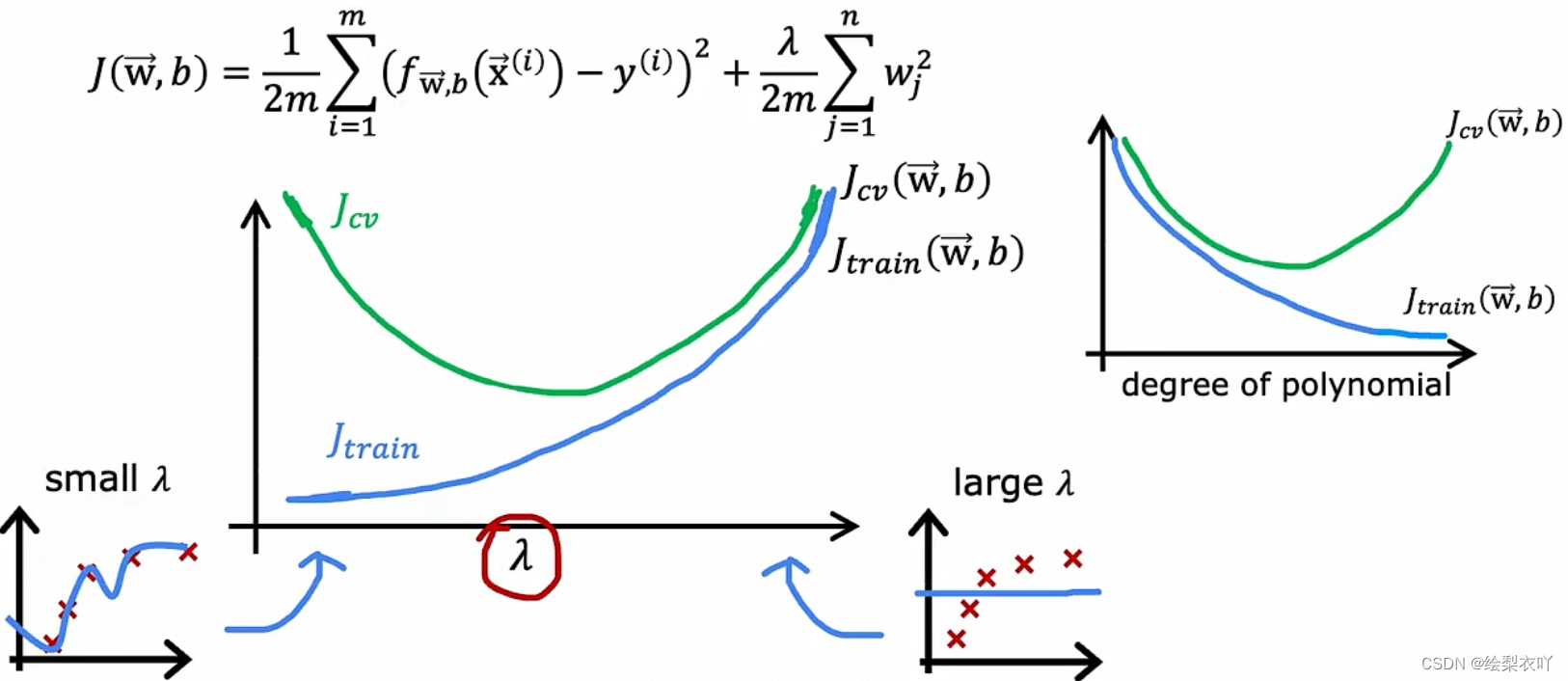
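A rough sketch of diagnosing bias and variance from the training error $J_{\text{train}}$ and cross-validation error $J_{\text{cv}}$: a high $J_{\text{train}}$ suggests high bias (underfitting), and $J_{\text{cv}}$ much larger than $J_{\text{train}}$ suggests high variance (overfitting). The `baseline` (an acceptable error level such as human-level performance) and the `gap_tol` threshold are assumptions for illustration; in practice what counts as "large" depends on the problem.

```python
def diagnose(j_train, j_cv, baseline=0.0, gap_tol=0.1):
    """Classify a model as high bias, high variance, both, or neither.

    baseline: assumed acceptable error level (defaults to 0).
    gap_tol: hypothetical threshold for a "large" gap.
    """
    high_bias = (j_train - baseline) > gap_tol      # training error far above the baseline
    high_variance = (j_cv - j_train) > gap_tol      # CV error far above training error
    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias (underfitting)"
    if high_variance:
        return "high variance (overfitting)"
    return "just right"
```

For example, `diagnose(0.01, 0.5)` reports high variance: the model fits the training data well but does not generalize to the cross-validation set.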

4 regularization
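A minimal sketch of choosing the regularization parameter $\lambda$ with the cross-validation set, assuming L2-regularized linear regression on polynomial features with cost $J(\vec{w},b) = \frac{1}{2m}\sum_i \left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right)^2 + \frac{\lambda}{2m}\lVert\vec{w}\rVert^2$, where $b$ is not penalized. The model is solved in closed form here for brevity (an assumption; gradient descent would work too), and the candidate $\lambda$ values passed in are up to the caller.

```python
import numpy as np

def poly_features(x, degree):
    """Columns x, x^2, ..., x^degree for a 1-D input; the bias b is kept separate."""
    return np.column_stack([x ** k for k in range(1, degree + 1)])

def fit_ridge(X, y, lam):
    """Minimize (1/2m)*||Xw + b - y||^2 + (lam/2m)*||w||^2 in closed form (b not penalized)."""
    m, n = X.shape
    Xb = np.column_stack([np.ones(m), X])    # prepend a column of ones for b
    penalty = lam * np.eye(n + 1)
    penalty[0, 0] = 0.0                      # no regularization on the bias term
    theta = np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)
    return theta[1:], theta[0]               # (w, b)

def cost(w, b, X, y):
    """Squared-error cost without the regularization term, used for J_train and J_cv."""
    return np.mean((X @ w + b - y) ** 2) / 2

def select_lambda(X_train, y_train, X_cv, y_cv, lambdas):
    """Fit one model per lambda on the training set; keep the lambda with the lowest J_cv."""
    cv_errors = [cost(*fit_ridge(X_train, y_train, lam), X_cv, y_cv) for lam in lambdas]
    best = int(np.argmin(cv_errors))
    return lambdas[best], cv_errors
```

A very small $\lambda$ leaves a flexible model free to overfit (high variance), while a very large $\lambda$ pushes $\vec{w}$ toward zero so the prediction is roughly the constant $b$ (high bias); the cross-validation error picks a value in between.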

5 method

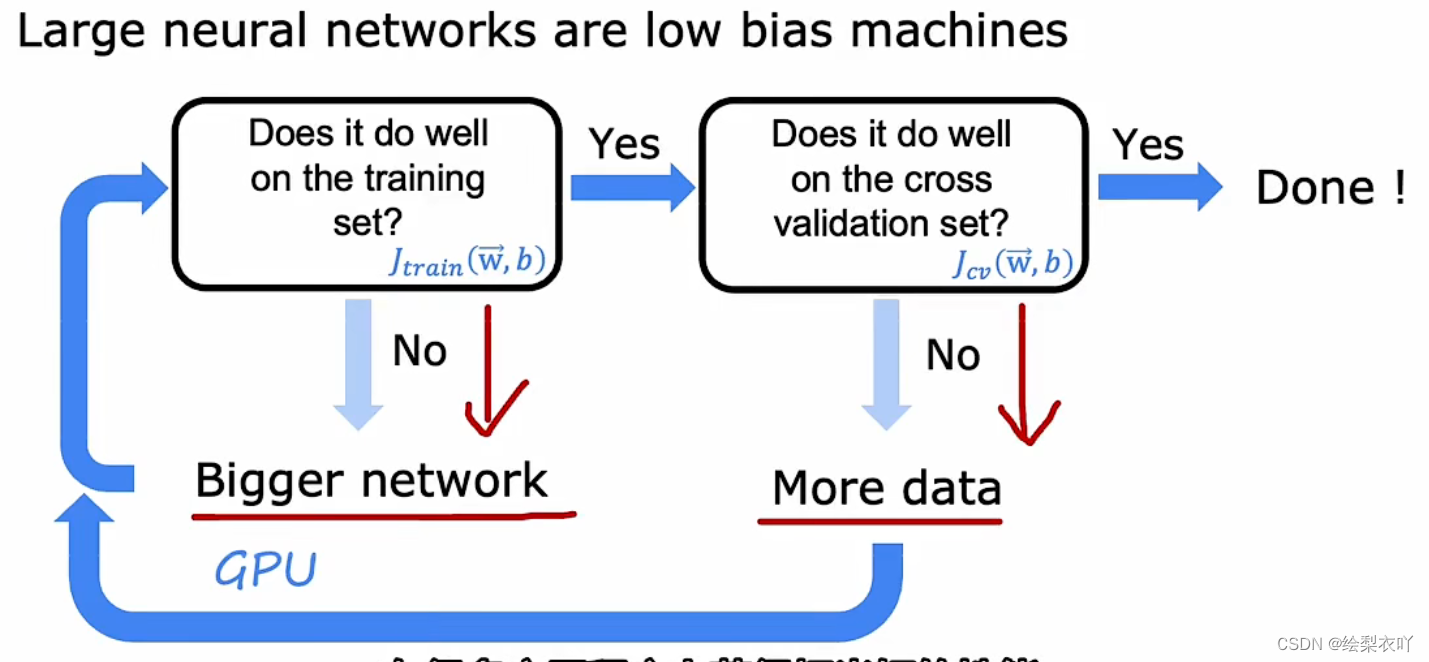

6 neural network and bias variance
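A widely taught recipe for neural networks, which tend to be low-bias models when they are large enough, is: if $J_{\text{train}}$ is high, try a bigger network; otherwise, if $J_{\text{cv}}$ is much higher than $J_{\text{train}}$, try getting more training data; repeat until both are acceptable. The sketch below assumes that recipe; `train_and_eval(units)` and `get_more_data()` are hypothetical callbacks standing in for real training code, and `target` and `gap_tol` are assumed thresholds.

```python
def nn_bias_variance_recipe(train_and_eval, get_more_data, start_units=8,
                            target=0.05, gap_tol=0.05, max_rounds=10):
    """Grow the network while J_train is high; add data while J_cv >> J_train.

    train_and_eval(units) -> (j_train, j_cv) and get_more_data() are caller-supplied,
    hypothetical stand-ins for real training code.
    """
    units = start_units
    for _ in range(max_rounds):
        j_train, j_cv = train_and_eval(units)
        if j_train > target:
            units *= 2                       # high bias: try a bigger network
        elif j_cv - j_train > gap_tol:
            get_more_data()                  # high variance: try more training data
        else:
            return units, j_train, j_cv      # both errors acceptable
    return None                              # no acceptable model within max_rounds
```

Doubling `units` is just one way to make the network bigger; adding layers would fit the same recipe.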
