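This article covers the following. When submitting the topology you must specify the stormStatics.jar package; if the new Storm installation package does not include it, request stormStatics.jar from the test department. ZooKeeper is configured with a username and password and with a whitelist of hosts allowed to connect. Configuring ACLs (username and password) on ZooKeeper alone works with both Storm and Kafka without modifying Storm code. The current Storm version cannot connect to Kafka with ACLs enabled; the Storm jar must be modified, and a supported version is pending from R&D. When Kafka ACLs are enabled, it is not yet clear how Storm and the ccod module should be configured with the username and password.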

Deploying the Storm statistics service with ZooKeeper, Kafka and Storm (SASL authentication) enabled

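- Current test and verification results:

- Configuring ACLs (username and password) on ZooKeeper alone works with Storm and Kafka without modifying Storm code.

- When Kafka ACLs are enabled, it is not yet clear how Storm and the ccod module should be configured with the username and password.

- A Python script connecting to Kafka with the username and password sends messages successfully.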

- Service versions in the current deployment environment

| Server IP | Module | Version |
|---|---|---|
| 10.130.41.42 | zookeeper | zookeeper-3.6.3 |
| 10.130.41.43 | kafka | kafka_2.11-2.3.1 |
| 10.130.41.44 | storm | apache-storm-1.2.4 |

ZooKeeper deployment

- Deploy ZooKeeper on the mongodb_1 server

- Upload the installation package and configuration files

[root@mongodb_1 ~]# su - storm

[storm@mongodb_1 ~]$ rz -be    # upload storm_node1.tar.gz

[storm@mongodb_1 ~]$ tar xvf storm_node1.tar.gz

[storm@mongodb_1 ~]$ cd storm_node1

[storm@mongodb_1 storm_node1]$ mv * .bash_profile ../

[storm@mongodb_1 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_1 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_1 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl
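Each `server.N` entry must use `=`, must be identical on all three nodes, and its number N must match the value written to `dataDir/myid` on that host. A minimal Python sketch that prints those lines and the matching `myid` command per host, assuming the hostnames and the 2182/3181 quorum and election ports used above (`server_lines` and `myid_for` are hypothetical helper names):

```python
# Hypothetical helper: renders the shared server.N lines of zoo.cfg and the
# myid each host needs. Hostnames and ports are taken from this article.
HOSTS = ["mongodb_1", "mongodb_2", "mongodb_3"]
PEER_PORT = 2182      # quorum (follower-to-leader) port
ELECTION_PORT = 3181  # leader election port
MYID_PATH = "/home/storm/zookeeper-3.6.3/data/myid"


def server_lines(hosts, peer_port=PEER_PORT, election_port=ELECTION_PORT):
    """Return 'server.N=host:peer:election' lines; identical on every node."""
    return [
        f"server.{n}={host}:{peer_port}:{election_port}"
        for n, host in enumerate(hosts, start=1)
    ]


def myid_for(host, hosts=HOSTS):
    """Return the myid for a host: its 1-based position in the server list."""
    return hosts.index(host) + 1


if __name__ == "__main__":
    print("\n".join(server_lines(HOSTS)))
    for host in HOSTS:
        print(f'{host}: echo "{myid_for(host)}" > {MYID_PATH}')
```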

[storm@mongodb_1 conf]$ vim zk_server_jaas.conf

Server {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="zk_cluster"

password="zk_cluster_passwd"

user_admin="Admin@123";

};
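In a JAAS file the options of a login module are separated by whitespace, only the last option carries the terminating `;`, and the section closes with `};`. The `user_<name>="<password>"` options list the accounts the server accepts. A small Python sketch that renders such a section so all three nodes can get identical files (`render_plain_jaas` is a hypothetical helper; the values are the ones used above):

```python
# Hypothetical helper: renders a JAAS section using Kafka's PlainLoginModule,
# in the same layout as zk_server_jaas.conf above.
LOGIN_MODULE = "org.apache.kafka.common.security.plain.PlainLoginModule"


def render_plain_jaas(section, username, password, users):
    """Build a JAAS section; `users` maps account name -> password."""
    options = [f'username="{username}"', f'password="{password}"']
    options += [f'user_{name}="{secret}"' for name, secret in users.items()]
    options[-1] += ";"  # only the last option terminates the module entry
    lines = [f"{section} {{", f"{LOGIN_MODULE} required", *options, "};"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_plain_jaas("Server", "zk_cluster", "zk_cluster_passwd",
                            {"admin": "Admin@123"}))
```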

# Copy the Kafka libraries that ZooKeeper needs for SASL (PlainLoginModule)

[storm@mongodb_1 ~]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_1 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_1 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_1 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_1 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_1 ~]$ echo "1" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_1 ~]$ cd ~/zookeeper-3.6.3/bin

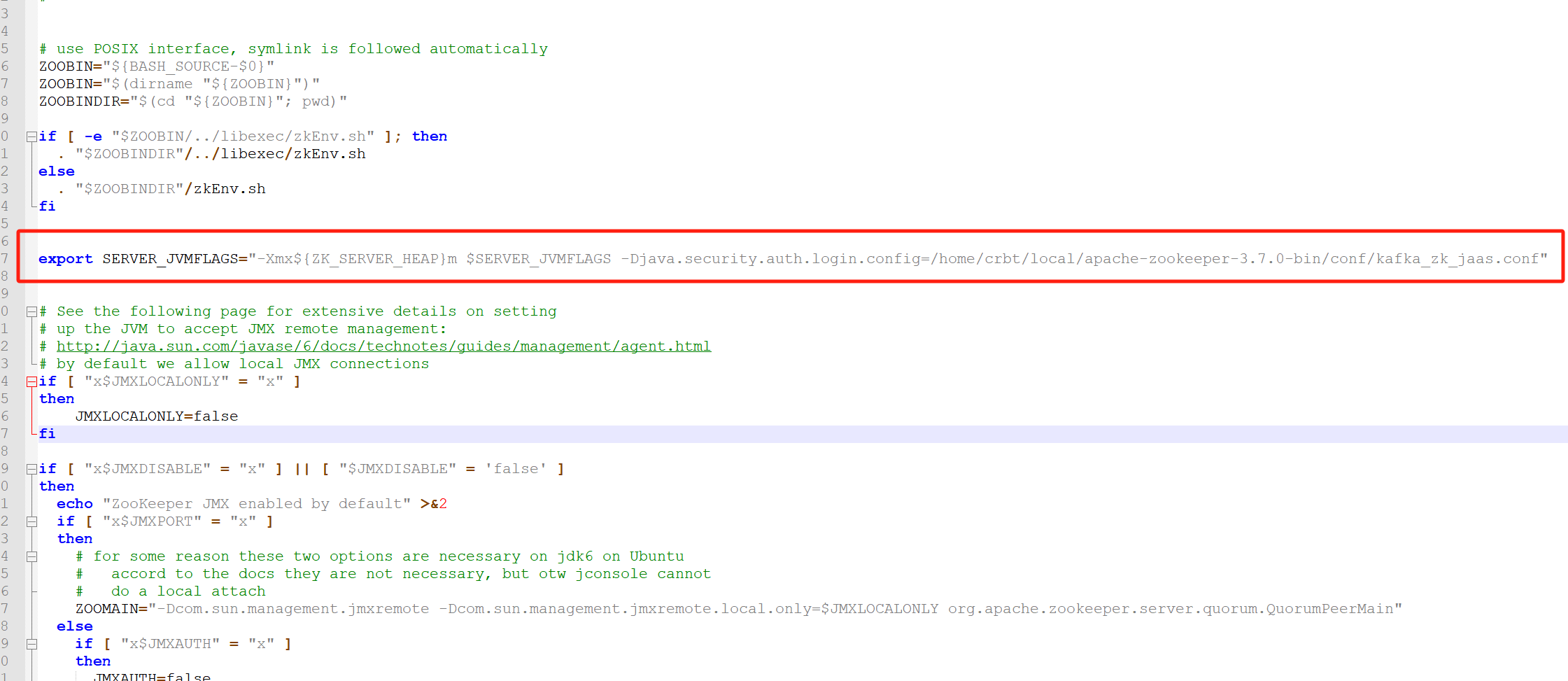

[storm@mongodb_1 bin]$ vim zkEnv.sh    # append the following at the end of the file

# Add SASL support to ZooKeeper

for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar;

do

CLASSPATH="$i:$CLASSPATH"

done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"
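The loop appended to zkEnv.sh only puts the `*.jar` files found in `zk_sasl_dependencies` on the classpath, so the Kafka jars have to be present in that directory before ZooKeeper starts. A minimal Python check, assuming the four jar names copied above (`missing_jars` is a hypothetical helper):

```python
from pathlib import Path

# Jars copied from kafka_2.11-2.3.1/libs in the step above.
REQUIRED_JARS = [
    "kafka-clients-2.3.1.jar",
    "lz4-java-1.6.0.jar",
    "slf4j-api-1.7.26.jar",
    "snappy-java-1.1.7.3.jar",
]


def missing_jars(directory, required=REQUIRED_JARS):
    """Return the required jar names that are not present in `directory`."""
    base = Path(directory).expanduser()
    if not base.is_dir():  # a missing directory means every jar is missing
        return list(required)
    present = {p.name for p in base.glob("*.jar")}
    return [jar for jar in required if jar not in present]


if __name__ == "__main__":
    missing = missing_jars("~/zookeeper-3.6.3/zk_sasl_dependencies")
    print("missing jars:", ", ".join(missing) if missing else "none")
```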

[storm@mongodb_1 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_1 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

- Deploy ZooKeeper on the mongodb_2 server

- Upload the installation package and configuration files

[root@mongodb_2 ~]# su - storm

[storm@mongodb_2 ~]$ rz -be    # upload storm_node2.tar.gz

[storm@mongodb_2 ~]$ tar xvf storm_node2.tar.gz

[storm@mongodb_2 ~]$ cd storm_node2

[storm@mongodb_2 storm_node2]$ mv * .bash_profile ../

[storm@mongodb_2 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_2 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_2 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

[storm@mongodb_2 conf]$ vim zk_server_jaas.conf

Server {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="zk_cluster"

password="zk_cluster_passwd"

user_admin="Admin@123";

};

# Copy the Kafka libraries that ZooKeeper needs for SASL (PlainLoginModule)

[storm@mongodb_2 ~]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_2 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_2 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_2 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_2 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_2 ~]$ echo "2" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_2 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_2 bin]$ vim zkEnv.sh    # append the following at the end of the file

# Add SASL support to ZooKeeper

for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar;

do

CLASSPATH="$i:$CLASSPATH"

done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"

[storm@mongodb_2 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_2 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

- Deploy ZooKeeper on the mongodb_3 server

- Upload the installation package and configuration files

[root@mongodb_3 ~]# su - storm

[storm@mongodb_3 ~]$ rz -be    # upload storm_node3.tar.gz

[storm@mongodb_3 ~]$ tar xvf storm_node3.tar.gz

[storm@mongodb_3 ~]$ cd storm_node3

[storm@mongodb_3 storm_node3]$ mv * .bash_profile ../

[storm@mongodb_3 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_3 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_3 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

[storm@mongodb_3 conf]$ vim zk_server_jaas.conf

Server {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="zk_cluster"

password="zk_cluster_passwd"

user_admin="Admin@123";

};

# Copy the Kafka libraries that ZooKeeper needs for SASL (PlainLoginModule)

[storm@mongodb_3 ~]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_3 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_3 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_3 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_3 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_3 ~]$ echo "3" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_3 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_3 bin]$ vim zkEnv.sh    # append the following at the end of the file

# Add SASL support to ZooKeeper

for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar;

do

CLASSPATH="$i:$CLASSPATH"

done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"

[storm@mongodb_3 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_3 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower
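Because `4lw.commands.whitelist=*` is set, the quorum state can also be checked without logging in to each host: ZooKeeper answers the four-letter command `srvr` on the client port with a `Mode:` line and then closes the connection. A minimal Python sketch, assuming port 2181 is reachable from where it runs and that four-letter commands remain available while SASL is required (`four_letter_word`, `parse_mode`, `quorum_ok` and `check_quorum` are hypothetical helper names):

```python
import socket


def four_letter_word(host, port=2181, cmd="srvr", timeout=5.0):
    """Send a ZooKeeper four-letter command and return the full reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply):
    """Extract the value of the 'Mode:' line (leader/follower/standalone)."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def quorum_ok(modes):
    """True if exactly one node is leader and all others are followers."""
    values = list(modes.values())
    return values.count("leader") == 1 and all(
        m in ("leader", "follower") for m in values
    )


def check_quorum(hosts, port=2181):
    """Query every host; unreachable hosts are reported with mode None."""
    modes = {}
    for host in hosts:
        try:
            modes[host] = parse_mode(four_letter_word(host, port))
        except OSError:  # includes connection refused and timeouts
            modes[host] = None
    return modes, quorum_ok(modes)
```

For example, `check_quorum(["mongodb_1", "mongodb_2", "mongodb_3"])` should report one `leader` and two `follower` entries, matching the `zkServer.sh status` output above.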

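- Set a username and password on ZooKeeper and configure the whitelist of hosts allowed to connect (if SASL authentication is already enabled, skip this ACL step).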

- Note: on the newly deployed platform, the following operations were not performed on ZooKeeper.

addauth

[storm@mongodb_2 bin]$ ./zkCli.sh -server mongodb_2:2181,mongodb_1:2181,mongodb_3:2181

[zk: localhost:2181(CONNECTED) 0] addauth digest admin:123456    # admin is the username, 123456 is the password

[zk: localhost:2181(CONNECTED) 1] setAcl / auth:admin:123456:cdrwa

[zk: localhost:2181(CONNECTED) 2] getAcl /

'digest,'admin:0uek/hZ/V9fgiM35b0Z2226acMQ=

: cdrwa

[storm@mongodb_2 bin]$ ./zkCli.sh    # after connecting, every new session must first run: addauth digest admin:123456

[zk: localhost:2181(CONNECTED) 0] ls /

Insufficient permission : /

[zk: localhost:2181(CONNECTED) 1] addauth digest admin:123456

[zk: localhost:2181(CONNECTED) 2] ls /

[test, zookeeper]

[zk: localhost:2181(CONNECTED) 8] setAcl / ip:10.130.41.42:cdrwa,ip:10.130.41.43:cdrwa,ip:10.130.41.44:cdrwa,ip:10.130.41.86:cdrwa,ip:10.130.41.119:cdrwa,ip:127.0.0.1:cdrwa,auth:kafka:cdrwa    # whitelist of hosts allowed to connect to ZooKeeper
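The `digest` scheme identifies a user as `user:base64(sha1("user:password"))`, which is the value `getAcl` prints for the `admin` entry. A minimal Python sketch of that hash, plus a hypothetical helper that assembles the comma-separated list passed to `setAcl`; the explicit `digest:` entry in the usage line is one way to keep a password-based account next to the IP whitelist (`zk_digest` and `whitelist_acl` are illustrative names, not ZooKeeper APIs):

```python
import base64
import hashlib


def zk_digest(user, password):
    """Return the id used by ZooKeeper's digest scheme for user:password."""
    sha1 = hashlib.sha1(f"{user}:{password}".encode("utf-8")).digest()
    return f"{user}:{base64.b64encode(sha1).decode('ascii')}"


def whitelist_acl(ips, perms="cdrwa", extra=()):
    """Build an ACL list string: one ip: entry per host plus any extra entries."""
    entries = [f"ip:{ip}:{perms}" for ip in ips]
    entries.extend(extra)
    return ",".join(entries)


if __name__ == "__main__":
    hosts = ["10.130.41.42", "10.130.41.43", "10.130.41.44", "127.0.0.1"]
    admin = f"digest:{zk_digest('admin', '123456')}:cdrwa"
    print("setAcl / " + whitelist_acl(hosts, extra=[admin]))
```

Comparing `zk_digest("admin", "123456")` with the hash shown by `getAcl` is a quick way to confirm which password an ACL entry was created with.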

Initializing the Storm configuration

- Log in to any one of the three ZooKeeper nodes

[storm@mongodb_1 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_1 bin]$ ./zkCli.sh

[zk: localhost:2181(CONNECTED) 2] addauth digest admin:123456

create /storm config

create /storm/config 1

create /umg_cloud cluster

create /umg_cloud/cluster 1

create /umg_cloud/cfg 1

create /umg_cloud/cfg/notify 1

create /storm/config/ENT_CALL_RECORD_LIST 0103290030,0103290035,0103290023,0103290021,0103290022,0103290031

create /storm/config/KAFKA_BROKER_LIST mongodb_1:9092,mongodb_2:9092,mongodb_3:9092

create /storm/config/KAFKA_ZOOKEEPER_CONNECT mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

create /storm/config/MAX_SPOUT_PENDING 100

create /storm/config/MONGO_ADMIN admin

create /storm/config/MONGO_BLOCKSIZE 100

create /storm/config/MONGO_HOST mongodb_1:30000;mongodb_2:30000;mongodb_3:30000

create /storm/config/MONGO_NAME ""

create /storm/config/MONGO_PASSWORD ""

create /storm/config/MONGO_POOLSIZE 100

create /storm/config/MONGO_SWITCH 0

create /storm/config/OFF_ON_DTS 0

create /storm/config/REDIS_HOST mongodb_3:27381

create /storm/config/REDIS_PASSWORD ""

create /storm/config/SEND_MESSAGE 0

create /storm/config/TOPO_COMPUBOLT_NUM 4

create /storm/config/TOPO_MONGOBOLT_NUM 16

create /storm/config/TOPO_SPOUT_NUM 3

create /storm/config/TOPO_WORK_NUM 2

create /storm/config/ENTID_MESSAGE 1

create /storm/config/MESSAGE_TOPIC 1
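Typing nearly thirty `create` commands by hand is error-prone. A Python sketch that emits the same commands from a dictionary, so they can be reviewed and pasted into the `zkCli.sh` session; the values are copied from the list above, empty values are written as `""` as in the original commands, and `create_commands`, `PARENTS` and `STORM_CONFIG` are hypothetical names:

```python
# Parent znodes and their data, in creation order (from the commands above).
PARENTS = [
    ("/storm", "config"),
    ("/storm/config", "1"),
    ("/umg_cloud", "cluster"),
    ("/umg_cloud/cluster", "1"),
    ("/umg_cloud/cfg", "1"),
    ("/umg_cloud/cfg/notify", "1"),
]

# Children of /storm/config; an empty string means "no value".
STORM_CONFIG = {
    "ENT_CALL_RECORD_LIST": "0103290030,0103290035,0103290023,0103290021,0103290022,0103290031",
    "KAFKA_BROKER_LIST": "mongodb_1:9092,mongodb_2:9092,mongodb_3:9092",
    "KAFKA_ZOOKEEPER_CONNECT": "mongodb_1:2181,mongodb_2:2181,mongodb_3:2181",
    "MAX_SPOUT_PENDING": "100",
    "MONGO_ADMIN": "admin",
    "MONGO_BLOCKSIZE": "100",
    "MONGO_HOST": "mongodb_1:30000;mongodb_2:30000;mongodb_3:30000",
    "MONGO_NAME": "",
    "MONGO_PASSWORD": "",
    "MONGO_POOLSIZE": "100",
    "MONGO_SWITCH": "0",
    "OFF_ON_DTS": "0",
    "REDIS_HOST": "mongodb_3:27381",
    "REDIS_PASSWORD": "",
    "SEND_MESSAGE": "0",
    "TOPO_COMPUBOLT_NUM": "4",
    "TOPO_MONGOBOLT_NUM": "16",
    "TOPO_SPOUT_NUM": "3",
    "TOPO_WORK_NUM": "2",
    "ENTID_MESSAGE": "1",
    "MESSAGE_TOPIC": "1",
}


def create_commands(parents, config, root="/storm/config", auth=None):
    """Return zkCli.sh commands; `auth` is an optional 'user:password'."""
    cmds = [f"addauth digest {auth}"] if auth else []
    cmds += [f"create {path} {data}" for path, data in parents]
    for key, value in config.items():
        data = value if value else '""'  # keep the explicit "" used above
        cmds.append(f"create {root}/{key} {data}")
    return cmds


if __name__ == "__main__":
    print("\n".join(create_commands(PARENTS, STORM_CONFIG, auth="admin:123456")))
```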

Kafka deployment

- Deploy Kafka on the mongodb_1 server

[storm@mongodb_1 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_1 config]$ cat server.properties

broker.id=1

listeners=SASL_PLAINTEXT://10.130.41.42:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm/kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

zookeeper.defaultAcl=root;123456:rwcd

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0
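Only three settings in server.properties need per-broker values here: `broker.id` must be unique in the cluster, `listeners` must carry the broker's own address, and `zookeeper.connect` should list all three ZooKeeper nodes. A Python sketch that derives them per host, assuming mongodb_1, mongodb_2 and mongodb_3 map to 10.130.41.42, .43 and .44 (the addresses listed in storm.yaml); `broker_overrides` is a hypothetical helper:

```python
# Assumed host-to-IP mapping (taken from the ZooKeeper server list in storm.yaml).
BROKERS = [
    ("mongodb_1", "10.130.41.42"),
    ("mongodb_2", "10.130.41.43"),
    ("mongodb_3", "10.130.41.44"),
]
ZK_CONNECT = "mongodb_1:2181,mongodb_2:2181,mongodb_3:2181"


def broker_overrides(brokers, zk_connect=ZK_CONNECT, port=9092):
    """Return {host: {property: value}} for the per-broker settings."""
    overrides = {}
    for broker_id, (host, ip) in enumerate(brokers, start=1):
        overrides[host] = {
            "broker.id": str(broker_id),
            "listeners": f"SASL_PLAINTEXT://{ip}:{port}",
            "zookeeper.connect": zk_connect,
        }
    return overrides


if __name__ == "__main__":
    for host, props in broker_overrides(BROKERS).items():
        print(f"# {host}")
        for key, value in props.items():
            print(f"{key}={value}")
```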

[storm@mongodb_1 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_1 config]$ cat kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafkacluster"

password="Admin@123"

user_kafkacluster="Admin@123"

user_admin="Admin@123"

};

Client{

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Admin@123";

};

[storm@mongodb_1 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/logs

[storm@mongodb_1 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_1 bin]$ vim kafka-server-start.sh    # add the line below so the broker loads kafka_server_jaas.conf at startup

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_1 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties

- Deploy Kafka on the mongodb_2 server

[storm@mongodb_2 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_2 config]$ cat server.properties

broker.id=2

listeners=SASL_PLAINTEXT://10.130.41.43:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm/kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

zookeeper.defaultAcl=root;123456:rwcd

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0

[storm@mongodb_2 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_2 config]$ cat kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafkacluster"

password="Admin@123"

user_kafkacluster="Admin@123"

user_admin="Admin@123"

};

Client{

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Admin@123";

};

[storm@mongodb_2 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/logs

[storm@mongodb_2 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_2 bin]$ vim kafka-server-start.sh    # add the line below so the broker loads kafka_server_jaas.conf at startup

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_2 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties

- Deploy Kafka on the mongodb_3 server

[storm@mongodb_3 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_3 config]$ cat server.properties

broker.id=3

listeners=SASL_PLAINTEXT://10.130.41.44:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm/kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

zookeeper.defaultAcl=root;123456:rwcd

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0

[storm@mongodb_3 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_3 config]$ cat kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafkacluster"

password="Admin@123"

user_kafkacluster="Admin@123"

user_admin="Admin@123"

};

Client{

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Admin@123";

};

[storm@mongodb_3 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/logs

[storm@mongodb_3 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_3 bin]$ vim kafka-server-start.sh    # add the line below so the broker loads kafka_server_jaas.conf at startup

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_3 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties

- Create the topics (run this on any one of the Kafka brokers)

[storm@mongodb_1 ~]$ cd kafka_2.11-2.3.1/

[storm@mongodb_1 kafka_2.11-2.3.1]$ bin/kafka-topics.sh --create --zookeeper mongodb_1:2181,mongodb_2:2181,mongodb_3:2181 --replication-factor 3 --partitions 1 --topic ent_record_fastdfs_url

- Configure ACLs for the topic

[storm@mongodb_1 bin]$ ./kafka-acls.sh --authorizer-properties zookeeper.connect=10.130.41.43:2181 --add --allow-principal User:admin --producer --topic ent_record_fastdfs_url

- List the topics that have ACLs configured

[storm@mongodb_1 bin]$ ./kafka-acls.sh --authorizer-properties zookeeper.connect=10.130.41.43:2181 --list

Current ACLs for resource `Topic:LITERAL:ent_record_fastdfs_url`:

User:admin has Allow permission for operations: Describe from hosts: *

User:admin has Allow permission for operations: Create from hosts: *

User:admin has Allow permission for operations: Write from hosts: *
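The test results at the top mention a Python script that sends messages with the Kafka username and password; that script is not included. A hypothetical equivalent, assuming the third-party `kafka-python` package (`pip install kafka-python`) and reusing the credentials from `config/sasl.conf`: `parse_properties` reads the simple `key=value` file and `kafka_python_config` maps it to `KafkaProducer` keyword arguments. Only `send_test_message` needs the package and a reachable broker:

```python
import re

# Assumes the third-party kafka-python package for send_test_message only.


def parse_properties(text):
    """Parse simple Java-style key=value lines, skipping blanks and comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")  # split at the first '=' only
        props[key.strip()] = value.strip()
    return props


def kafka_python_config(props, bootstrap_servers):
    """Map sasl.conf properties to kafka-python KafkaProducer arguments."""
    jaas = props.get("sasl.jaas.config", "")
    user = re.search(r'username="([^"]*)"', jaas)
    password = re.search(r'password="([^"]*)"', jaas)
    if user is None or password is None:
        raise ValueError("sasl.jaas.config must contain username and password")
    return {
        "bootstrap_servers": bootstrap_servers,
        "security_protocol": props["security.protocol"],
        "sasl_mechanism": props["sasl.mechanism"],
        "sasl_plain_username": user.group(1),
        "sasl_plain_password": password.group(1),
    }


def send_test_message(config, topic, payload=b"ping", timeout=10):
    """Send one message and wait for the broker acknowledgement."""
    from kafka import KafkaProducer  # imported lazily: third-party dependency

    producer = KafkaProducer(**config)
    try:
        producer.send(topic, payload).get(timeout=timeout)
    finally:
        producer.close()
```

For example, build `cfg` from the contents of `sasl.conf` with `["10.130.41.42:9092"]` as bootstrap servers, then call `send_test_message(cfg, "ent_record_fastdfs_url")`. The `admin` user holds the producer ACL on this topic (and is listed in `super.users`), so the send should be authorized.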

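Storm deployment

- The current version does not support connecting to Kafka with ACLs enabled; the Storm jar must be modified, and a supported version is pending from R&D.

- The configuration below already supports connecting to ZooKeeper with ACLs enabled.

- Deploy Storm on the mongodb_1 server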

[storm@mongodb_1 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_1 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:

- "10.130.41.42"

- "10.130.41.43"

- "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

- 6704

- 6705

- 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'
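A YAML mapping key should appear only once; depending on the parser, a repeated top-level key such as `worker.childopts` is either rejected or silently overrides the earlier value. A minimal Python check to run before starting nimbus, assuming top-level keys are unindented as in this storm.yaml (`duplicate_top_level_keys` is a hypothetical helper and not a full YAML parser):

```python
import os
import re

# Matches a top-level (unindented) mapping key such as "worker.childopts:".
TOP_LEVEL_KEY = re.compile(r"^([A-Za-z0-9_.\-]+):")


def duplicate_top_level_keys(yaml_text):
    """Return top-level keys that appear more than once, in order of first repeat."""
    seen, duplicates = set(), []
    for line in yaml_text.splitlines():
        match = TOP_LEVEL_KEY.match(line)
        if not match:  # list items, indented lines and comments are skipped
            continue
        key = match.group(1)
        if key in seen and key not in duplicates:
            duplicates.append(key)
        seen.add(key)
    return duplicates


if __name__ == "__main__":
    path = os.path.expanduser("~/apache-storm-1.2.4/conf/storm.yaml")
    try:
        with open(path, encoding="utf-8") as fh:
            print(duplicate_top_level_keys(fh.read()) or "no duplicate keys")
    except FileNotFoundError:
        print(f"{path} not found")
```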

[storm@mongodb_1 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_1 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_1 bin]$ nohup ./storm nimbus &

[storm@mongodb_1 bin]$ nohup ./storm ui &

[storm@mongodb_1 bin]$ nohup ./storm supervisor &

[storm@mongodb_1 bin]$ jps    # check that the daemons are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus
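The jps listing can be checked mechanically on each node. A Python sketch, assuming every node should run ZooKeeper (`QuorumPeerMain`), Kafka, nimbus, the supervisor and the UI (which appears as `core` in the listing above); `running_daemons` and `missing_daemons` are hypothetical helpers:

```python
import subprocess

# Daemons expected on each node in this deployment; the Storm UI shows up as "core".
EXPECTED = {"QuorumPeerMain", "Kafka", "nimbus", "Supervisor", "core"}


def running_daemons(jps_output):
    """Return the main-class names from jps output ("<pid> <name>" per line)."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output, expected=EXPECTED):
    """Return the expected daemons absent from the jps output, sorted."""
    return sorted(expected - running_daemons(jps_output))


if __name__ == "__main__":
    try:
        out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        print(f"could not run jps: {exc}")
    else:
        print("missing:", missing_daemons(out) or "none")
```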

- Deploy Storm on the mongodb_2 server

[storm@mongodb_2 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_2 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:

- "10.130.41.42"

- "10.130.41.43"

- "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

- 6704

- 6705

- 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'

[storm@mongodb_2 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_2 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_2 bin]$ nohup ./storm nimbus &

[storm@mongodb_2 bin]$ nohup ./storm ui &

[storm@mongodb_2 bin]$ nohup ./storm supervisor &

[storm@mongodb_2 bin]$ jps    # check that the daemons are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus

- Deploy Storm on the mongodb_3 server

[storm@mongodb_3 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_3 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:

- "10.130.41.42"

- "10.130.41.43"

- "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

- 6704

- 6705

- 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'

[storm@mongodb_3 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_3 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_3 bin]$ nohup ./storm nimbus &

[storm@mongodb_3 bin]$ nohup ./storm ui &

[storm@mongodb_3 bin]$ nohup ./storm supervisor &

[storm@mongodb_3 bin]$ jps    # check that the daemons are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus

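- Submit the topology on the first server, where the Storm master node (nimbus) runs

- The stormStatics.jar package must be specified when submitting the topology; if the new Storm installation package does not include it, request stormStatics.jar from the test department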

[storm@mongodb_1 bin]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_1 bin]$ ./storm jar ../lib/stormStatics.jar com.channelsoft.stormStatics.topology.FastdfsUrlTopology FastdfsUrlTopology mongodb_1,mongodb_2,mongodb_3 2181 ent_record_fastdfs_url /brokers

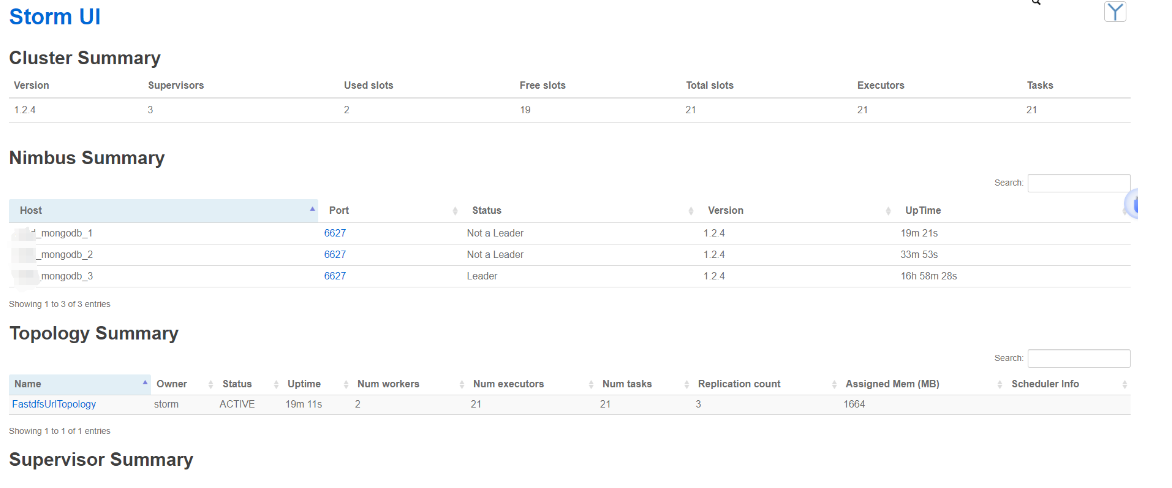

- Use the Storm UI to check that the newly submitted topology is running normally

- The UI is served on port 8888 of the first server's IP
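The same check can be scripted against the Storm UI REST API, whose `/api/v1/topology/summary` endpoint returns a JSON object with a `topologies` list carrying each topology's `name` and `status`. A minimal Python sketch using only the standard library; `fetch_topology_summary` and `topology_status` are hypothetical helper names:

```python
import json
import urllib.request


def fetch_topology_summary(ui_host, port=8888, timeout=10):
    """GET the topology summary from the Storm UI REST API."""
    url = f"http://{ui_host}:{port}/api/v1/topology/summary"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def topology_status(summary, name):
    """Return the status (e.g. ACTIVE) of the named topology, or None if absent."""
    for topology in summary.get("topologies", []):
        if topology.get("name") == name:
            return topology.get("status")
    return None
```

For example, `topology_status(fetch_topology_summary("10.130.41.42"), "FastdfsUrlTopology")` should return `"ACTIVE"` once the topology is running, assuming mongodb_1 is 10.130.41.42 and the topology was registered under the name passed to `storm jar`.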


Original article: https://blog.csdn.net/zhao138969/article/details/135670251
