This article covers examples of using loss functions in PyTorch, and how backward() is used on the result of a loss function.

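Loss functions

torch provides many loss functions; see the Loss Functions section of the official documentation.

- Purpose:

- Measure the gap between the actual output and the target output

- Provide a basis (the gradients, grad) for updating the network's parameters through backpropagation

Loss functions are all used in much the same way, so L1Loss and MSELoss serve as the examples here.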

- L1Loss

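Note that the data passed in must be of a float type, otherwise an error is raised, so a dtype is set explicitly where inputs and outputs are created.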
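The float requirement above can be illustrated with a minimal sketch. The names `raw_inputs` and `raw_targets` are hypothetical and used only for illustration; the sketch assumes the data starts out as integers and shows two equivalent ways to convert it before calling the loss.

```python
import torch
from torch.nn import L1Loss

# Integer literals produce an int64 tensor by default
raw_inputs = torch.tensor([1, 2, 3])
raw_targets = torch.tensor([1, 2, 5])
print(raw_inputs.dtype)

# Convert to float32 before passing the tensors to the loss function
inputs = raw_inputs.to(torch.float32)
targets = raw_targets.float()  # .float() is shorthand for .to(torch.float32)

result = L1Loss()(inputs, targets)
print(inputs.dtype, result)
```

Passing `dtype=torch.float32` when the tensor is created, as the article's own code does, has the same effect and avoids the extra conversion step.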

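The reduction parameter of L1Loss sets how the loss value is computed. The default is the mean of the absolute differences. It can also be set to 'sum', in which case the output for the example data ([1, 2, 3] against [1, 2, 5]) is 2.

- MSELoss: the mean squared error loss function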

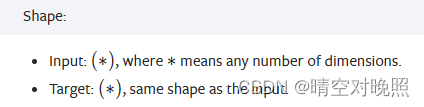

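First look at the input and output shapes the loss function requires.

These also use the batched form, with batch_size as the leading dimension.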

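    import torch
    from torch.nn import L1Loss, MSELoss

    # Loss functions expect floating-point tensors, so set dtype explicitly.
    # inputs holds the actual output, outputs holds the target values.
    inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
    outputs = torch.tensor([1, 2, 5], dtype=torch.float32)

    # Reshape to (1, 1, 1, 3): batch_size=1, 1 channel, 1 row, 3 columns
    inputs = torch.reshape(inputs, (1, 1, 1, 3))
    outputs = torch.reshape(outputs, (1, 1, 1, 3))

    # L1Loss(): mean absolute error, (0 + 0 + 2) / 3
    loss = L1Loss()
    result = loss(inputs, outputs)
    print(result)  # tensor(0.6667)

    # MSELoss(): mean squared error, (0 + 0 + 4) / 3
    loss_mse = MSELoss()
    result_mse = loss_mse(inputs, outputs)
    print(result_mse)  # tensor(1.3333)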
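To make the effect of the reduction parameter concrete, here is a small sketch on the same numbers. The target tensor is called `targets` here (the article's code calls it `outputs`), and the variable names for the results are illustrative only.

```python
import torch
from torch.nn import L1Loss, MSELoss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

# Element-wise absolute differences are [0, 0, 2]
l1_mean = L1Loss(reduction='mean')(inputs, targets)  # (0 + 0 + 2) / 3
l1_sum = L1Loss(reduction='sum')(inputs, targets)    # 0 + 0 + 2
l1_none = L1Loss(reduction='none')(inputs, targets)  # per-element losses, no reduction

# Element-wise squared differences are [0, 0, 4]
mse_mean = MSELoss(reduction='mean')(inputs, targets)  # (0 + 0 + 4) / 3
mse_sum = MSELoss(reduction='sum')(inputs, targets)    # 0 + 0 + 4

print(l1_mean, l1_sum, l1_none)
print(mse_mean, mse_sum)
```

With 'none' the loss keeps the shape of the inputs, which can be handy for inspecting which elements contribute most to the total.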

Backpropagation

from torch import nn

import torch

from torch.utils.tensorboard import SummaryWriter

import torchvision

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset=dataset, batch_size=1)

class Test(nn.Module):

def __init__(self):

super(Test,self).__init__()

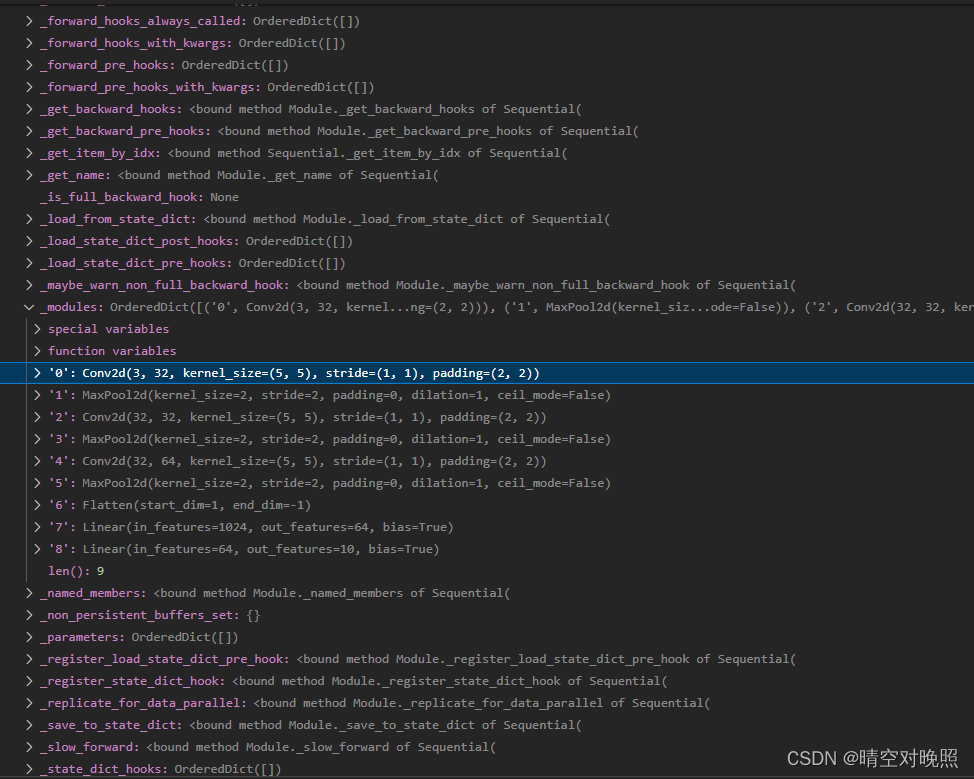

self.model1 = nn.Sequential(

nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

nn.MaxPool2d(kernel_size=2),

nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2), # 计算同上

nn.MaxPool2d(kernel_size=2),

nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),

nn.MaxPool2d(kernel_size=2),

nn.Flatten() ,

nn.Linear(1024, 64),

nn.Linear(64, 10),

)

def forward(self, x):

x = self.model1(x)

return x

net = Test()

loss = nn.CrossEntropyLoss()

for data in dataloader:

imgs, targets = data

output = net(imgs)

resulr_loss = loss(output, targets)

print(resulr_loss)
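The loop above passes the network output and the class labels straight to CrossEntropyLoss. As a minimal sketch of what that call expects and computes, the example below uses made-up scores for a hypothetical 3-class problem (the values are illustrative, not taken from CIFAR10) and reproduces the loss by hand.

```python
import torch
from torch import nn

# One sample (batch_size=1) with raw scores for 3 classes: shape (N, C) = (1, 3)
output = torch.tensor([[0.1, 0.2, 0.3]])
# Index of the correct class for each sample: shape (N,) = (1,), integer dtype
target = torch.tensor([1])

loss = nn.CrossEntropyLoss()
result_loss = loss(output, target)

# Same value by hand: -x[class] + log(sum_j exp(x[j]))
manual = -output[0, 1] + torch.log(torch.exp(output[0]).sum())

print(result_loss, manual)
```

Note that the scores are passed in unnormalized; the softmax is applied inside the loss, so the network does not need a softmax layer of its own for this purpose.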

After adding backpropagation:

    import torchvision
    from torch import nn
    from torch.utils.data import DataLoader

    # CIFAR10 test split as tensors; download=True fetches the data
    # if it is not already present in ./dataset
    dataset = torchvision.datasets.CIFAR10('./dataset', train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset=dataset, batch_size=1)


    class Test(nn.Module):
        def __init__(self):
            super().__init__()
            self.model1 = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),
                nn.MaxPool2d(kernel_size=2),
                nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),  # padding computed as above
                nn.MaxPool2d(kernel_size=2),
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),
                nn.MaxPool2d(kernel_size=2),
                nn.Flatten(),
                nn.Linear(1024, 64),
                nn.Linear(64, 10),
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    # With nn.Sequential the layers no longer have to be called one by one,
    # so the network definition is complete at this point.
    net = Test()
    loss = nn.CrossEntropyLoss()

    for data in dataloader:
        imgs, targets = data
        output = net(imgs)
        result_loss = loss(output, targets)
        # Call backward() on the computed loss tensor (result_loss),
        # not on the loss module (loss)
        result_loss.backward()
        print('ok')
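As a quick sanity check on the layer sizes used in the network, in particular the 1024 input features of the first Linear layer, the following sketch pushes a dummy 32x32 RGB tensor through the same stack of layers and records the shape after each one. The all-ones input and the `shapes` list are assumptions made for illustration; no dataset is needed.

```python
import torch
from torch import nn

# Same layer stack as model1 in the article
model = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=5, padding=2),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(32, 32, kernel_size=5, padding=2),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(32, 64, kernel_size=5, padding=2),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

# Dummy batch with the CIFAR10 image shape: (batch_size, channels, height, width)
x = torch.ones((1, 3, 32, 32))

shapes = []
for layer in model:
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```

Each pooling layer halves the height and width (32, 16, 8, 4), so Flatten produces 64 * 4 * 4 = 1024 features, which matches nn.Linear(1024, 64).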

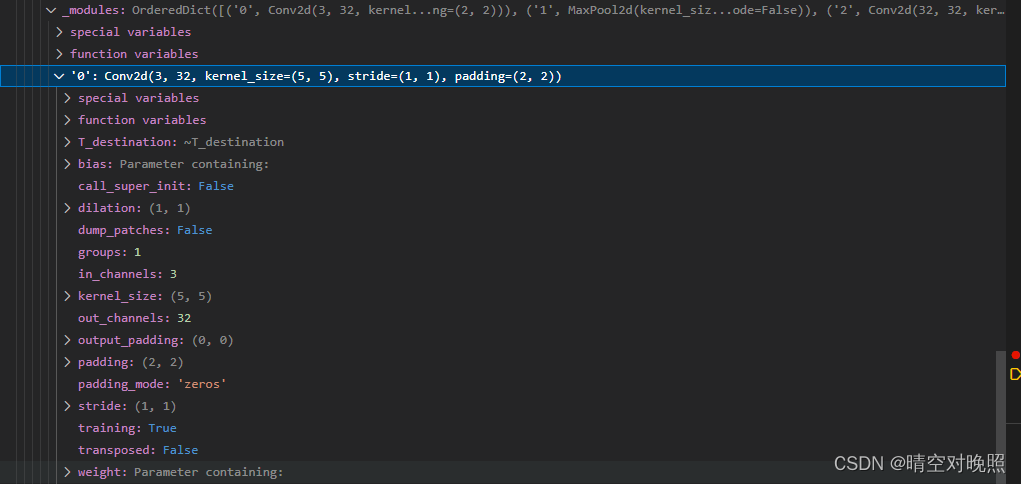

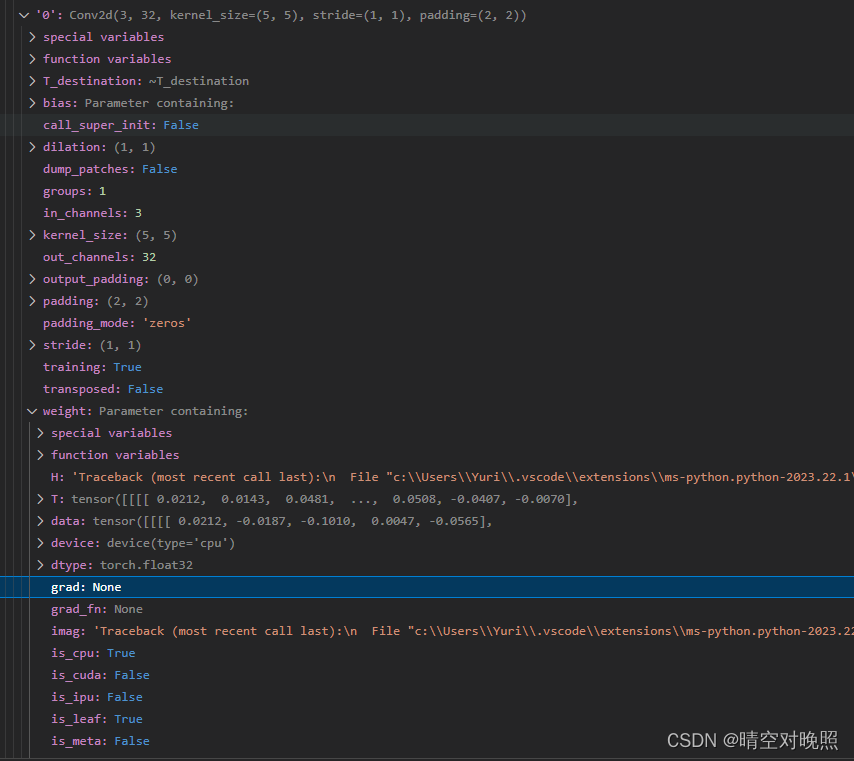

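Set a breakpoint on the backward line and open the debugger view to inspect the network's internal parameters.

Each weight has a grad attribute.

Before backward runs, grad is None.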

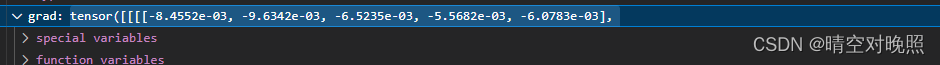

After it runs, the gradients have been computed.
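The same before-and-after behaviour can be observed without a debugger. The sketch below uses a single small nn.Linear layer as a stand-in for the full network (an assumption made to keep the example short) and checks the weight's grad attribute on either side of backward().

```python
import torch
from torch import nn

torch.manual_seed(0)

layer = nn.Linear(3, 2)          # small stand-in for the full network
x = torch.ones((1, 3))           # one sample with 3 features
target = torch.tensor([1])       # correct class index for that sample

loss = nn.CrossEntropyLoss()
result_loss = loss(layer(x), target)

grad_before = layer.weight.grad  # None: nothing has been backpropagated yet
print(grad_before)

result_loss.backward()           # fills .grad for every parameter involved

grad_after = layer.weight.grad   # a tensor with the same shape as the weight
print(grad_after.shape)
```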

An optimizer can then use the computed gradients to update the neural network.
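A minimal sketch of that next step follows. The article does not say which optimizer it uses; torch.optim.SGD, the learning rate of 0.01, and the small nn.Linear stand-in network are all assumptions chosen for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

net = nn.Linear(3, 2)            # stand-in for the full network
loss = nn.CrossEntropyLoss()
# Assumed optimizer and learning rate, for illustration only
optim = torch.optim.SGD(net.parameters(), lr=0.01)

x = torch.ones((1, 3))
target = torch.tensor([1])

weight_before = net.weight.detach().clone()

optim.zero_grad()                # clear gradients left over from a previous step
result_loss = loss(net(x), target)
result_loss.backward()           # compute gradients
optim.step()                     # update the parameters using those gradients

weight_after = net.weight.detach().clone()
print(torch.equal(weight_before, weight_after))
```

Calling zero_grad() before each backward pass matters because gradients accumulate in .grad across calls to backward() rather than being overwritten.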

Original article: https://blog.csdn.net/yuri5151/article/details/135826891
