This post was originally published on the Mellanox blog.

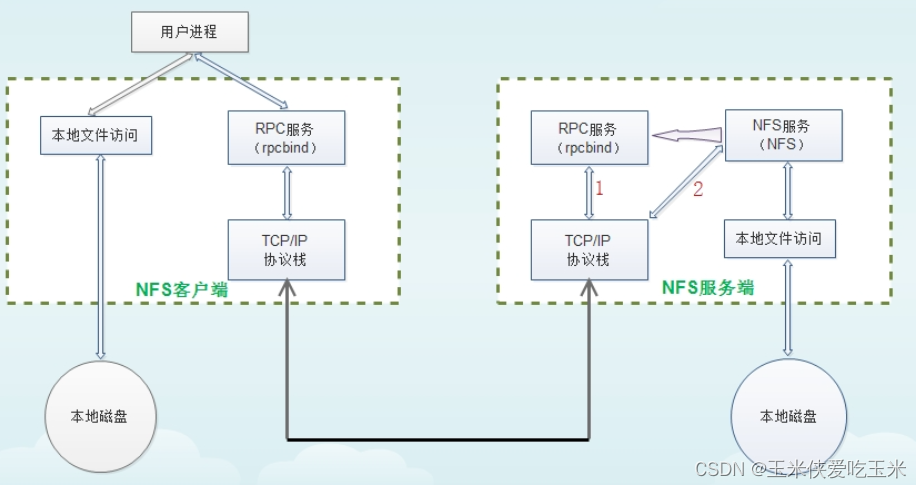

Network File System (NFS) is a ubiquitous component of most modern clusters. It was initially designed as a work-group filesystem, making a central file store available to and shared among several client servers. As NFS became more popular, it was used for mission-critical applications, which required access to storage. To improve client-to-NFS communications, deployments then migrated to higher-performing networks. In addition to higher networking speeds (today 100 GbE and soon 200 GbE), the industry has been looking for technologies that offload stateless networking functions from the CPU to the I/O subsystem. This leaves more CPU cycles free to run business applications and maximizes data center efficiency.

One of the more popular networking offload technologies is RDMA (Remote Direct Memory Access). RDMA makes data transfers more efficient and enables fast data movement between servers and storage without involving their CPUs. Throughput increases, latency drops, and CPU cycles are freed up for applications. RDMA technology is already widely used for efficient data transfer in render farms and large cloud deployments, including the following:

- Microsoft Azure

- HPC solutions (including machine learning and deep learning)

- iSER and NVMe-oF-based storage

- Mission-critical SQL database solutions such as Oracle RAC (Exadata)

- IBM DB2 pureScale

- Microsoft SQL solutions and Teradata

Figure 1. Data communication over TCP vs. RDMA.

Figure 1 shows why IT managers have been deploying RoCE (RDMA over Converged Ethernet). RoCE builds on advances in Ethernet to make RDMA over Ethernet more efficient, which enables widespread deployment of RDMA technologies in mainstream data center applications.

The growing deployment of RDMA-enabled networking solutions in public and private clouds (such as RoCE, which enables running RDMA over Ethernet), together with the recent NFS protocol extensions, makes it possible to run NFS over RoCE. For more information, see the Open Source NFS/RDMA Roadmap presentation given at the OpenFabrics Workshop in 2017 by Chuck Lever, an upstream Linux contributor and Linux kernel architect at Oracle. For more information about how to run NFS over RoCE, see How to Configure NFS over RDMA (RoCE).
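As a rough illustration of what the client-side step of such a configuration typically looks like on Linux, the sketch below assembles a `mount` command that uses the `proto=rdma` mount option and port 20049, the port registered for NFS over RDMA. The server name, export path, mount point, and the helper name `build_nfs_rdma_mount` are hypothetical and do not come from this post; the configuration guide referenced above remains the authoritative procedure.

```python
import shlex

NFS_RDMA_PORT = 20049  # port registered for NFS over RDMA


def build_nfs_rdma_mount(server, export, mount_point, port=NFS_RDMA_PORT):
    """Return the argv list for mounting an NFS export over RDMA.

    All arguments are placeholders supplied by the caller; none of them
    are taken from the test setup described in this post.
    """
    options = f"proto=rdma,port={port}"
    return ["mount", "-t", "nfs", "-o", options,
            f"{server}:{export}", mount_point]


if __name__ == "__main__":
    # Hypothetical names; the command is printed rather than executed,
    # because mounting requires root and an RDMA-capable NFS server.
    argv = build_nfs_rdma_mount("nfs-server", "/export", "/mnt/nfs")
    print(shlex.join(argv))
```

Building the command as a list rather than a single string keeps it safe to pass to `subprocess.run` later without shell quoting concerns.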

To evaluate the boost that RoCE enables compared to TCP, we ran the IOzone benchmark and measured the read/write IOPS and throughput of multithreaded read and write tests. The tests were performed on a single client against a Linux NFS server using tmpfs, so that storage latency was removed from the picture and transport behavior was exposed.

- Client server: Intel Core i5-3450S CPU @ 2.80GHz, one socket, four cores, HT disabled; 16-GB RAM, 1333 MHz DDR3, non-ECC. HCA: NVIDIA Mellanox ConnectX-5 100 GbE NIC (SW version 16.20.1010) plugged into a PCIe 3.0 x16 slot.

- NFS server: Intel Xeon CPU E5-1620 v4 @ 3.50GHz, one socket, four cores, HT disabled; 64-GB RAM, 2400 MHz DDR4. HCA: ConnectX-5 100 GbE NIC (16.20.1010) plugged into a PCIe 3.0 x16 slot.

The client and the NFS server were each connected over a single 100-GbE NVIDIA Mellanox LinkX copper cable to an NVIDIA Mellanox Spectrum SN2700 switch. With its 32 x 100-GbE ports, the SN2700 is the lowest-latency Ethernet switch available in the market today, which makes it ideal for running latency-sensitive applications over Ethernet.
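The IOzone runs described above can be approximated with a command line along the following lines. This is a minimal sketch, not the exact invocation used for the measurements: the mount point, the per-thread file size, and the helper name `build_iozone_command` are assumptions, while the thread count and record sizes mirror the values quoted in the figure captions. It relies on IOzone's throughput mode (`-t`), record size (`-r`), file size (`-s`), test selection (`-i 0` for write, `-i 1` for read), operations-per-second output (`-O`), and per-thread file list (`-F`).

```python
import shlex


def build_iozone_command(mount_point, record_size_kb, threads=16,
                         file_size="1g", report_iops=False):
    """Return an IOzone argv list for a multithreaded write + read run.

    file_size is per thread and is an assumed value; the post does not
    state which file size was used.
    """
    # Throughput mode needs one target file per thread.
    files = [f"{mount_point}/iozone.{i}" for i in range(threads)]
    argv = [
        "iozone",
        "-i", "0",                    # test 0: write/rewrite (creates the files)
        "-i", "1",                    # test 1: read/reread
        "-t", str(threads),           # throughput mode with N threads
        "-r", f"{record_size_kb}k",   # record (block) size
        "-s", file_size,              # file size per thread (assumption)
    ]
    if report_iops:
        argv.append("-O")             # report operations per second
    argv += ["-F", *files]            # file list goes last
    return argv


if __name__ == "__main__":
    # Hypothetical mount point; commands are printed, not executed.
    print(shlex.join(build_iozone_command("/mnt/nfs", 128)))
    print(shlex.join(build_iozone_command("/mnt/nfs", 8, report_iops=True)))
```

Running the same command once against a TCP mount and once against an RDMA mount of the same export keeps the comparison limited to the transport.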

The following charts compare the bandwidth and IOPS measured over RoCE and over TCP when running the IOzone test.

Figure 2. Running NFS over RoCE enables 2X to 3X higher bandwidth (using a 128-KB block size, read and write with 16 threads, aggregate throughput).

Figure 3. NFS over RoCE enables up to 140% higher IOPS (using an 8-KB block size, read and write with 16 threads, aggregate IOPS).

Figure 4. NFS over RoCE enables up to 150% higher IOPS (using a 2-KB block size, read and write with 16 threads, aggregate IOPS).
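The captions mix two conventions: a multiplier for bandwidth and a percentage for IOPS. To put them on one scale, the short sketch below converts between the two, reading "N% higher" as an increase over the TCP baseline and, as an assumption about the caption's wording, reading "2X to 3X higher" as two to three times the TCP figure. The helper names are hypothetical.

```python
def speedup_from_percent_higher(percent_higher):
    """Convert 'N% higher than baseline' into a multiplier of the baseline."""
    return (100 + percent_higher) / 100


def percent_higher_from_speedup(speedup):
    """Convert a multiplier of the baseline into 'N% higher than baseline'."""
    return (speedup - 1) * 100


if __name__ == "__main__":
    # IOPS captions: up to 140% and 150% higher than TCP.
    for pct in (140, 150):
        print(f"{pct}% higher -> {speedup_from_percent_higher(pct):.1f}x TCP")
    # Bandwidth caption: 2X to 3X, read as multiples of the TCP result.
    for mult in (2, 3):
        print(f"{mult}x TCP -> {percent_higher_from_speedup(mult):.0f}% higher")
```

Under that reading, the IOPS gains correspond to roughly 2.4x and 2.5x the TCP results, which is in the same range as the 2X to 3X bandwidth gain.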

Conclusion

Running NFS over RDMA-enabled networks such as RoCE, which offloads the data communication work from the CPU, generates a significant performance boost. As a result, Mellanox expects that NFS over RoCE will eventually replace NFS over TCP and become the leading transport technology in data centers.

Related resources

- GTC session: Magnum IO GPUDirect, NCCL, NVSHMEM, and GDA-KI on Grace Hopper and Hopper systems

- GTC session: High-Speed Streaming Signal Processing: Teaming Up the NIC and GPU

- GTC session: Accelerating and Securing GPU Accesses to Large Datasets

- SDK: GPUDirect Storage

- SDK: Magnum IO

- Webinar: Doubling Network File System Performance with RDMA-Enabled Networking
