These are my notes from working through a requirement: record audio and video with Camera2 + OpenGL ES + MediaCodec + AudioRecord.

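Requirements:

- Write extra SEI data into every video frame, so that the custom per-frame data can be recovered later when decoding.

- When Record is tapped, the recording covers the period from N seconds before the tap to N seconds after it, and the output is saved as files of 60 s each.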

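Up front: the write-up touches on the following topics, so you can quickly check whether it has what you are looking for.

- Using MediaCodec: createInputSurface() creates a Surface, which receives the frames coming from Camera2 through EGL.

- Using AudioRecord

- Using Camera2

- Basic use of OpenGL

- A simple example of writing H.264 SEI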

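The overall design is fairly simple: open the camera, set up the OpenGL environment, then start a video thread that records video data and an audio thread that records audio data. Both video and audio data are cached in a custom list, and finally an encoding thread writes the data from the video list and the audio list into an MP4 file. I used Android SDK 28, because saving files on 29 and above is more troublesome. The project has not been uploaded yet; send me a private message if you need it.

All of these functions are split into modules, each in its own class. The independent modules come first.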
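The thread layout described above can be sketched in plain Java without any Android classes. This is only an illustration under assumptions: `PipelineSketch`, `Sample`, and the 40 ms / 20 ms spacing are hypothetical stand-ins for the encoded buffers and their timestamps, and the "muxer" simply merges both caches by timestamp instead of writing an MP4.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

/** Hypothetical sketch: two producer threads fill caches, one consumer drains them. */
final class PipelineSketch {
    /** Stand-in for one encoded sample: track name plus timestamp in microseconds. */
    static final class Sample {
        final String track;
        final long ptsUs;
        Sample(String track, long ptsUs) {
            this.track = track;
            this.ptsUs = ptsUs;
        }
    }

    /** Starts a producer thread that pushes count samples spaced stepUs apart. */
    static Thread producer(String track, int count, long stepUs,
                           ConcurrentLinkedQueue<Sample> out) {
        Thread t = new Thread(() -> {
            for (int i = 0; i < count; i++) {
                out.add(new Sample(track, i * stepUs));
            }
        }, track + "-thread");
        t.start();
        return t;
    }

    /** Plays the role of the muxing thread: merges both caches ordered by timestamp. */
    static List<Sample> drain(ConcurrentLinkedQueue<Sample> video,
                              ConcurrentLinkedQueue<Sample> audio) {
        List<Sample> all = new ArrayList<>(video);
        all.addAll(audio);
        all.sort(Comparator.comparingLong((Sample s) -> s.ptsUs));
        return all;
    }

    /** Runs one second of fake video (25 frames) and audio (50 chunks). */
    static List<Sample> runOnce() {
        ConcurrentLinkedQueue<Sample> video = new ConcurrentLinkedQueue<>();
        ConcurrentLinkedQueue<Sample> audio = new ConcurrentLinkedQueue<>();
        Thread v = producer("video", 25, 40_000L, video);
        Thread a = producer("audio", 50, 20_000L, audio);
        try {
            v.join();
            a.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for producers", e);
        }
        return drain(video, audio);
    }
}
```

In the real project the producers run continuously and the consumer drains while they are still producing; the sketch joins first only to keep the result deterministic.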

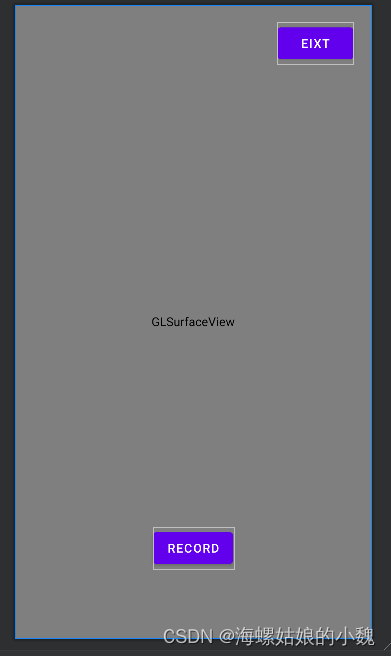

UI layout

The UI is simple: one GLSurfaceView and two Button controls.

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<android.opengl.GLSurfaceView

android:id="@+id/glView"

android:layout_width="match_parent"

android:layout_height="match_parent"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<Button

android:id="@+id/recordBtn"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="80dp"

android:text="Record"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintLeft_toLeftOf="parent"

app:layout_constraintRight_toRightOf="parent" />

<Button

android:id="@+id/exit"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginTop="20dp"

android:layout_marginRight="20dp"

android:text="Exit"

app:layout_constraintTop_toTopOf="parent"

app:layout_constraintRight_toRightOf="parent" />

</androidx.constraintlayout.widget.ConstraintLayout>

Camera2

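Using the Camera2 framework is fairly simple. One point to note: the surface passed to startPreview is later used as the argument of mCaptureRequestBuilder.addTarget(surface). That surface is produced in the basic steps below. I only mention them briefly here; the code follows further down.

1. The surface is backed by a texture generated with OpenGL: GLES30.glGenTextures(1, mTexture, 0);

2. A SurfaceTexture is created from the texture: mSurfaceTexture = new SurfaceTexture(mTexture[0]);

3. A Surface is created from mSurfaceTexture: mSurface = new Surface(mSurfaceTexture);

4. The Surface is handed to the camera: mCamera.startPreview(mSurface);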

public class Camera2 {

private final String TAG = "Abbott Camera2";

private Context mContext;

private CameraManager mCameraManager;

private CameraDevice mCameraDevice;

private String[] mCamList;

private String mCameraId;

private Size mPreviewSize;

private HandlerThread mBackgroundThread;

private Handler mBackgroundHandler;

private CaptureRequest.Builder mCaptureRequestBuilder;

private CaptureRequest mCaptureRequest;

private CameraCaptureSession mCameraCaptureSession;

public Camera2(Context Context) {

mContext = Context;

mCameraManager = (CameraManager) mContext.getSystemService(android.content.Context.CAMERA_SERVICE);

try {

mCamList = mCameraManager.getCameraIdList();

} catch (CameraAccessException e) {

e.printStackTrace();

}

mBackgroundThread = new HandlerThread("CameraThread");

mBackgroundThread.start();

mBackgroundHandler = new Handler(mBackgroundThread.getLooper());

}

public void openCamera(int width, int height, String id) {

try {

Log.d(TAG, "openCamera: id:" + id);

CameraCharacteristics characteristics = mCameraManager.getCameraCharacteristics(id);

if (characteristics.get(CameraCharacteristics.LENS_FACING) == CameraCharacteristics.LENS_FACING_FRONT) {

}

StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

mPreviewSize = getOptimalSize(map.getOutputSizes(SurfaceTexture.class), width, height);

mCameraId = id;

} catch (CameraAccessException e) {

e.printStackTrace();

}

try {

if (ActivityCompat.checkSelfPermission(mContext, android.Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {

return;

}

Log.d(TAG, "mCameraManager.openCamera(mCameraId, mStateCallback, mBackgroundHandler);: " + mCameraId);

mCameraManager.openCamera(mCameraId, mStateCallback, mBackgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private Size getOptimalSize(Size[] sizeMap, int width, int height) {

List<Size> sizeList = new ArrayList<>();

for (Size option : sizeMap) {

if (width > height) {

if (option.getWidth() > width && option.getHeight() > height) {

sizeList.add(option);

}

} else {

if (option.getWidth() > height && option.getHeight() > width) {

sizeList.add(option);

}

}

}

if (sizeList.size() > 0) {

return Collections.min(sizeList, new Comparator<Size>() {

@Override

public int compare(Size lhs, Size rhs) {

return Long.signum((long) lhs.getWidth() * lhs.getHeight() - (long) rhs.getWidth() * rhs.getHeight());

}

});

}

return sizeMap[0];

}

private final CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(@NonNull CameraDevice camera) {

mCameraDevice = camera;

}

@Override

public void onDisconnected(@NonNull CameraDevice camera) {

camera.close();

mCameraDevice = null;

}

@Override

public void onError(@NonNull CameraDevice camera, int error) {

camera.close();

mCameraDevice = null;

}

};

public void startPreview(Surface surface) {

try {

mCaptureRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

mCaptureRequestBuilder.addTarget(surface);

mCameraDevice.createCaptureSession(Collections.singletonList(surface), new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession session) {

try {

mCaptureRequest = mCaptureRequestBuilder.build();

mCameraCaptureSession = session;

mCameraCaptureSession.setRepeatingRequest(mCaptureRequest, null, mBackgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession session) {

}

}, mBackgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

}

ImageList

This class is the cache for video and audio data. There is not much to explain; just use it as is.

public class ImageList {

private static final String TAG = "Abbott ImageList";

private Object mImageListLock = new Object();

int kCapacity;

private List<ImageItem> mImageList = new CopyOnWriteArrayList<>();

public ImageList(int capacity) {

kCapacity = capacity;

}

public synchronized void addItem(long Timestamp, ByteBuffer byteBuffer, MediaCodec.BufferInfo bufferInfo) {

synchronized (mImageListLock) {

ImageItem item = new ImageItem(Timestamp, byteBuffer, bufferInfo);

mImageList.add(item);

if (mImageList.size() > kCapacity) {

int excessItems = mImageList.size() - kCapacity;

mImageList.subList(0, excessItems).clear();

}

}

}

public synchronized List<ImageItem> getItemsInTimeRange(long startTimestamp, long endTimestamp) {

List<ImageItem> itemsInTimeRange = new ArrayList<>();

synchronized (mImageListLock) {

for (ImageItem item : mImageList) {

long itemTimestamp = item.getTimestamp();

// Keep the item only if its timestamp falls inside the requested range

if (itemTimestamp >= startTimestamp && itemTimestamp <= endTimestamp) {

itemsInTimeRange.add(item);

}

}

}

return itemsInTimeRange;

}

public synchronized ImageItem getItem() {

return mImageList.get(0);

}

public synchronized void removeItem() {

mImageList.remove(0);

}

public synchronized int getSize() {

return mImageList.size();

}

public static class ImageItem {

private long mTimestamp;

private ByteBuffer mVideoBuffer;

private MediaCodec.BufferInfo mVideoBufferInfo;

public ImageItem(long first, ByteBuffer second, MediaCodec.BufferInfo bufferInfo) {

this.mTimestamp = first;

this.mVideoBuffer = second;

this.mVideoBufferInfo = bufferInfo;

}

public synchronized long getTimestamp() {

return mTimestamp;

}

public synchronized ByteBuffer getVideoByteBuffer() {

return mVideoBuffer;

}

public synchronized MediaCodec.BufferInfo getVideoBufferInfo() {

return mVideoBufferInfo;

}

}

}
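The caching idea behind ImageList can also be written with an ArrayDeque guarded by a single lock. The following is a minimal sketch, not the author's class: `TimedRingBuffer`, `inRange`, and `poll` are hypothetical names, and the payload type is generic so the example runs without Android's ByteBuffer/BufferInfo pair. Unlike `getItem()` above, `poll()` returns null on an empty buffer instead of throwing.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/** Bounded, thread-safe cache of timestamped payloads (hypothetical simplification). */
final class TimedRingBuffer<T> {
    private static final class Entry<T> {
        final long timestampUs;
        final T payload;
        Entry(long timestampUs, T payload) {
            this.timestampUs = timestampUs;
            this.payload = payload;
        }
    }

    private final ArrayDeque<Entry<T>> entries = new ArrayDeque<>();
    private final int capacity;

    TimedRingBuffer(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
    }

    /** Appends a payload and evicts the oldest entries beyond the capacity. */
    synchronized void add(long timestampUs, T payload) {
        entries.addLast(new Entry<>(timestampUs, payload));
        while (entries.size() > capacity) {
            entries.removeFirst();
        }
    }

    /** Returns the payloads whose timestamp lies in [startUs, endUs], oldest first. */
    synchronized List<T> inRange(long startUs, long endUs) {
        List<T> out = new ArrayList<>();
        for (Entry<T> e : entries) {
            if (e.timestampUs >= startUs && e.timestampUs <= endUs) {
                out.add(e.payload);
            }
        }
        return out;
    }

    /** Removes and returns the oldest payload, or null when the buffer is empty. */
    synchronized T poll() {
        Entry<T> e = entries.pollFirst();
        return e == null ? null : e.payload;
    }

    synchronized int size() {
        return entries.size();
    }
}
```

With a capacity of `1000 / 40 * N` entries, as used for video later in this post, the buffer holds roughly the last N seconds at 25 fps.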

GlProgram

The class that creates the OpenGL program. It currently targets OpenGL ES 3.0.

public class GlProgram {

public static final String mVertexShader =

"#version 300 es\n" +

"in vec4 vPosition;" +

"in vec2 vCoordinate;" +

"out vec2 vTextureCoordinate;" +

"void main() {" +

" gl_Position = vPosition;" +

" vTextureCoordinate = vCoordinate;" +

"}";

public static final String mFragmentShader =

"#version 300 es\n" +

"#extension GL_OES_EGL_image_external : require\n" +

"#extension GL_OES_EGL_image_external_essl3 : require\n" +

"precision mediump float;" +

"in vec2 vTextureCoordinate;" +

"uniform samplerExternalOES oesTextureSampler;" +

// Names starting with gl_ are reserved in GLSL ES 3.00, so the output gets its own name.

"out vec4 fragColor;" +

"void main() {" +

" fragColor = texture(oesTextureSampler, vTextureCoordinate);" +

"}";

public static int createProgram(String vertexShaderSource, String fragShaderSource) {

int program = GLES30.glCreateProgram();

if (0 == program) {

Log.e("Arc_ShaderManager", "create program error ,error=" + GLES30.glGetError());

return 0;

}

int vertexShader = loadShader(GLES30.GL_VERTEX_SHADER, vertexShaderSource);

if (0 == vertexShader) {

return 0;

}

int fragShader = loadShader(GLES30.GL_FRAGMENT_SHADER, fragShaderSource);

if (0 == fragShader) {

return 0;

}

GLES30.glAttachShader(program, vertexShader);

GLES30.glAttachShader(program, fragShader);

GLES30.glLinkProgram(program);

int[] status = new int[1];

GLES30.glGetProgramiv(program, GLES30.GL_LINK_STATUS, status, 0);

if (GLES30.GL_FALSE == status[0]) {

String errorMsg = GLES30.glGetProgramInfoLog(program);

Log.e("Arc_ShaderManager", "createProgram error : " + errorMsg);

GLES30.glDeleteShader(vertexShader);

GLES30.glDeleteShader(fragShader);

GLES30.glDeleteProgram(program);

return 0;

}

GLES30.glDetachShader(program, vertexShader);

GLES30.glDetachShader(program, fragShader);

GLES30.glDeleteShader(vertexShader);

GLES30.glDeleteShader(fragShader);

return program;

}

private static int loadShader(int type, String shaderSource) {

int shader = GLES30.glCreateShader(type);

if (0 == shader) {

Log.e("Arc_ShaderManager", "create shader error, shader type=" + type + " , error=" + GLES30.glGetError());

return 0;

}

GLES30.glShaderSource(shader, shaderSource);

GLES30.glCompileShader(shader);

int[] status = new int[1];

GLES30.glGetShaderiv(shader, GLES30.GL_COMPILE_STATUS, status, 0);

if (0 == status[0]) {

String errorMsg = GLES30.glGetShaderInfoLog(shader);

Log.e("Arc_ShaderManager", "createShader shader = " + type + " error: " + errorMsg);

GLES30.glDeleteShader(shader);

return 0;

}

return shader;

}

}
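Preprocessor directives such as `#version` and `#extension` must each end with a real line break, which is easy to lose when the shader is built by string concatenation. One way to avoid that, shown here as a sketch, is to join the source from one string per line. `ShaderSource` and `lines` are hypothetical names, and the fragment shader assumes that requiring only `GL_OES_EGL_image_external_essl3` is sufficient on the target device.

```java
/** Assembles GLSL source one directive or statement per line (hypothetical helper). */
final class ShaderSource {
    /** Joins the given lines with '\n' and terminates the last one as well. */
    static String lines(String... lines) {
        return String.join("\n", lines) + "\n";
    }

    static final String VERTEX = lines(
            "#version 300 es",
            "in vec4 vPosition;",
            "in vec2 vCoordinate;",
            "out vec2 vTextureCoordinate;",
            "void main() {",
            "    gl_Position = vPosition;",
            "    vTextureCoordinate = vCoordinate;",
            "}");

    static final String FRAGMENT = lines(
            "#version 300 es",
            "#extension GL_OES_EGL_image_external_essl3 : require",
            "precision mediump float;",
            "in vec2 vTextureCoordinate;",
            "uniform samplerExternalOES oesTextureSampler;",
            // User-declared outputs must not use the reserved gl_ prefix.
            "out vec4 fragColor;",
            "void main() {",
            "    fragColor = texture(oesTextureSampler, vTextureCoordinate);",
            "}");
}
```

These strings could be passed to `GlProgram.createProgram` in place of `mVertexShader` and `mFragmentShader`.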

OesTexture

Links the OpenGL program introduced above and draws the texture using the coordinates passed to the vertex and fragment shaders.

public class OesTexture {

private static final String TAG = "Abbott OesTexture";

private int mProgram;

private final FloatBuffer mCordsBuffer;

private final FloatBuffer mPositionBuffer;

private int mPositionHandle;

private int mCordsHandle;

private int mOESTextureHandle;

public OesTexture() {

float[] positions = {

-1.0f, 1.0f,

-1.0f, -1.0f,

1.0f, 1.0f,

1.0f, -1.0f

};

float[] texCords = {

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 0.0f,

1.0f, 1.0f,

};

mPositionBuffer = ByteBuffer.allocateDirect(positions.length * 4).order(ByteOrder.nativeOrder())

.asFloatBuffer();

mPositionBuffer.put(positions).position(0);

mCordsBuffer = ByteBuffer.allocateDirect(texCords.length * 4).order(ByteOrder.nativeOrder())

.asFloatBuffer();

mCordsBuffer.put(texCords).position(0);

}

public void init() {

this.mProgram = GlProgram.createProgram(GlProgram.mVertexShader, GlProgram.mFragmentShader);

if (0 == this.mProgram) {

Log.e(TAG, "createProgram failed");

}

mPositionHandle = GLES30.glGetAttribLocation(mProgram, "vPosition");

mCordsHandle = GLES30.glGetAttribLocation(mProgram, "vCoordinate");

mOESTextureHandle = GLES30.glGetUniformLocation(mProgram, "oesTextureSampler");

GLES30.glDisable(GLES30.GL_DEPTH_TEST);

}

public void PrepareTexture(int OESTextureId) {

GLES30.glUseProgram(this.mProgram);

GLES30.glEnableVertexAttribArray(mPositionHandle);

GLES30.glVertexAttribPointer(mPositionHandle, 2, GLES30.GL_FLOAT, false, 2 * 4, mPositionBuffer);

GLES30.glEnableVertexAttribArray(mCordsHandle);

GLES30.glVertexAttribPointer(mCordsHandle, 2, GLES30.GL_FLOAT, false, 2 * 4, mCordsBuffer);

GLES30.glActiveTexture(GLES30.GL_TEXTURE0);

GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, OESTextureId);

GLES30.glUniform1i(mOESTextureHandle, 0);

GLES30.glDrawArrays(GLES30.GL_TRIANGLE_STRIP, 0, 4);

GLES30.glDisableVertexAttribArray(mPositionHandle);

GLES30.glDisableVertexAttribArray(mCordsHandle);

GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, 0);

}

}

The next three classes, VideoRecorder, AudioEncoder, and EncodingRunnable, have to be used together.
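Both recorder classes below track the same "alarm" window: a tap at time t opens the range [t - N, t + N], and a second tap while the window is open only pushes the end further out. The sketch below isolates that bookkeeping. `AlarmWindow` and its method names are hypothetical, times are presentation timestamps in microseconds, and clamping the start to 0 is my own addition rather than something the classes below do.

```java
/** Tracks the [start, end] capture window around record requests (hypothetical helper). */
final class AlarmWindow {
    private final long halfWindowUs;
    private boolean active;
    private long startUs;
    private long endUs;

    AlarmWindow(long halfWindowUs) {
        this.halfWindowUs = halfWindowUs;
    }

    /** Called when Record is tapped at presentation time nowUs. */
    synchronized void trigger(long nowUs) {
        if (!active) {
            // A fresh window reaches back into the cached data.
            startUs = Math.max(0L, nowUs - halfWindowUs);
            active = true;
        }
        // Every tap extends the end of the window.
        endUs = nowUs + halfWindowUs;
    }

    /** Returns true if the sample belongs to the window; closes the window once it has passed. */
    synchronized boolean accepts(long ptsUs) {
        if (!active) {
            return false;
        }
        if (ptsUs > endUs) {
            active = false;
            return false;
        }
        return ptsUs >= startUs;
    }

    synchronized boolean isActive() {
        return active;
    }

    synchronized long startUs() {
        return startUs;
    }

    synchronized long endUs() {
        return endUs;
    }
}
```

For example, with N = 5 s a tap at 12 s opens the window 7 s to 17 s, and a second tap at 15 s moves the end to 20 s while the start stays at 7 s.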

public class AudioEncoder extends Thread {

private static final String TAG = "Abbott AudioEncoder";

private static final int SAVEMP4_INTERNAL = Param.recordInternal * 1000 * 1000;

private static final int SAMPLE_RATE = 44100;

private static final int CHANNEL_COUNT = 1;

private static final int BIT_RATE = 96000;

private EncodingRunnable mEncodingRunnable;

private MediaCodec mMediaCodec;

private AudioRecord mAudioRecord;

private MediaFormat mFormat;

private MediaFormat mOutputFormat;

private long nanoTime;

int mBufferSizeInBytes = 0;

boolean mExitThread = true;

private ImageList mAudioList;

private MediaCodec.BufferInfo mAudioBufferInfo;

private boolean mAlarm = false;

private long mAlarmTime;

private long mAlarmStartTime;

private long mAlarmEndTime;

private List<ImageList.ImageItem> mMuxerImageItem;

private Object mLock = new Object();

private MediaCodec.BufferInfo mAlarmBufferInfo;

public AudioEncoder( EncodingRunnable encodingRunnable) throws IOException {

mEncodingRunnable = encodingRunnable;

nanoTime = System.nanoTime();

createAudio();

createMediaCodec();

int kCapacity = 1000 / 20 * Param.recordInternal;

mAudioList = new ImageList(kCapacity);

}

public void createAudio() {

mBufferSizeInBytes = AudioRecord.getMinBufferSize(SAMPLE_RATE, AudioFormat.CHANNEL_IN_MONO, AudioFormat.ENCODING_PCM_16BIT);

mAudioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, SAMPLE_RATE, AudioFormat.CHANNEL_IN_MONO, AudioFormat.ENCODING_PCM_16BIT, mBufferSizeInBytes);

}

public void createMediaCodec() throws IOException {

mFormat = MediaFormat.createAudioFormat(MediaFormat.MIMETYPE_AUDIO_AAC, SAMPLE_RATE, CHANNEL_COUNT);

mFormat.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);

mFormat.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);

mFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 8192);

mMediaCodec = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_AUDIO_AAC);

mMediaCodec.configure(mFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

}

public synchronized void setAlarm() {

synchronized (mLock) {

Log.d(TAG, "setAudio Alarm enter");

mEncodingRunnable.setAudioFormat(mOutputFormat);

mEncodingRunnable.setAudioAlarmTrue();

mAlarmTime = mAlarmBufferInfo.presentationTimeUs;

mAlarmEndTime = mAlarmTime + SAVEMP4_INTERNAL;

if (!mAlarm) {

mAlarmStartTime = mAlarmTime - SAVEMP4_INTERNAL;

}

mAlarm = true;

Log.d(TAG, "setAudio Alarm exit");

}

}

@Override

public void run() {

super.run();

mMediaCodec.start();

mAudioRecord.startRecording();

while (mExitThread) {

synchronized (mLock) {

byte[] inputAudioData = new byte[mBufferSizeInBytes];

int res = mAudioRecord.read(inputAudioData, 0, inputAudioData.length);

if (res > 0) {

if (mAudioRecord != null) {

enCodeAudio(inputAudioData);

}

}

}

}

Log.d(TAG, "AudioRecord run: exit");

}

private void enCodeAudio(byte[] inputAudioData) {

mAudioBufferInfo = new MediaCodec.BufferInfo();

int index = mMediaCodec.dequeueInputBuffer(-1);

if (index < 0) {

return;

}

// getInputBuffers() is deprecated since API 21; fetch the single buffer for this index instead.

ByteBuffer audioInputBuffer = mMediaCodec.getInputBuffer(index);

audioInputBuffer.clear();

audioInputBuffer.put(inputAudioData);

audioInputBuffer.limit(inputAudioData.length);

mMediaCodec.queueInputBuffer(index, 0, inputAudioData.length, (System.nanoTime() - nanoTime) / 1000, 0);

int status = mMediaCodec.dequeueOutputBuffer(mAudioBufferInfo, 0);

ByteBuffer outputBuffer;

if (status == MediaCodec.INFO_TRY_AGAIN_LATER) {

} else if (status == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {

mOutputFormat = mMediaCodec.getOutputFormat();

} else {

while (status >= 0) {

MediaCodec.BufferInfo tmpaudioBufferInfo = new MediaCodec.BufferInfo();

tmpaudioBufferInfo.set(mAudioBufferInfo.offset, mAudioBufferInfo.size, mAudioBufferInfo.presentationTimeUs, mAudioBufferInfo.flags);

mAlarmBufferInfo = new MediaCodec.BufferInfo();

mAlarmBufferInfo.set(mAudioBufferInfo.offset, mAudioBufferInfo.size, mAudioBufferInfo.presentationTimeUs, mAudioBufferInfo.flags);

outputBuffer = mMediaCodec.getOutputBuffer(status);

ByteBuffer buffer = ByteBuffer.allocate(tmpaudioBufferInfo.size);

buffer.limit(tmpaudioBufferInfo.size);

buffer.put(outputBuffer);

buffer.flip();

if (tmpaudioBufferInfo.size > 0) {

if (mAlarm) {

mMuxerImageItem = mAudioList.getItemsInTimeRange(mAlarmStartTime, mAlarmEndTime);

for (ImageList.ImageItem item : mMuxerImageItem) {

mEncodingRunnable.pushAudio(item);

}

mAlarmStartTime = tmpaudioBufferInfo.presentationTimeUs;

mAudioList.addItem(tmpaudioBufferInfo.presentationTimeUs, buffer, tmpaudioBufferInfo);

if (tmpaudioBufferInfo.presentationTimeUs - mAlarmTime > SAVEMP4_INTERNAL) {

mAlarm = false;

mEncodingRunnable.setAudioAlarmFalse();

Log.d(TAG, "mEncodingRunnable.setAudio itemAlarmFalse();");

}

} else {

mAudioList.addItem(tmpaudioBufferInfo.presentationTimeUs, buffer, tmpaudioBufferInfo);

}

}

mMediaCodec.releaseOutputBuffer(status, false);

status = mMediaCodec.dequeueOutputBuffer(mAudioBufferInfo, 0);

}

}

}

public synchronized void stopAudioRecord() throws IllegalStateException {

synchronized (mLock) {

mExitThread = false;

}

try {

join();

} catch (InterruptedException e) {

e.printStackTrace();

}

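// The recording thread has exited, so the microphone can be released as well.

mAudioRecord.stop();

mAudioRecord.release();

mAudioRecord = null;

mMediaCodec.stop();

mMediaCodec.release();

mMediaCodec = null;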

}

}
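AudioEncoder stamps each PCM chunk with `(System.nanoTime() - nanoTime) / 1000`, which depends on when the read call happens to return. A common alternative, sketched here as an assumption and not as what the class above does, is to derive the timestamp from the number of PCM frames already submitted. `PcmClock` is a hypothetical name; timestamps produced this way start at 0 and would still need an offset to line up with the video clock.

```java
/** Derives presentation timestamps from the amount of PCM submitted (hypothetical helper). */
final class PcmClock {
    private final int sampleRate;
    private final int bytesPerFrame;
    private long framesSubmitted;

    PcmClock(int sampleRate, int channelCount, int bytesPerSample) {
        if (sampleRate <= 0 || channelCount <= 0 || bytesPerSample <= 0) {
            throw new IllegalArgumentException("all parameters must be positive");
        }
        this.sampleRate = sampleRate;
        this.bytesPerFrame = channelCount * bytesPerSample;
    }

    /** Returns the timestamp in microseconds of the chunk about to be queued, then advances. */
    long nextPtsUs(int chunkBytes) {
        long ptsUs = framesSubmitted * 1_000_000L / sampleRate;
        framesSubmitted += chunkBytes / bytesPerFrame;
        return ptsUs;
    }
}
```

With the settings used above (44100 Hz, mono, 16-bit PCM), a 2048-byte chunk is 1024 frames, so consecutive chunks are about 23.2 ms apart.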

public class VideoRecorder extends Thread {

private static final String TAG = "Abbott VideoRecorder";

private static final int SAVE_MP4_Internal = 1000 * 1000 * Param.recordInternal;

// EGL

private static final int EGL_RECORDABLE_ANDROID = 0x3142;

private EGLContext mEGLContext = EGL14.EGL_NO_CONTEXT;

private EGLDisplay mEGLDisplay = EGL14.EGL_NO_DISPLAY;

private EGLSurface mEGLSurface = EGL14.EGL_NO_SURFACE;

private EGLContext mSharedContext = EGL14.EGL_NO_CONTEXT;

private Surface mSurface;

private int mOESTextureId;

private OesTexture mOesTexture;

private ImageList mImageList;

private List<ImageList.ImageItem> muxerImageItem;

// Thread

private boolean mExitThread;

private Object mLock = new Object();

private Object object = new Object();

private MediaCodec mMediaCodec;

private MediaFormat mOutputFormat;

private boolean mAlarm = false;

private long mAlarmTime;

private long mAlarmStartTime;

private long mAlarmEndTime;

private MediaCodec.BufferInfo mBufferInfo;

private EncodingRunnable mEncodingRunnable;

private String mSeiMessage;

public VideoRecorder(EGLContext eglContext, EncodingRunnable encodingRunnable) {

mSharedContext = eglContext;

mEncodingRunnable = encodingRunnable;

int kCapacity = 1000 / 40 * Param.recordInternal;

mImageList = new ImageList(kCapacity);

try {

MediaFormat mediaFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, 1920, 1080);

mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);

mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, 1920 * 1080 * 25 / 5);

mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 25);

mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);

mMediaCodec = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);

mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

mSurface = mMediaCodec.createInputSurface();

} catch (IOException e) {

e.printStackTrace();

}

}

@Override

public void run() {

super.run();

try {

initEgl();

mOesTexture = new OesTexture();

mOesTexture.init();

synchronized (mLock) {

mLock.wait(33);

}

guardedRun();

} catch (Exception e) {

e.printStackTrace();

}

}

private void guardedRun() throws InterruptedException, RuntimeException {

mExitThread = false;

while (true) {

synchronized (mLock) {

if (mExitThread) {

break;

}

mLock.wait(33);

}

mOesTexture.PrepareTexture(mOESTextureId);

swapBuffers();

enCodeVideo();

}

Log.d(TAG, "guardedRun: exit");

unInitEgl();

}

private void enCodeVideo() {

mBufferInfo = new MediaCodec.BufferInfo();

int status = mMediaCodec.dequeueOutputBuffer(mBufferInfo, 0);

ByteBuffer outputBuffer = null;

if (status == MediaCodec.INFO_TRY_AGAIN_LATER) {

} else if (status == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {

mOutputFormat = mMediaCodec.getOutputFormat();

} else if (status == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {

} else {

outputBuffer = mMediaCodec.getOutputBuffer(status);

if ((mBufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {

mBufferInfo.size = 0;

}

if (mBufferInfo.size > 0) {

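outputBuffer.position(mBufferInfo.offset);

outputBuffer.limit(mBufferInfo.offset + mBufferInfo.size);

// Copy the frame now: the codec buffer is released below and must not be read afterwards.

ByteBuffer frameCopy = ByteBuffer.allocate(mBufferInfo.size);

frameCopy.put(outputBuffer);

frameCopy.flip();

outputBuffer = frameCopy;

mBufferInfo.offset = 0;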

mSeiMessage = "avcIndex" + String.format("%05d", 0);

}

mMediaCodec.releaseOutputBuffer(status, false);

}

if (mBufferInfo.size > 0) {

mEncodingRunnable.setTimeUs(mBufferInfo.presentationTimeUs);

ByteBuffer seiData = buildSEIData(mSeiMessage);

ByteBuffer frameWithSEI = ByteBuffer.allocate(outputBuffer.remaining() + seiData.remaining());

frameWithSEI.put(seiData);

frameWithSEI.put(outputBuffer);

frameWithSEI.flip();

mBufferInfo.size = frameWithSEI.remaining();

MediaCodec.BufferInfo tmpAudioBufferInfo = new MediaCodec.BufferInfo();

tmpAudioBufferInfo.set(mBufferInfo.offset, mBufferInfo.size, mBufferInfo.presentationTimeUs, mBufferInfo.flags);

if (mAlarm) {

muxerImageItem = mImageList.getItemsInTimeRange(mAlarmStartTime, mAlarmEndTime);

mAlarmStartTime = tmpAudioBufferInfo.presentationTimeUs;

for (ImageList.ImageItem item : muxerImageItem) {

mEncodingRunnable.push(item);

}

mImageList.addItem(tmpAudioBufferInfo.presentationTimeUs, frameWithSEI, tmpAudioBufferInfo);

if (mBufferInfo.presentationTimeUs - mAlarmTime > SAVE_MP4_Internal) {

Log.d(TAG, "mEncodingRunnable.set itemAlarmFalse()");

Log.d(TAG, tmpAudioBufferInfo.presentationTimeUs + " " + mAlarmTime);

mAlarm = false;

mEncodingRunnable.setVideoAlarmFalse();

}

} else {

mImageList.addItem(tmpAudioBufferInfo.presentationTimeUs, frameWithSEI, tmpAudioBufferInfo);

}

}

}

public synchronized void setAlarm() {

synchronized (mLock) {

Log.d(TAG, "setAlarm enter");

mEncodingRunnable.setMediaFormat(mOutputFormat);

mEncodingRunnable.setVideoAlarmTrue();

if (mBufferInfo.presentationTimeUs != 0) {

mAlarmTime = mBufferInfo.presentationTimeUs;

}

mAlarmEndTime = mAlarmTime + SAVE_MP4_Internal;

if (!mAlarm) {

mAlarmStartTime = mAlarmTime - SAVE_MP4_Internal;

}

mAlarm = true;

Log.d(TAG, "setAlarm exit");

}

}

public synchronized void startRecord() throws IllegalStateException {

super.start();

mMediaCodec.start();

}

public synchronized void stopVideoRecord() throws IllegalStateException {

synchronized (mLock) {

mExitThread = true;

mLock.notify();

}

try {

join();

} catch (InterruptedException e) {

e.printStackTrace();

}

mMediaCodec.signalEndOfInputStream();

mMediaCodec.stop();

mMediaCodec.release();

mMediaCodec = null;

}

public void requestRender(int i) {

synchronized (object) {

mOESTextureId = i;

}

}

private void initEgl() {

this.mEGLDisplay = EGL14.eglGetDisplay(EGL14.EGL_DEFAULT_DISPLAY);

if (this.mEGLDisplay == EGL14.EGL_NO_DISPLAY) {

throw new RuntimeException("EGL14.eglGetDisplay fail...");

}

int[] major_version = new int[2];

boolean eglInited = EGL14.eglInitialize(this.mEGLDisplay, major_version, 0, major_version, 1);

if (!eglInited) {

this.mEGLDisplay = null;

throw new RuntimeException("EGL14.eglInitialize fail...");

}

// Attributes used to choose the EGL config

int[] attrib_list = new int[]{

EGL14.EGL_SURFACE_TYPE, EGL14.EGL_WINDOW_BIT,

EGL14.EGL_RENDERABLE_TYPE, EGL14.EGL_OPENGL_ES2_BIT,

EGL14.EGL_RED_SIZE, 8,

EGL14.EGL_GREEN_SIZE, 8,

EGL14.EGL_BLUE_SIZE, 8,

EGL14.EGL_ALPHA_SIZE, 8,

EGL14.EGL_DEPTH_SIZE, 16,

EGL_RECORDABLE_ANDROID, 1,

EGL14.EGL_NONE};

EGLConfig[] configs = new EGLConfig[1];

int[] numConfigs = new int[1];

boolean eglChose = EGL14.eglChooseConfig(this.mEGLDisplay, attrib_list, 0, configs, 0, configs.length, numConfigs, 0);

if (!eglChose) {

throw new RuntimeException("eglChooseConfig [RGBA888 + recordable] ES2 EGL_config_fail...");

}

int[] attr_list = {EGL14.EGL_CONTEXT_CLIENT_VERSION, 2, EGL14.EGL_NONE};

this.mEGLContext = EGL14.eglCreateContext(this.mEGLDisplay, configs[0], this.mSharedContext, attr_list, 0);

checkEglError("eglCreateContext");

if (this.mEGLContext == EGL14.EGL_NO_CONTEXT) {

throw new RuntimeException("eglCreateContext == EGL_NO_CONTEXT");

}

int[] surface_attr = {EGL14.EGL_NONE};

this.mEGLSurface = EGL14.eglCreateWindowSurface(this.mEGLDisplay, configs[0], this.mSurface, surface_attr, 0);

if (this.mEGLSurface == EGL14.EGL_NO_SURFACE) {

throw new RuntimeException("eglCreateWindowSurface == EGL_NO_SURFACE");

}

Log.d(TAG, "initEgl , display=" + this.mEGLDisplay + " ,context=" + this.mEGLContext + " ,sharedContext= " +

this.mSharedContext + ", surface=" + this.mEGLSurface);

boolean success = EGL14.eglMakeCurrent(this.mEGLDisplay, this.mEGLSurface, this.mEGLSurface, this.mEGLContext);

if (!success) {

checkEglError("makeCurrent");

throw new RuntimeException("eglMakeCurrent failed");

}

}

private void unInitEgl() {

boolean success = EGL14.eglMakeCurrent(mEGLDisplay, EGL14.EGL_NO_SURFACE, EGL14.EGL_NO_SURFACE, EGL14.EGL_NO_CONTEXT);

if (!success) {

checkEglError("makeCurrent");

throw new RuntimeException("eglMakeCurrent failed");

}

if (this.mEGLDisplay != EGL14.EGL_NO_DISPLAY) {

EGL14.eglDestroySurface(this.mEGLDisplay, this.mEGLSurface);

EGL14.eglDestroyContext(this.mEGLDisplay, this.mEGLContext);

EGL14.eglTerminate(this.mEGLDisplay);

}

this.mEGLDisplay = EGL14.EGL_NO_DISPLAY;

this.mEGLContext = EGL14.EGL_NO_CONTEXT;

this.mEGLSurface = EGL14.EGL_NO_SURFACE;

this.mSharedContext = EGL14.EGL_NO_CONTEXT;

this.mSurface = null;

}

private boolean swapBuffers() {

if ((null == this.mEGLDisplay) || (null == this.mEGLSurface)) {

return false;

}

boolean success = EGL14.eglSwapBuffers(this.mEGLDisplay, this.mEGLSurface);

if (!success) {

checkEglError("eglSwapBuffers");

}

return success;

}

private void checkEglError(String msg) {

int error = EGL14.eglGetError();

if (error != EGL14.EGL_SUCCESS) {

throw new RuntimeException(msg + ": EGL_ERROR_CODE: 0x" + Integer.toHexString(error));

}

}

private ByteBuffer buildSEIData(String message) {

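// Build the SEI NAL unit: start code, NAL type 6 (SEI), payload type 5 (user_data_unregistered)

int seiSize = 128;

ByteBuffer seiBuffer = ByteBuffer.allocate(seiSize);

seiBuffer.put(new byte[]{0, 0, 0, 1, 6, 5});

// Payload size in one byte; with the 128-byte buffer the message has to stay short

String seiMessage = "h264testdata" + message;

seiBuffer.put((byte) seiMessage.length());

// SEI user data

seiBuffer.put(seiMessage.getBytes());

// rbsp_trailing_bits: a stop bit followed by zero padding closes the SEI

seiBuffer.put((byte) 0x80);

seiBuffer.flip();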

return seiBuffer;

}

}
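buildSEIData above is deliberately simple. For reference, here is a sketch of a fuller user_data_unregistered SEI (payload type 5) as I understand the H.264 layout: a 16-byte UUID precedes the user data, sizes of 255 or more are written as runs of 0xFF, the RBSP ends with 0x80, and emulation-prevention bytes are inserted so the payload can never look like a start code. `SeiBuilder` and `userDataUnregistered` are hypothetical names, and the UUID is an application-chosen value rather than anything defined in this project.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

/** Builds an Annex-B SEI NAL unit carrying user_data_unregistered (hypothetical helper). */
final class SeiBuilder {
    static byte[] userDataUnregistered(byte[] uuid, String message) {
        if (uuid.length != 16) {
            throw new IllegalArgumentException("uuid must be 16 bytes");
        }
        byte[] text = message.getBytes(StandardCharsets.UTF_8);

        // Raw SEI payload before emulation prevention.
        ByteArrayOutputStream rbsp = new ByteArrayOutputStream();
        rbsp.write(5);                              // payloadType 5 = user_data_unregistered
        int size = uuid.length + text.length;
        while (size >= 255) {                       // sizes >= 255 are split into 0xFF bytes
            rbsp.write(0xFF);
            size -= 255;
        }
        rbsp.write(size);
        rbsp.write(uuid, 0, uuid.length);
        rbsp.write(text, 0, text.length);
        rbsp.write(0x80);                           // rbsp_trailing_bits

        // Start code + NAL header (type 6 = SEI), then the escaped payload.
        ByteArrayOutputStream nal = new ByteArrayOutputStream();
        nal.write(new byte[]{0, 0, 0, 1, 0x06}, 0, 5);
        int zeros = 0;
        for (byte b : rbsp.toByteArray()) {
            if (zeros >= 2 && (b & 0xFF) <= 3) {
                nal.write(0x03);                    // emulation prevention byte
                zeros = 0;
            }
            nal.write(b);
            zeros = (b == 0) ? zeros + 1 : 0;
        }
        return nal.toByteArray();
    }
}
```

A decoder-side parser would reverse the steps: strip the 0x03 escape bytes, read the type and size, skip the 16-byte UUID, and decode the remaining bytes as text.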

public class EncodingRunnable extends Thread {

private static final String TAG = "Abbott EncodingRunnable";

private Object mRecordLock = new Object();

private boolean mExitThread = false;

private MediaMuxer mMediaMuxer;

private int avcIndex;

private int mAudioIndex;

private MediaFormat mOutputFormat;

private MediaFormat mAudioOutputFormat;

private ImageList mImageList;

private ImageList mAudioImageList;

private boolean itemAlarm;

private long mAudioImageListTimeUs = -1;

private boolean mAudioAlarm;

private int mVideoCapcity = 1000 / 40 * Param.recordInternal;

private int mAudioCapcity = 1000 / 20 * Param.recordInternal;

private int recordSecond = 1000 * 1000 * 60;

long Video60sStart = -1;

public EncodingRunnable() {

mImageList = new ImageList(mVideoCapcity);

mAudioImageList = new ImageList(mAudioCapcity);

}

private boolean mIsRecoding = false;

public void setMediaFormat(MediaFormat OutputFormat) {

if (mOutputFormat == null) {

mOutputFormat = OutputFormat;

}

}

public void setAudioFormat(MediaFormat OutputFormat) {

if (mAudioOutputFormat == null) {

mAudioOutputFormat = OutputFormat;

}

}

public void setMediaMuxerConfig() {

long currentTimeMillis = System.currentTimeMillis();

Date currentDate = new Date(currentTimeMillis);

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyyMMdd_HHmmss", Locale.getDefault());

String fileName = dateFormat.format(currentDate);

File mFile = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM),

fileName + ".MP4");

Log.d(TAG, "setMediaMuxerSavaPath: new MediaMuxer " + mFile.getPath());

try {

mMediaMuxer = new MediaMuxer(mFile.getPath(), MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);

} catch (IOException e) {

e.printStackTrace();

}

avcIndex = mMediaMuxer.addTrack(mOutputFormat);

mAudioIndex = mMediaMuxer.addTrack(mAudioOutputFormat);

mMediaMuxer.start();

}

public void setMediaMuxerSavaPath() {

if (!mIsRecoding) {

mExitThread = false;

setMediaMuxerConfig();

setRecording();

notifyStartRecord();

}

}

@Override

public void run() {

super.run();

while (true) {

synchronized (mRecordLock) {

try {

mRecordLock.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

MediaCodec.BufferInfo tmpAudioBufferInfo = new MediaCodec.BufferInfo();

while (mIsRecoding) {

if (mAudioImageList.getSize() > 0) {

ImageList.ImageItem audioItem = mAudioImageList.getItem();

tmpAudioBufferInfo.set(audioItem.getVideoBufferInfo().offset,

audioItem.getVideoBufferInfo().size,

audioItem.getVideoBufferInfo().presentationTimeUs + mAudioImageListTimeUs,

audioItem.getVideoBufferInfo().flags);

mMediaMuxer.writeSampleData(mAudioIndex, audioItem.getVideoByteBuffer(), tmpAudioBufferInfo);

mAudioImageList.removeItem();

}

if (mImageList.getSize() > 0) {

ImageList.ImageItem item = mImageList.getItem();

if (Video60sStart < 0) {

Video60sStart = item.getVideoBufferInfo().presentationTimeUs;

}

mMediaMuxer.writeSampleData(avcIndex, item.getVideoByteBuffer(), item.getVideoBufferInfo());

if (item.getVideoBufferInfo().presentationTimeUs - Video60sStart > recordSecond) {

Log.d(TAG, "System.currentTimeMillis() - Video60sStart :" + (item.getVideoBufferInfo().presentationTimeUs - Video60sStart));

mMediaMuxer.stop();

mMediaMuxer.release();

mMediaMuxer = null;

setMediaMuxerConfig();

Video60sStart = -1;

}

mImageList.removeItem();

}

if (itemAlarm == false && mAudioAlarm == false) {

mIsRecoding = false;

Log.d(TAG, "mediaMuxer.stop()");

mMediaMuxer.stop();

mMediaMuxer.release();

mMediaMuxer = null;

break;

}

}

if (mExitThread) {

break;

}

}

}

public synchronized void setRecording() throws IllegalStateException {

synchronized (mRecordLock) {

mIsRecoding = true;

}

}

public synchronized void setAudioAlarmTrue() throws IllegalStateException {

synchronized (mRecordLock) {

mAudioAlarm = true;

}

}

public synchronized void setVideoAlarmTrue() throws IllegalStateException {

synchronized (mRecordLock) {

itemAlarm = true;

}

}

public synchronized void setAudioAlarmFalse() throws IllegalStateException {

synchronized (mRecordLock) {

mAudioAlarm = false;

}

}

public synchronized void setVideoAlarmFalse() throws IllegalStateException {

synchronized (mRecordLock) {

itemAlarm = false;

}

}

public synchronized void notifyStartRecord() throws IllegalStateException {

synchronized (mRecordLock) {

mRecordLock.notify();

}

}

public synchronized void push(ImageList.ImageItem item) {

mImageList.addItem(item.getTimestamp(),

item.getVideoByteBuffer(),

item.getVideoBufferInfo());

}

public synchronized void pushAudio(ImageList.ImageItem item) {

synchronized (mRecordLock) {

mAudioImageList.addItem(item.getTimestamp(),

item.getVideoByteBuffer(),

item.getVideoBufferInfo());

}

}

public synchronized void setTimeUs(long l) {

if (mAudioImageListTimeUs != -1) {

return;

}

mAudioImageListTimeUs = l;

Log.d(TAG, "setTimeUs: " + l);

}

public synchronized void setExitThread() {

mExitThread = true;

mIsRecoding = false;

notifyStartRecord();

try {

join();

} catch (InterruptedException e) {

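// Restore the interrupt flag so the caller can still observe the interruption.

Thread.currentThread().interrupt();

e.printStackTrace();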

}

}

}
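The 60-second file rotation inside the encoding loop above is easier to reason about when pulled out on its own. Below is a minimal sketch of just that decision, assuming a hypothetical helper class `SegmentSplitter` (not part of the original project) that is fed the `presentationTimeUs` of every video sample after it has been written. It mirrors the `Video60sStart` / `recordSecond` logic and nothing more.

```java
/**
 * Hypothetical helper (not in the original project): decides when the
 * current MP4 file should be closed and a new one started, based only on
 * the presentation timestamps of the video samples.
 */
final class SegmentSplitter {
    private final long segmentDurationUs;
    // -1 means no sample has been written to the current segment yet,
    // the same convention the original code uses for Video60sStart.
    private long segmentStartUs = -1;

    SegmentSplitter(long segmentDurationUs) {
        if (segmentDurationUs <= 0) {
            throw new IllegalArgumentException("segmentDurationUs must be > 0");
        }
        this.segmentDurationUs = segmentDurationUs;
    }

    /**
     * Call once per video sample, right after it was handed to the muxer.
     *
     * @return true when the current file is longer than the configured
     *         duration and should be stopped, released and re-created
     */
    boolean onSampleWritten(long presentationTimeUs) {
        if (segmentStartUs < 0) {
            segmentStartUs = presentationTimeUs; // first sample of this segment
        }
        if (presentationTimeUs - segmentStartUs > segmentDurationUs) {
            segmentStartUs = -1; // the next sample opens a new segment
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        SegmentSplitter splitter = new SegmentSplitter(60_000_000L); // 60 s in microseconds
        long frameIntervalUs = 33_333L; // roughly 30 fps, an assumption for the demo
        int rotations = 0;
        for (long pts = 0; pts < 130_000_000L; pts += frameIntervalUs) {
            if (splitter.onSampleWritten(pts)) {
                rotations++;
            }
        }
        System.out.println("rotations in 130 s: " + rotations);
    }
}
```

One thing this sketch does not handle: when the muxer is re-created, the first video sample of the new file should normally be a key frame, otherwise the start of the file may not decode cleanly. Whether that matters depends on the encoder's key-frame interval, so treat it as something to verify rather than a given.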

Finally, a look at Camera2Renderer and MainActivity.

Camera2Renderer

Camera2Renderer implements GLSurfaceView.Renderer, and it is the class that drives all of the code above.

public class Camera2Renderer implements GLSurfaceView.Renderer {

private static final String TAG = "Abbott Camera2Renderer";

final private Context mContext;

final private GLSurfaceView mGlSurfaceView;

private Camera2 mCamera;

private int[] mTexture = new int[1];

private SurfaceTexture mSurfaceTexture;

private Surface mSurface;

private OesTexture mOesTexture;

private EGLContext mEglContext = null;

private VideoRecorder mVideoRecorder;

private EncodingRunnable mEncodingRunnable;

private AudioEncoder mAudioEncoder;

public Camera2Renderer(Context context, GLSurfaceView glSurfaceView, EncodingRunnable encodingRunnable) {

mContext = context;

mGlSurfaceView = glSurfaceView;

mEncodingRunnable = encodingRunnable;

}

@Override

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

mCamera = new Camera2(mContext);

mCamera.openCamera(1920, 1080, "0");

mOesTexture = new OesTexture();

mOesTexture.init();

mEglContext = EGL14.eglGetCurrentContext();

mVideoRecorder = new VideoRecorder(mEglContext, mEncodingRunnable);

mVideoRecorder.startRecord();

try {

mAudioEncoder = new AudioEncoder(mEncodingRunnable);

mAudioEncoder.start();

} catch (IOException e) {

e.printStackTrace();

}

}

@Override

public void onSurfaceChanged(GL10 gl, int width, int height) {

GLES30.glGenTextures(1, mTexture, 0);

GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mTexture[0]);

GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_MIN_FILTER, GL10.GL_NEAREST);

GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_MAG_FILTER, GL10.GL_LINEAR);

GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_WRAP_S, GL10.GL_CLAMP_TO_EDGE);

GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GL10.GL_TEXTURE_WRAP_T, GL10.GL_CLAMP_TO_EDGE);

GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, 0);

mSurfaceTexture = new SurfaceTexture(mTexture[0]);

mSurfaceTexture.setDefaultBufferSize(1920, 1080);

mSurfaceTexture.setOnFrameAvailableListener(new SurfaceTexture.OnFrameAvailableListener() {

@Override

public void onFrameAvailable(SurfaceTexture surfaceTexture) {

mGlSurfaceView.requestRender();

}

});

mSurface = new Surface(mSurfaceTexture);

mCamera.startPreview(mSurface);

}

@Override

public void onDrawFrame(GL10 gl) {

mSurfaceTexture.updateTexImage();

mOesTexture.PrepareTexture(mTexture[0]);

mVideoRecorder.requestRender(mTexture[0]);

}

public VideoRecorder getVideoRecorder() {

return mVideoRecorder;

}

public AudioEncoder getAudioEncoder() {

return mAudioEncoder;

}

}
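A note on the renderer above: `onSurfaceChanged` can be called more than once (it runs after the surface is created and again whenever the surface size changes), and as written it generates a new texture and calls `startPreview` every time. A possible guard is sketched below, assuming a hypothetical `OneTimeSetup` helper that is not part of the original project; the texture and preview setup would be passed in as the task.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Hypothetical helper (not in the original project): runs a setup task
 * on the first call only, so repeated renderer callbacks do not repeat it.
 */
final class OneTimeSetup {
    private final AtomicBoolean done = new AtomicBoolean(false);

    /**
     * Runs the task if it has not been run before.
     *
     * @return true if the task ran during this call, false if it was skipped
     */
    boolean runOnce(Runnable task) {
        // compareAndSet flips the flag atomically, so only one caller wins.
        if (done.compareAndSet(false, true)) {
            task.run();
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        OneTimeSetup setup = new OneTimeSetup();
        Runnable createTextureAndStartPreview =
                () -> System.out.println("creating texture and starting preview");
        setup.runOnce(createTextureAndStartPreview); // runs the task
        setup.runOnce(createTextureAndStartPreview); // skipped
    }
}
```

If the EGL context is lost and `onSurfaceCreated` runs again, the guard would have to be reset as well; that case is left out of the sketch.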

MainActivity is fairly simple; it mostly just requests the permissions.

public class MainActivity extends AppCompatActivity {

private static final String TAG = "Abbott MainActivity";

private static final String FRAGMENT_DIALOG = "dialog";

private final Object mLock = new Object();

private GLSurfaceView mGlSurfaceView;

private Button mRecordButton;

private Button mExitButton;

private Camera2Renderer mCamera2Renderer;

private VideoRecorder mVideoRecorder;

private EncodingRunnable mEncodingRunnable;

private AudioEncoder mAudioEncoder;

private static final int REQUEST_CAMERA_PERMISSION = 1;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

if (ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED

|| ContextCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED

|| ContextCompat.checkSelfPermission(this, Manifest.permission.READ_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED

|| ContextCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {

requestCameraPermission();

return;

}

setContentView(R.layout.activity_main);

mGlSurfaceView = findViewById(R.id.glView);

mRecordButton = findViewById(R.id.recordBtn);

mExitButton = findViewById(R.id.exit);

mGlSurfaceView.setEGLContextClientVersion(3);

mEncodingRunnable = new EncodingRunnable();

mEncodingRunnable.start();

mCamera2Renderer = new Camera2Renderer(this, mGlSurfaceView, mEncodingRunnable);

mGlSurfaceView.setRenderer(mCamera2Renderer);

mGlSurfaceView.setRenderMode(GLSurfaceView.RENDERMODE_WHEN_DIRTY);

}

@Override

protected void onResume() {

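super.onResume();

// onCreate() returns before inflating the layout when permissions are missing,

// so the buttons may not exist yet.

if (mRecordButton == null || mExitButton == null) {

return;

}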

mRecordButton.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

synchronized (MainActivity.this) {

startRecord();

}

}

});

mExitButton.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View view) {

stopRecord();

Log.d(TAG, "onClick: exit program");

finish();

}

});

}

private void requestCameraPermission() {

if (shouldShowRequestPermissionRationale(Manifest.permission.CAMERA) ||

shouldShowRequestPermissionRationale(Manifest.permission.WRITE_EXTERNAL_STORAGE) ||

shouldShowRequestPermissionRationale(Manifest.permission.RECORD_AUDIO)) {

new ConfirmationDialog().show(getSupportFragmentManager(), FRAGMENT_DIALOG);

} else {

requestPermissions(new String[]{Manifest.permission.CAMERA,

Manifest.permission.WRITE_EXTERNAL_STORAGE,

Manifest.permission.RECORD_AUDIO}, REQUEST_CAMERA_PERMISSION);

}

}

public static class ConfirmationDialog extends DialogFragment {

@NonNull

@Override

public Dialog onCreateDialog(Bundle savedInstanceState) {

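// The dialog is shown from the Activity's FragmentManager, so getParentFragment()

// would return null here; use the hosting Activity instead.

final Activity activity = getActivity();

return new AlertDialog.Builder(activity)

.setMessage(R.string.request_permission)

.setPositiveButton(android.R.string.ok, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialog, int which) {

// Ask for the permissions once the user has read the rationale.

activity.requestPermissions(new String[]{Manifest.permission.CAMERA,

Manifest.permission.WRITE_EXTERNAL_STORAGE,

Manifest.permission.RECORD_AUDIO}, REQUEST_CAMERA_PERMISSION);

}

})

.setNegativeButton(android.R.string.cancel,

new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialog, int which) {

if (activity != null) {

activity.finish();

}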

}

})

.create();

}

}

private void startRecord() {

synchronized (mLock) {

try {

if (mVideoRecorder == null) {

mVideoRecorder = mCamera2Renderer.getVideoRecorder();

}

if (mAudioEncoder == null) {

mAudioEncoder = mCamera2Renderer.getAudioEncoder();

}

mVideoRecorder.setAlarm();

mAudioEncoder.setAlarm();

mEncodingRunnable.setMediaMuxerSavaPath();

Log.d(TAG, "Start Record ");

} catch (Exception e) {

e.printStackTrace();

}

}

}

private void stopRecord() {

if (mVideoRecorder == null) {

mVideoRecorder = mCamera2Renderer.getVideoRecorder();

}

if (mAudioEncoder == null) {

mAudioEncoder = mCamera2Renderer.getAudioEncoder();

}

mEncodingRunnable.setExitThread();

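// The recorders are created in onSurfaceCreated(), so they can still be null

// if the user exits before the GL surface exists.

if (mVideoRecorder != null) {

mVideoRecorder.stopVideoRecord();

}

if (mAudioEncoder != null) {

mAudioEncoder.stopAudioRecord();

}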

}

}
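The Activity above requests permissions but does not override `onRequestPermissionsResult`, so nothing happens after the user answers the system dialog. The check such a callback usually needs can be sketched in plain Java as follows. `PermissionResults` is a hypothetical helper name; the constant is defined locally with the same value as `PackageManager.PERMISSION_GRANTED` (0) only so the sketch compiles without the Android SDK.

```java
/**
 * Hypothetical helper (not in the original project) for evaluating the
 * grantResults array that Android passes to onRequestPermissionsResult.
 */
final class PermissionResults {
    /** Same value as PackageManager.PERMISSION_GRANTED on Android. */
    static final int PERMISSION_GRANTED = 0;

    private PermissionResults() {
    }

    /**
     * @return true only if every requested permission was granted; an empty
     *         or null array is treated as a cancelled request
     */
    static boolean allGranted(int[] grantResults) {
        if (grantResults == null || grantResults.length == 0) {
            return false; // the request was interrupted or cancelled
        }
        for (int result : grantResults) {
            if (result != PERMISSION_GRANTED) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // -1 is the value of PackageManager.PERMISSION_DENIED.
        System.out.println(allGranted(new int[]{0, 0, 0}));
        System.out.println(allGranted(new int[]{0, -1, 0}));
    }
}
```

In the Activity, one option would be to call `recreate()` when `allGranted(grantResults)` is true, so that `onCreate` runs again and this time gets past the permission check, and to call `finish()` otherwise. That wiring is a suggestion, not something the original code does.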

Original article: https://blog.csdn.net/weixin_42255569/article/details/135916122
